Basit O. Alawode

Meta-Optimization of Deep CNN for Image Denoising Using LSTM

Jul 14, 2021

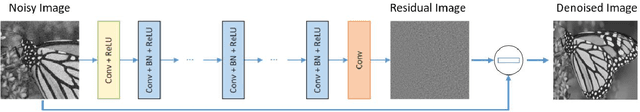

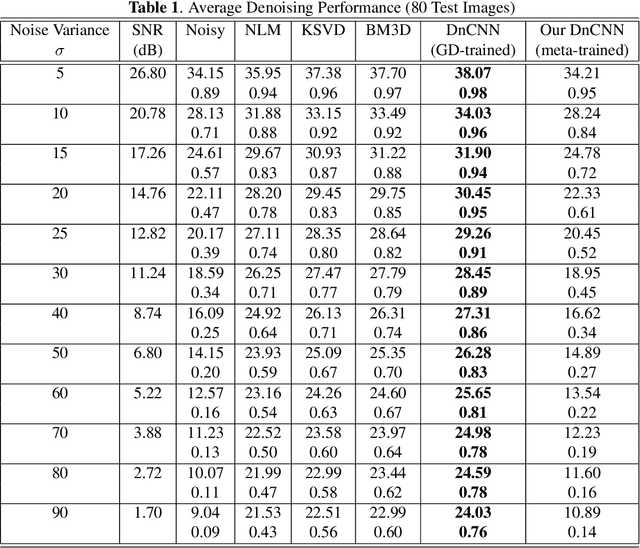

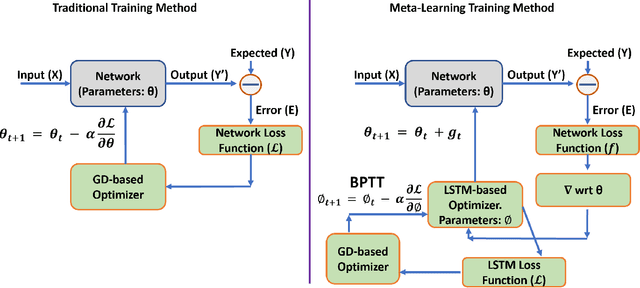

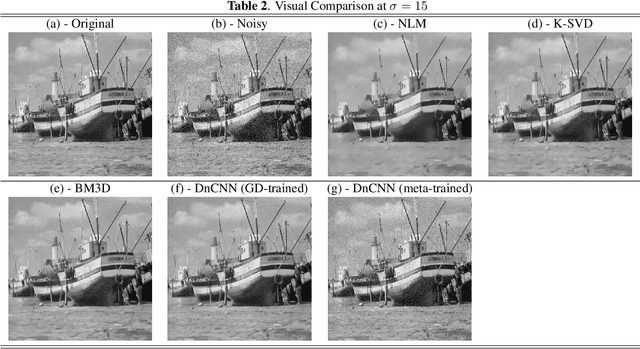

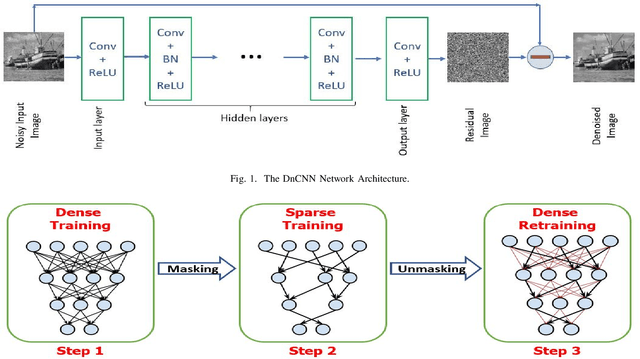

Abstract: The recent application of deep learning (DL) to various tasks has seen classical techniques surpassed by their DL-based counterparts. DL has likewise been applied to removing noise from images. In particular, deep feed-forward denoising convolutional neural networks (DnCNNs) have been investigated for this task. DnCNN uses advances in DL such as deep architectures, residual learning, and batch normalization to achieve better denoising performance than other classical state-of-the-art denoising algorithms. However, its deep architecture results in a huge set of trainable parameters. Meta-optimization is a training approach that enables algorithms to learn how to train themselves. Algorithms trained with meta-optimizers have been shown to achieve better performance than those trained with the classical gradient-descent-based approach. In this work, we investigate applying the meta-optimization training approach to the DnCNN denoising algorithm to enhance its denoising capability. Our preliminary experiments on simpler algorithms show the promise of meta-optimization for enhancing DnCNN's denoising capability.
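To make the idea of meta-optimization concrete, the following Python sketch learns a parameter of the optimizer rather than the weights of a model. It is a deliberately simplified stand-in, not the method described above: a single learned step size `phi` replaces the LSTM optimizer, random quadratic problems replace DnCNN training, and the meta-gradient is estimated with finite differences instead of backpropagating through the inner loop. All names (`make_task`, `train`, `meta_loss`, `meta_train`) are hypothetical.

```python
import numpy as np

def make_task(rng, dim=5):
    """Hypothetical toy task: f(theta) = 0.5 theta^T A theta - b^T theta."""
    eigs = rng.uniform(1.0, 4.0, size=dim)  # curvatures in [1, 4)
    return np.diag(eigs), rng.normal(size=dim)

def train(phi, A, b, steps=20):
    """Inner loop: the 'optimizer' is a gradient step scaled by the learnable phi.
    Returns the excess loss f(theta_T) - f(theta*) after `steps` updates."""
    theta = np.zeros_like(b)
    for _ in range(steps):
        theta = theta - phi * (A @ theta - b)
    theta_star = np.linalg.solve(A, b)
    f = lambda t: 0.5 * t @ A @ t - b @ t
    return f(theta) - f(theta_star)

def meta_loss(phi, tasks):
    """How well the optimizer with parameter phi trains, averaged over tasks."""
    return np.mean([train(phi, A, b) for A, b in tasks])

def meta_train(tasks, phi=0.05, meta_lr=0.005, meta_steps=200, eps=1e-4):
    """Outer loop: tune the optimizer's parameter by descending the meta-loss.
    The meta-gradient is a central finite difference (an assumption for brevity)."""
    for _ in range(meta_steps):
        g = (meta_loss(phi + eps, tasks) - meta_loss(phi - eps, tasks)) / (2 * eps)
        # Keep phi where the inner loop is stable for curvatures below 4 (phi < 0.5).
        phi = float(np.clip(phi - meta_lr * g, 1e-3, 0.45))
    return phi

rng = np.random.default_rng(0)
tasks = [make_task(rng) for _ in range(8)]
phi0 = 0.05
phi = meta_train(tasks, phi=phi0)
print(f"phi: {phi0} -> {phi:.3f}")
print(f"meta-loss: {meta_loss(phi0, tasks):.4f} -> {meta_loss(phi, tasks):.4f}")
```

In the learning-to-learn setting, an LSTM meta-optimizer generalizes this by replacing the fixed rule `theta - phi * grad` with a recurrent network that maps each gradient (and its own hidden state) to an update; the network's weights are then trained by the same kind of outer loop.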

Details Preserving Deep Collaborative Filtering-Based Method for Image Denoising

Jul 14, 2021

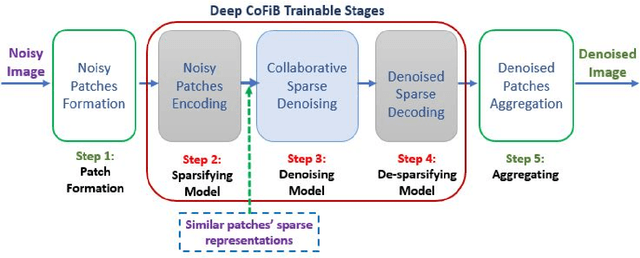

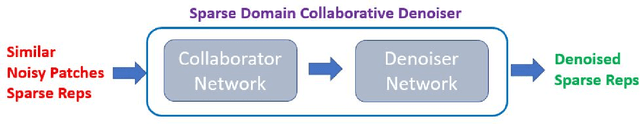

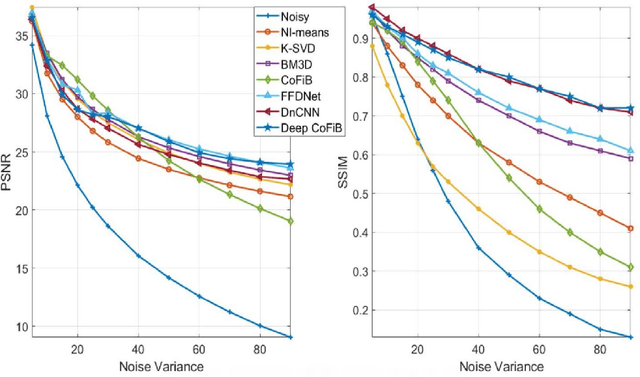

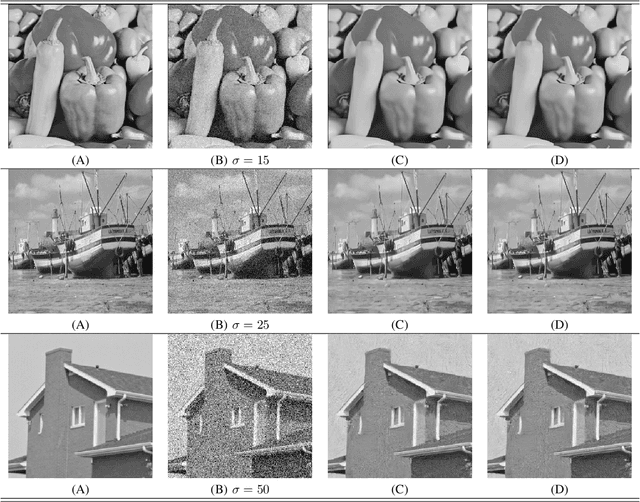

Abstract: Despite the improvements achieved by denoising algorithms over the years, many of them still fail to preserve the fine details of an image after denoising, because they tend to smooth out the image. Most neural-network-based algorithms achieve better quantitative performance than classical denoising algorithms, but their qualitative (visual) performance also suffers from this smoothing effect. In this paper, we propose a deep collaborative filtering-based (Deep-CoFiB) algorithm for image denoising that addresses this shortcoming. The algorithm performs collaborative denoising of image patches in the sparse domain using a set of optimized neural network models. The result is a fast algorithm that achieves an excellent trade-off between noise removal and detail preservation. Extensive experiments show that Deep-CoFiB performs better than many state-of-the-art denoising algorithms both quantitatively (in terms of PSNR and SSIM) and qualitatively (visually).
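The core step of sparse-domain collaborative filtering can be illustrated with a short NumPy sketch: similar patches are stacked into a group, the group is transformed into a sparse domain, small coefficients are shrunk jointly, and the group is transformed back. This is a simplification in the style of BM3D's hard-thresholding stage, not the Deep-CoFiB implementation: a fixed 3-D DCT with a hard threshold of `thr * sigma` stands in for the optimized neural network models, and the helper names (`dct_matrix`, `group_similar`, `collaborative_denoise`) are hypothetical.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix: D @ x is the DCT of a length-n vector, D.T inverts it."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    D = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    D[0, :] /= np.sqrt(2.0)
    return D

def group_similar(patches, ref_idx, K):
    """Stack the K patches closest (squared L2 distance) to patches[ref_idx], itself included."""
    d = np.sum((patches - patches[ref_idx]) ** 2, axis=(1, 2))
    return patches[np.argsort(d)[:K]]

def collaborative_denoise(group, sigma, thr=2.7):
    """Jointly denoise a (K, p, p) group in a 3-D DCT domain by hard thresholding.
    Because the transform is orthonormal, the noise keeps standard deviation sigma there."""
    K, p, _ = group.shape
    Dp, Dk = dct_matrix(p), dct_matrix(K)
    c = np.einsum("ab,kbc,dc->kad", Dp, group, Dp)  # 2-D DCT of every patch
    c = np.einsum("jk,kad->jad", Dk, c)             # 1-D DCT across the group
    c[np.abs(c) < thr * sigma] = 0.0                # collaborative shrinkage
    c = np.einsum("jk,jad->kad", Dk, c)             # inverse 1-D DCT across the group
    return np.einsum("ba,kbc,cd->kad", Dp, c, Dp)   # inverse 2-D DCT of every patch

# Toy example: 32 noisy copies of one smooth 8x8 patch.
rng = np.random.default_rng(1)
sigma = 0.1
clean = np.tile(0.5 + 0.3 * np.arange(8) / 7, (8, 1))
patches = clean + sigma * rng.normal(size=(32, 8, 8))
group = group_similar(patches, ref_idx=0, K=16)
denoised = collaborative_denoise(group, sigma)
print("MSE noisy:   ", np.mean((group - clean) ** 2))
print("MSE denoised:", np.mean((denoised - clean) ** 2))
```

In a full denoiser, this step would be repeated with every patch as the reference and the overlapping denoised patches aggregated back into the image; in Deep-CoFiB, learned models take the place of the fixed threshold used here.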

Collaborative Filtering-Based Method for Low-Resolution and Details Preserving Image Denoising

Jul 10, 2021

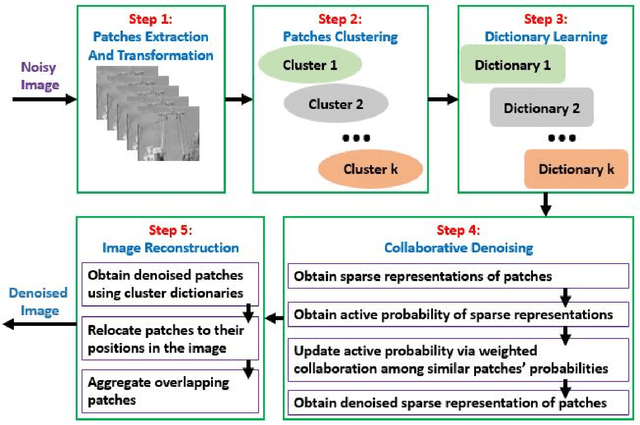

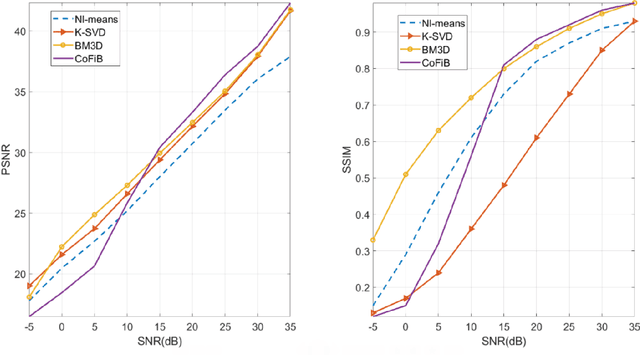

Abstract: Over the years, the many image denoising algorithms that have been proposed have progressively improved denoising performance. Despite this, many of these state-of-the-art algorithms tend to smooth out the denoised image, losing some image details in the process. Many also distort lower-resolution images, causing partial or complete structural loss. In this paper, we address these shortcomings by proposing a collaborative filtering-based (CoFiB) denoising algorithm. The proposed algorithm performs weighted collaborative denoising in the sparse domain, taking advantage of the fact that similar patches tend to have similar sparse representations. This allows the algorithm to strike a balance between image detail preservation and noise removal. Our extensive experiments show that CoFiB not only preserves image details but also performs excellently on images of any resolution, including low resolutions, where many denoising algorithms struggle.
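One plausible reading of weighted sparse-domain collaborative denoising is sketched below: each similar patch is given a sparse code (here, a hard-thresholded 2-D DCT), and the reference patch's code is estimated as a similarity-weighted average of the codes in its group. The DCT basis, the exponential weights with filtering parameter `h`, the threshold `thr`, and the function names are assumptions for illustration, not CoFiB's published details.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix (rows are basis vectors)."""
    k, i = np.arange(n)[:, None], np.arange(n)[None, :]
    D = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    D[0, :] /= np.sqrt(2.0)
    return D

def weighted_sparse_estimate(patches, ref_idx, sigma, K=16, h=None, thr=3.0):
    """Estimate the clean version of patches[ref_idx] from its K most similar patches.
    patches: array of shape (N, p, p); sigma: noise standard deviation."""
    h = sigma if h is None else h
    D = dct_matrix(patches.shape[1])
    d = np.mean((patches - patches[ref_idx]) ** 2, axis=(1, 2))  # per-pixel distance
    idx = np.argsort(d)[:K]                                        # K most similar patches
    # Two noisy copies of the same content differ by about 2*sigma^2 per pixel,
    # so only the excess distance is penalized.
    w = np.exp(-np.maximum(d[idx] - 2 * sigma ** 2, 0.0) / h ** 2)
    codes = np.einsum("ab,kbc,dc->kad", D, patches[idx], D)       # 2-D DCT of each patch
    codes[np.abs(codes) < thr * sigma] = 0.0                       # sparse code per patch
    code = np.tensordot(w / w.sum(), codes, axes=1)                # weighted collaborative code
    return D.T @ code @ D                                          # back to the pixel domain

# Toy example: 20 noisy copies of a ramp and 20 of the mirrored ramp.
rng = np.random.default_rng(2)
sigma = 0.1
ramp = np.tile(0.5 + 0.3 * np.arange(8) / 7, (8, 1))
clean = np.concatenate([np.repeat(ramp[None], 20, 0), np.repeat(ramp[None, :, ::-1], 20, 0)])
noisy = clean + sigma * rng.normal(size=clean.shape)
est = weighted_sparse_estimate(noisy, ref_idx=0, sigma=sigma)
print("MSE noisy ref:", np.mean((noisy[0] - ramp) ** 2))
print("MSE estimate: ", np.mean((est - ramp) ** 2))
```

The weighting lets patches that most resemble the reference dominate the estimate, which limits how much dissimilar structure (the mirrored ramps here) leaks into it.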

Dense-Sparse Deep CNN Training for Image Denoising

Jul 10, 2021

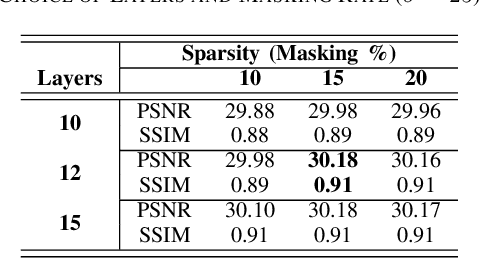

Abstract: Recently, deep learning (DL) methods such as convolutional neural networks (CNNs) have gained prominence in image denoising, owing to their proven ability to surpass state-of-the-art classical image denoising algorithms such as BM3D. Deep denoising CNNs (DnCNNs) use many feed-forward convolution layers, with batch normalization and residual learning added as regularization methods, to improve denoising performance significantly. However, this comes at the expense of a huge number of trainable parameters. In this paper, we address this issue by reducing the number of parameters while achieving a comparable level of performance. We draw motivation from the improved performance obtained by training networks with the dense-sparse-dense (DSD) training approach. We extend this training approach to a reduced DnCNN (RDnCNN), resulting in a faster denoising network with significantly fewer parameters and performance comparable to that of DnCNN.
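The dense-sparse-dense schedule itself is simple to express. The sketch below applies it to a linear least-squares model trained by gradient descent, which stands in for the RDnCNN (an assumption made to keep the example small): train densely, prune the smallest-magnitude weights and retrain under the mask, then lift the mask and retrain all weights, with the pruned ones restarting from zero. The reduced learning rate in the final phase mirrors the original DSD recipe; the sparsity level, step counts, and function names are hypothetical.

```python
import numpy as np

def loss(w, X, y):
    """Mean squared error of the linear model X @ w."""
    return float(np.mean((X @ w - y) ** 2))

def grad(w, X, y):
    """Gradient of the mean squared error with respect to w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def train(w, X, y, steps, lr, mask=None):
    """Gradient descent; with a mask, pruned weights are held at zero."""
    for _ in range(steps):
        w = w - lr * grad(w, X, y)
        if mask is not None:
            w = w * mask
    return w

def dsd(X, y, sparsity=0.5, steps=300, lr=0.1):
    """Dense -> Sparse -> Dense training; requires 0 <= sparsity < 1."""
    w = train(np.zeros(X.shape[1]), X, y, steps, lr)   # 1) dense phase
    k = int(sparsity * w.size)
    thresh = np.sort(np.abs(w))[k]                      # the k smallest magnitudes fall below this
    mask = (np.abs(w) >= thresh).astype(float)
    w_sparse = train(w * mask, X, y, steps, lr, mask)   # 2) sparse phase under the mask
    w_final = train(w_sparse, X, y, steps, lr / 10)     # 3) re-dense: pruned weights restart at 0
    return w_sparse, w_final, mask

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))
w_true = np.where(rng.random(20) < 0.5, 0.0, rng.normal(size=20))
y = X @ w_true + 0.05 * rng.normal(size=200)
w_sparse, w_final, mask = dsd(X, y)
print("weights kept in sparse phase:", int(mask.sum()), "of", mask.size)
print("loss after sparse phase:   ", loss(w_sparse, X, y))
print("loss after re-dense phase: ", loss(w_final, X, y))
```

For a network such as RDnCNN, the same three phases would apply per layer to the convolution kernels; the sparse phase acts as a regularizer, and the re-dense phase restores capacity starting from the pruned solution.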
