Barzan A. Yosuf

AI-Driven Resource Allocation in Optical Wireless Communication Systems

Apr 08, 2023

Abstract: Visible light communication (VLC) is a promising solution for satisfying the extreme demands of emerging applications. VLC offers bandwidth that is orders of magnitude greater than that of the radio spectrum, so making the best use of these resources is not a trivial matter. There is growing interest in making next-generation communication networks intelligent by using AI-based tools that automate resource management and adapt to variations in the network automatically, as opposed to conventional handcrafted schemes based on mathematical models that assume prior knowledge of the network. In this article, a reinforcement learning (RL) scheme is developed to intelligently allocate the resources of an optical wireless communication (OWC) system in a HetNet environment. The main goal is to maximise the total reward of the system, which is the sum rate of all users. The results of the RL scheme are compared with those of an optimization scheme based on a Mixed Integer Linear Programming (MILP) model.
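
To make the reward definition concrete, the sketch below uses a hypothetical toy setup rather than the paper's model. It treats the assignment of users to access points as a stateless, single-step problem and learns it with epsilon-greedy action-value estimates, which is a multi-armed-bandit simplification of RL. The reward of an action is the system sum rate: each AP shares its bandwidth equally among the users it serves, and each user gets the Shannon rate of its share. The SNR values, bandwidths, `sum_rate` and `epsilon_greedy` are all illustrative assumptions, and exhaustive search stands in for the MILP benchmark.

```python
import itertools
import math
import random

# Hypothetical toy setup (not from the paper): linear SNR of each user on each
# access point (AP), and each AP's bandwidth in MHz. AP 0 stands in for a VLC
# AP and AP 1 for an RF AP in the HetNet.
SNR = [
    [30.0, 10.0],  # user 0
    [5.0, 8.0],    # user 1
    [50.0, 4.0],   # user 2
]
BANDWIDTH_MHZ = [20.0, 5.0]


def sum_rate(assignment):
    """Sum rate in Mbit/s when user u is served by AP assignment[u].

    Each AP splits its bandwidth equally among the users it serves, and each
    user gets the Shannon rate of its share.
    """
    load = [assignment.count(ap) for ap in range(len(BANDWIDTH_MHZ))]
    total = 0.0
    for user, ap in enumerate(assignment):
        share_mhz = BANDWIDTH_MHZ[ap] / load[ap]
        total += share_mhz * math.log2(1.0 + SNR[user][ap])
    return total


def epsilon_greedy(actions, reward_fn, episodes=500, epsilon=0.1, seed=0):
    """Stateless epsilon-greedy learner whose reward is the system sum rate."""
    rng = random.Random(seed)
    q = {a: 0.0 for a in actions}  # running mean reward per action
    n = {a: 0 for a in actions}    # how often each action was taken
    for _ in range(episodes):
        untried = [a for a in actions if n[a] == 0]
        if untried:
            action = untried[0]               # take every action once first
        elif rng.random() < epsilon:
            action = rng.choice(actions)      # explore
        else:
            action = max(actions, key=q.get)  # exploit
        reward = reward_fn(action)
        n[action] += 1
        q[action] += (reward - q[action]) / n[action]  # incremental mean
    return max(actions, key=q.get)


# An action assigns every user to one AP: 2 APs ** 3 users = 8 actions.
actions = list(itertools.product(range(len(BANDWIDTH_MHZ)), repeat=len(SNR)))
learned = epsilon_greedy(actions, sum_rate)
optimum = max(actions, key=sum_rate)  # exhaustive search stands in for the MILP
print(learned, round(sum_rate(learned), 2))
print(optimum, round(sum_rate(optimum), 2))
```

Because this toy reward is deterministic, the learner ends up selecting the same allocation as exhaustive search. With time-varying channels or per-user state, a full RL formulation (states, transitions, discounting) would be needed.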

Energy Efficient UAV-Based Service Offloading over Cloud-Fog Architectures

May 14, 2022

Abstract: Unmanned Aerial Vehicles (UAVs) are poised to play a central role in revolutionizing the future services offered by envisioned smart cities, thanks to their agility, flexibility, and cost-efficiency. UAVs are being widely deployed in different verticals, including surveillance, search and rescue missions, item delivery, and as infrastructure for aerial communications in future wireless networks. UAVs can be used to survey target locations, collect raw data from the ground (e.g., video streams), generate computing tasks, and offload them to the available servers for processing. In this work, we formulate a multi-objective optimization framework for both the network resource allocation problem and the UAV trajectory planning problem using a Mixed Integer Linear Programming (MILP) model. To account for the different stakeholders that may exist in a Cloud-Fog environment, we minimize a weighted-sum objective function, which allows network operators to tune the weights to emphasize or de-emphasize different cost functions such as the end-to-end network power consumption (EENPC), processing power consumption (PPC), UAVs' total flight distance (UAVTFD), and UAVs' total power consumption (UAVTPC). Our optimization models and results enable optimal offloading decisions to be made under different constraints relating to EENPC, PPC, UAVTFD and UAVTPC, which we explore in detail. For example, when the UAV propulsion efficiency (UPE) is at its worst (10% is considered), offloading via the macro base station is the best choice, and a maximum power saving of 34% can be achieved. Extensive studies on UAV coverage path planning (CPP) and computation offloading have been conducted, but none has tackled the problem in a practical, distributed Cloud-Fog architecture in which the access, metro and core layers are all considered in service offloading.
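
A weighted-sum objective of this kind can be illustrated without a solver. The sketch below scores three hypothetical offloading decisions on the four cost terms named in the abstract, normalizes each term by its largest value so that watts and kilometres are comparable, and picks the decision with the lowest weighted sum. The decision names, cost values and normalization are assumptions for illustration only. Enumeration replaces the paper's MILP, which also plans the UAV trajectories.

```python
# Hypothetical per-decision costs (illustrative numbers, not results from the paper).
# EENPC, PPC and UAVTPC in W; UAVTFD in km.
CANDIDATES = {
    "fog_via_macro_bs":   {"EENPC": 40.0, "PPC": 30.0, "UAVTFD": 2.0, "UAVTPC": 50.0},
    "fog_via_uav_relay":  {"EENPC": 20.0, "PPC": 30.0, "UAVTFD": 5.0, "UAVTPC": 120.0},
    "cloud_via_macro_bs": {"EENPC": 70.0, "PPC": 15.0, "UAVTFD": 2.5, "UAVTPC": 50.0},
}
METRICS = ("EENPC", "PPC", "UAVTFD", "UAVTPC")


def weighted_cost(costs, weights, scale):
    """Weighted sum of cost terms, each divided by its largest value across candidates."""
    return sum(weights[m] * costs[m] / scale[m] for m in METRICS)


def best_decision(weights):
    """Return the candidate decision with the lowest weighted-sum cost."""
    scale = {m: max(c[m] for c in CANDIDATES.values()) for m in METRICS}
    return min(CANDIDATES, key=lambda name: weighted_cost(CANDIDATES[name], weights, scale))


# Two hypothetical stakeholders who weight the same cost terms differently.
operator_view = {"EENPC": 1.0, "PPC": 0.0, "UAVTFD": 0.0, "UAVTPC": 0.0}
uav_view = {"EENPC": 0.0, "PPC": 0.0, "UAVTFD": 1.0, "UAVTPC": 1.0}
print(best_decision(operator_view))
print(best_decision(uav_view))
```

Shifting weight from EENPC to the UAV terms changes the preferred decision. That is the tuning knob a weighted objective gives to operators.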

Energy-Efficient Distributed Machine Learning in Cloud Fog Networks

May 20, 2021

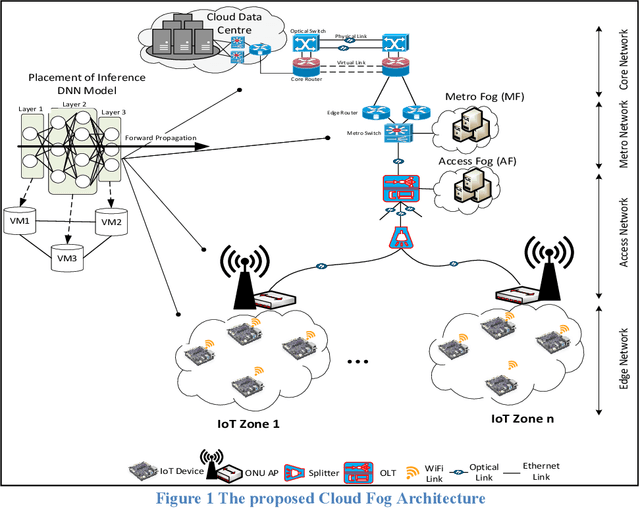

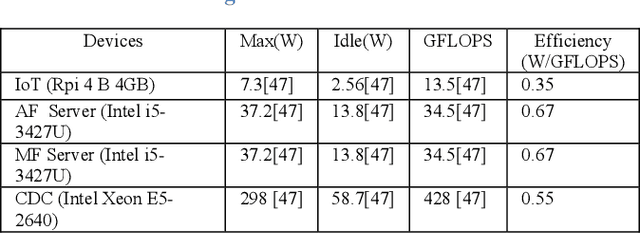

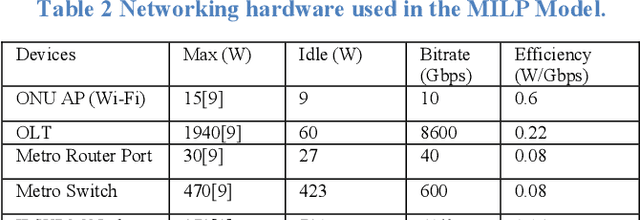

Abstract: Massive amounts of data are expected to be generated by the billions of objects that form the Internet of Things (IoT). A variety of automated services, such as monitoring, will largely depend on the use of different Machine Learning (ML) algorithms. Traditionally, ML models are processed by centralized cloud data centers, to which IoT readings are offloaded via multiple networking hops in the access, metro, and core layers. This approach will inevitably lead to excessive networking power consumption as well as Quality-of-Service (QoS) degradation, such as increased latency. Instead, in this paper, we propose a distributed ML approach in which processing can take place in intermediary devices such as IoT nodes and fog servers, in addition to the cloud. We abstract the ML models into Virtual Service Requests (VSRs) that represent the multiple interconnected layers of a Deep Neural Network (DNN). Using Mixed Integer Linear Programming (MILP), we design an optimization model that allocates the layers of a DNN in a Cloud/Fog Network (CFN) in an energy-efficient way. We evaluate the impact of the DNN input distribution on the performance of the CFN and compare the energy efficiency of this approach with that of a baseline in which all DNN layers are processed in the centralized Cloud Data Center (CDC).
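
One way to picture this layer allocation is a chain of DNN layers mapped onto a single IoT-fog-cloud path. Each layer costs processing energy on its host, and each hop costs networking energy for the activations that cross it. The sketch below enumerates placements exhaustively instead of solving a MILP. The node names, energy coefficients, capacities and layer sizes are hypothetical. Real VSRs would be embedded over a full access, metro and core topology rather than one path.

```python
import itertools
import math

# Hypothetical path from sensor to cloud (illustrative values, not from the paper).
NODES = ["iot", "fog", "cloud"]
JOULE_PER_GFLOP = [2.0, 1.0, 0.5]          # processing energy per GFLOP on each node
CAPACITY_GFLOP = [1.0, 5.0, math.inf]      # compute available at each node
HOP_JOULE_PER_MB = [0.2, 1.0]              # iot->fog (access), fog->cloud (metro + core)
INPUT_MB = 4.0                             # raw sensor reading produced at the IoT node
# One entry per DNN layer: (compute in GFLOP, output size in MB).
LAYERS = [(0.5, 1.0), (2.0, 0.2), (3.0, 0.05)]


def energy(placement):
    """Total energy when layer i runs on node placement[i]; None if over capacity."""
    load = [0.0] * len(NODES)
    total, location, data_mb = 0.0, 0, INPUT_MB
    for (gflop, out_mb), node in zip(LAYERS, placement):
        # Ship the current data over every hop between the previous node and this one.
        total += data_mb * sum(HOP_JOULE_PER_MB[location:node])
        total += gflop * JOULE_PER_GFLOP[node]
        load[node] += gflop
        location, data_mb = node, out_mb
    if any(used > cap for used, cap in zip(load, CAPACITY_GFLOP)):
        return None
    return total


# Data only flows towards the cloud, so node indices are non-decreasing across layers.
feasible = {}
for p in itertools.combinations_with_replacement(range(len(NODES)), len(LAYERS)):
    e = energy(p)
    if e is not None:
        feasible[p] = e

best = min(feasible, key=feasible.get)
baseline = energy((2,) * len(LAYERS))  # every layer in the cloud data center
print([NODES[n] for n in best], round(feasible[best], 2), round(baseline, 2))
```

With these made-up numbers, the first layer runs on the IoT node and shrinks the data before it crosses the costly metro and core hops. That split placement beats the all-in-cloud baseline. Changing `INPUT_MB` or the layer output sizes moves the best split point.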

Energy-Efficient AI over a Virtualized Cloud Fog Network

May 07, 2021

Abstract: Deep Neural Networks (DNNs) have served as a catalyst for a plethora of next-generation services in the era of the Internet of Things (IoT), thanks to the massive amounts of data collected by objects at the edge. Currently, DNN models are used to deliver many Artificial Intelligence (AI) services, including image and natural language processing, speech recognition, and robotics. These services rely on DNN models that are too computationally intensive to deploy on edge devices alone. Thus, most AI models are offloaded to distant cloud data centers (CDCs), which tend to consolidate large amounts of computing and storage resources in a small number of sites. Deploying services in the CDC will inevitably lead to excessive latency and an overall increase in power consumption. Fog computing, instead, extends cloud services to the edge of the network, so that data processing can be performed closer to the end-user device. However, unlike cloud data centers, fog nodes have limited computational power and are highly distributed across the network. In this paper, using Mixed Integer Linear Programming (MILP), we formulate the placement of DNN inference models, which we abstract as a network embedding problem, in a Cloud Fog Network (CFN) architecture, where power savings are obtained through trade-offs between processing and networking. We study the performance of the CFN architecture by comparing its energy savings against the baseline approach, which is the CDC.
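
The processing-versus-networking trade-off can be seen in a small sketch that places whole inference requests on either a fog node or the cloud. Fog processing is assumed to be less power-efficient but cheaper to reach, while traffic to the cloud crosses power-hungry metro and core links. A fog node also adds idle power, but only when it is switched on. That on/off choice is the kind of decision a MILP captures with a binary variable. The host names, all power figures and the exhaustive search are hypothetical stand-ins, not the paper's formulation.

```python
import itertools

# Hypothetical hosts (illustrative numbers, not from the paper):
# (idle W, W per GFLOPS, capacity in GFLOPS, network W per Mbps to reach the host)
HOSTS = {
    "fog":   (20.0, 8.0, 10.0, 0.1),
    "cloud": (0.0, 3.0, float("inf"), 5.0),  # assumed always on, so no extra idle cost
}
# Hypothetical DNN inference requests: (compute in GFLOPS, traffic in Mbps).
REQUESTS = [(4.0, 10.0), (3.0, 5.0), (5.0, 2.0)]


def total_power(assignment, hosts=HOSTS):
    """Processing + networking power; a host's idle power counts only if it is used."""
    used = {h: 0.0 for h in hosts}
    power = 0.0
    for (gflops, mbps), host in zip(REQUESTS, assignment):
        _, w_per_gflops, _, net_w_per_mbps = hosts[host]
        power += gflops * w_per_gflops + mbps * net_w_per_mbps
        used[host] += gflops
    for host, load in used.items():
        idle_w, _, capacity, _ = hosts[host]
        if load > capacity:
            return None      # infeasible: host over capacity
        if load > 0:
            power += idle_w  # host switched on (a binary variable in a MILP)
    return power


def best_assignment(hosts=HOSTS):
    """Exhaustively search every request-to-host assignment for the lowest power."""
    options = {}
    for a in itertools.product(hosts, repeat=len(REQUESTS)):
        p = total_power(a, hosts)
        if p is not None:
            options[a] = p
    best = min(options, key=options.get)
    return best, options[best]


print(best_assignment())
```

With these numbers, the two traffic-heavy requests go to the fog node and the compute-heavy, low-traffic request goes to the cloud. Raising the fog idle power to 70 W makes the all-cloud baseline the cheapest option.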
