Sanaa H. Mohamed

Access-Point to Access-Point Connectivity for PON-based OWC Spine and Leaf Data Centre Architecture

Apr 22, 2024

Abstract:In this paper, we propose incorporating Optical Wireless Communication (OWC) and Passive Optical Network (PON) technologies into next generation spine-and-leaf Data Centre Networks (DCNs). In this work, OWC systems are used to connect the Data Centre (DC) racks through Wavelength Division Multiplexing (WDM) Infrared (IR) transceivers. The transceivers are placed on top of the racks and at distributed Access Points (APs) in the ceiling. Each transceiver on a rack is connected to a leaf switch that connects the servers within the rack. We replace the spine switches by Optical Line Terminal (OLT) and Network Interface Cards (NIC) in the APs to achieve the desired connectivity. We benchmark the power consumption of the proposed OWC-PON-based spine-and-leaf DC against a traditional spine-and-leaf DC and report a 46% reduction in the power consumption when considering eight racks.

Multiuser beam steering OWC system based on NOMA

Apr 10, 2023

Abstract:In this paper, we propose applying Non-Orthogonal Multiple Access (NOMA) technology in a multiuser beam steering OWC system. We study the performance of the NOMA-based multiuser beam steering system in terms of the achievable rate and Bit Error Rate (BER). We investigate the impact of the power allocation factor of NOMA and the number of users in the room. The results show that the power allocation factor is a vital parameter in NOMA-based transmission that affects the performance of the network in terms of data rate and BER.
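To illustrate why the power allocation factor matters, the following is a minimal sketch of the generic textbook two-user power-domain NOMA rate expressions with successive interference cancellation (SIC). It is not the system model of the paper: the function name, the parameter names, and the Shannon-type rate formula are assumptions made for illustration, and an OWC link would need its own channel gain and noise model.

```python
import math


def noma_two_user_rates(alpha, total_power, gain_weak, gain_strong, noise_power):
    """Return (rate_weak, rate_strong) in bit/s/Hz for two-user power-domain NOMA.

    alpha is the fraction of the total power given to the weak (low-gain) user.
    All names and the rate model are illustrative assumptions.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")

    p_weak = alpha * total_power
    p_strong = (1.0 - alpha) * total_power

    # The weak user decodes its own signal and treats the strong user's
    # signal as interference.
    sinr_weak = p_weak * gain_weak / (p_strong * gain_weak + noise_power)

    # The strong user first removes the weak user's signal via SIC,
    # then decodes its own signal without interference.
    snr_strong = p_strong * gain_strong / noise_power

    return math.log2(1.0 + sinr_weak), math.log2(1.0 + snr_strong)


if __name__ == "__main__":
    for alpha in (0.6, 0.7, 0.8, 0.9):
        weak, strong = noma_two_user_rates(alpha, 1.0, 1.0, 4.0, 0.2)
        print(f"alpha={alpha:.1f}  weak={weak:.3f}  strong={strong:.3f}")
```

In this simplified model, raising the allocation factor increases the weak user's rate and lowers the strong user's rate, which is the kind of trade-off the abstract refers to.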

Relay Assisted Multiuser OWC Systems under Human Blockage

Apr 10, 2023

Abstract:This paper proposes using cooperative communication based on optoelectronic (O-E-O) amplify-and-forward relay terminals to reduce the influence of the blockage and shadowing resulting from human movement in a beam steering Optical Wireless Communication (OWC) system. The simulation results indicate that on average, the outage probability of the cooperative communication mode with O-E-O relay terminals is two orders of magnitude lower than the outage probability of the system without relay terminals.

Energy Efficient VM Placement in a Heterogeneous Fog Computing Architecture

Mar 27, 2022

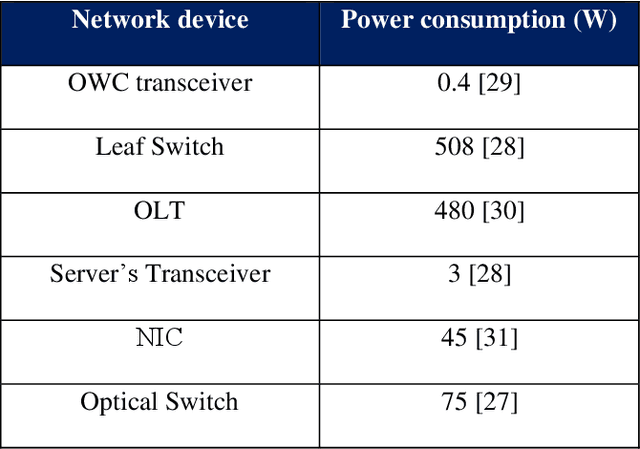

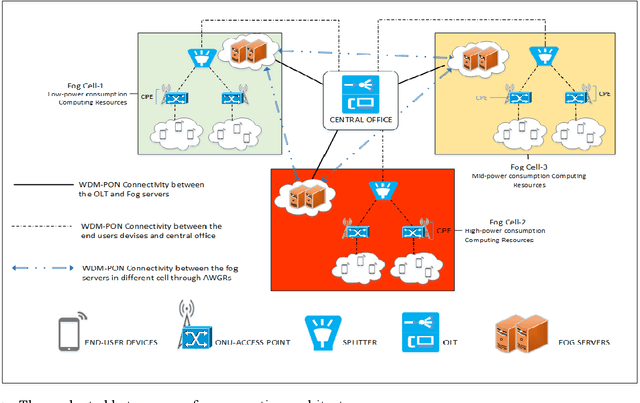

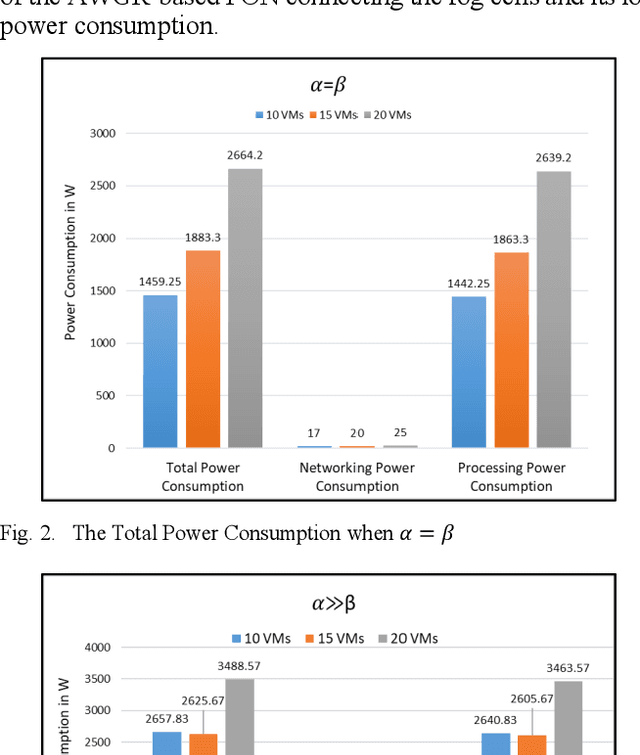

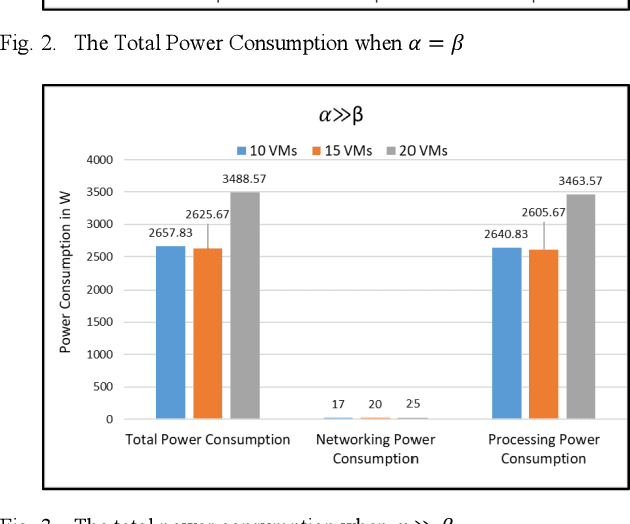

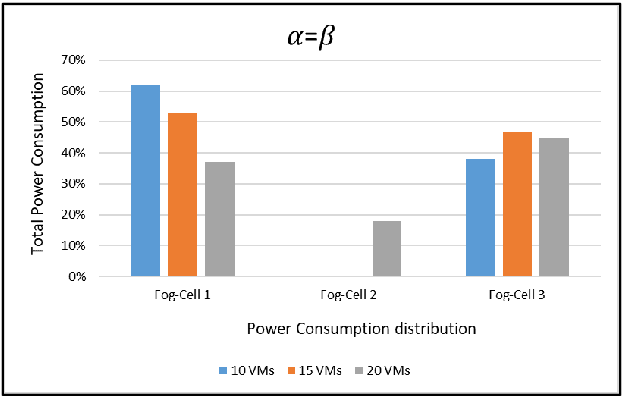

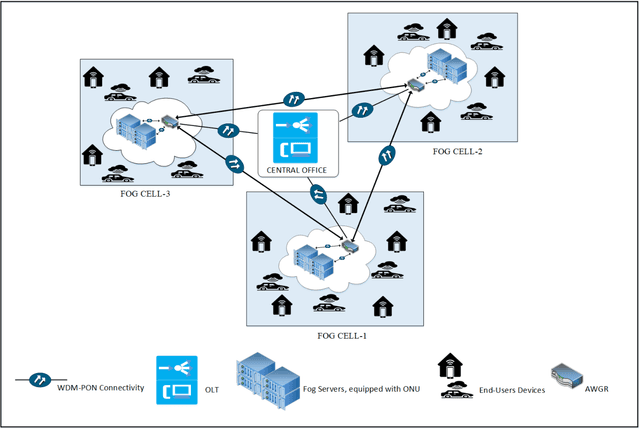

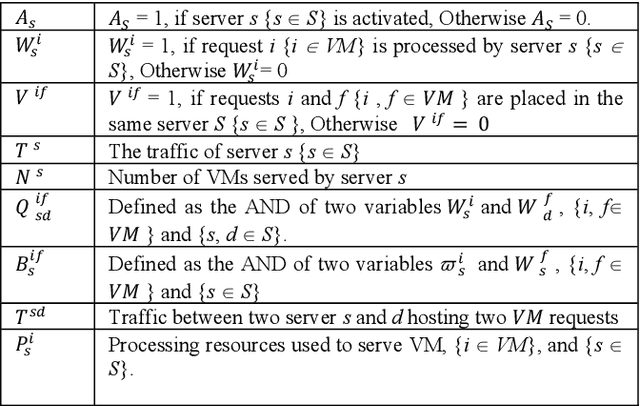

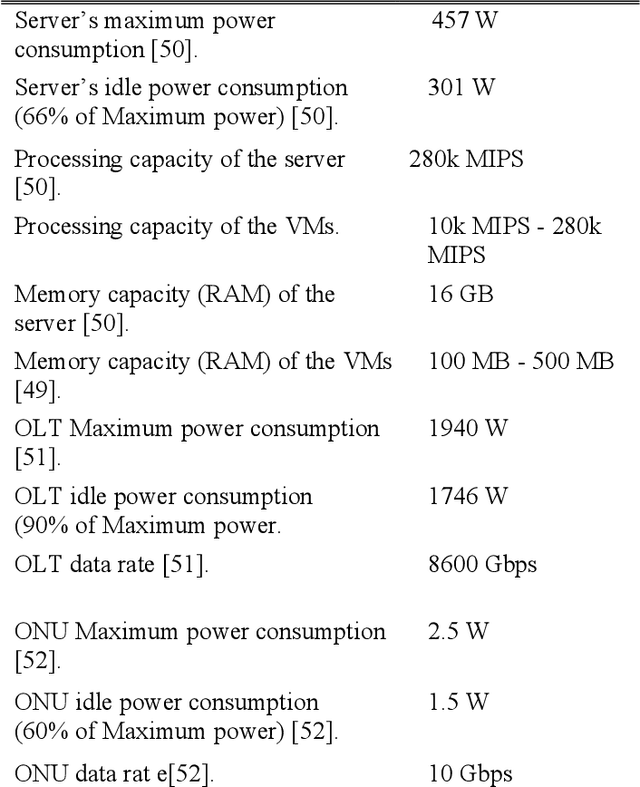

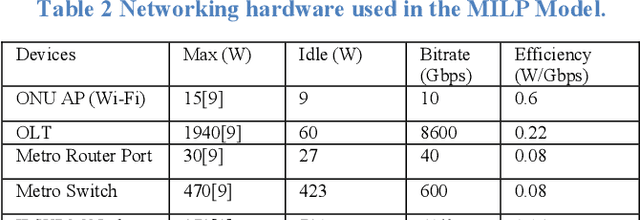

Abstract:Recent years have witnessed a remarkable development in communication and computing systems, mainly driven by the increasing demands of data and processing intensive applications such as virtual reality, M2M, connected vehicles, and IoT services, to name a few. Massive amounts of data will be collected by various mobile and fixed terminals that will need to be processed in order to extract knowledge from the data. Traditionally, a centralized approach is taken for processing the collected data using large data centers connected to a core network. However, due to the scale of the Internet-connected things, transporting raw data all the way to the core network is costly in terms of the power consumption, delay, and privacy. This has compelled researchers to propose different decentralized computing paradigms such as fog computing to process collected data at the network edge close to the terminals and users. In this paper, we study, in a Passive Optical Network (PON)-based collaborative-fog computing system, the impact of the heterogeneity of the fog units' capacity and energy efficiency on the overall energy efficiency of the fog system. We optimized the virtual machine (VM) placement in this fog system with three fog cells and formulated the problem as a mixed integer linear programming (MILP) optimization model with the objective of minimizing the networking and processing power consumption of the fog system. The results indicate that in our proposed architecture, the processing power consumption is the crucial element to achieve energy-efficient VM placement.
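As a rough illustration of power-aware VM placement across heterogeneous fog units, the sketch below exhaustively searches a toy instance. It is not the MILP formulated in the paper: it uses brute-force enumeration instead of a solver, models only processing power as a linear function of load, and ignores networking power. The function name, parameters, and example figures are all hypothetical.

```python
from itertools import product


def place_vms(vm_cpu, node_capacity, node_watts_per_cpu):
    """Brute-force the placement of VMs on nodes that minimizes processing power.

    vm_cpu[i]             CPU demand of VM i
    node_capacity[j]      CPU capacity of node j
    node_watts_per_cpu[j] power drawn per CPU unit on node j (assumed linear)

    Returns (assignment, power), where assignment[i] is the node hosting VM i,
    or (None, None) if no feasible placement exists.
    """
    best_power, best_assignment = None, None
    num_nodes = len(node_capacity)

    # Enumerate every mapping of VMs to nodes (feasible only for tiny instances).
    for assignment in product(range(num_nodes), repeat=len(vm_cpu)):
        load = [0.0] * num_nodes
        for vm, node in enumerate(assignment):
            load[node] += vm_cpu[vm]

        # Skip placements that exceed any node's capacity.
        if any(l > c for l, c in zip(load, node_capacity)):
            continue

        power = sum(l * w for l, w in zip(load, node_watts_per_cpu))
        if best_power is None or power < best_power:
            best_power, best_assignment = power, assignment

    return best_assignment, best_power


if __name__ == "__main__":
    # Two heterogeneous nodes: node 0 is small but efficient, node 1 is larger
    # but draws twice the power per CPU unit.
    print(place_vms([2, 2, 1], [3, 4], [1.0, 2.0]))
```

In this toy instance the search fills the more efficient node first and spills the remaining VM onto the less efficient one, which reflects how differences in capacity and efficiency between fog units shape the placement.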

Angle Diversity Transmitter For High Speed Data Center Uplink Communications

Oct 29, 2021

Abstract:This paper proposes an uplink optical wireless communication (OWC) link design that can be used by data centers to support communication in spine and leaf architectures between the top of rack leaf switches and large spine switches whose access points are mounted in the ceiling. The use of optical wireless links reduces cabling and allows easy reconfigurability, for example when data centres expand. We consider three racks in a data center where each rack contains an Angle Diversity Transmitter (ADT) positioned on the top of the rack to realize the uplink function of a top-of-the-rack (ToR) or a leaf switch. Four receivers are considered to be installed on the ceiling where each is connected to a spine switch. Two types of optical receivers are studied: a Wide Field-of-View Receiver (WFOVR) and an Angle Diversity Receiver (ADR). The performance of the proposed system is evaluated when the links run at data rates higher than 19 Gbps. The results indicate that the proposed approach achieves excellent performance using simple On-Off Keying (OOK).

Energy Efficient Resource Allocation in Federated Fog Computing Networks

Oct 28, 2021

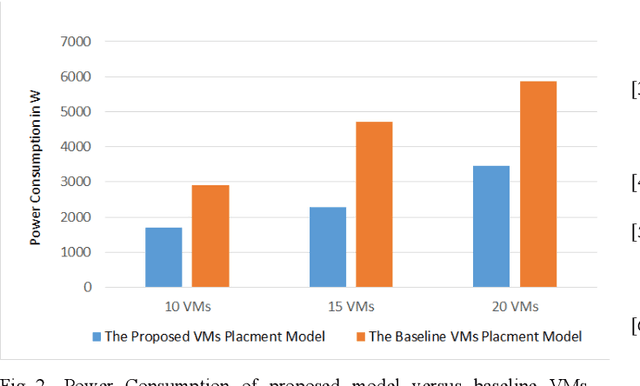

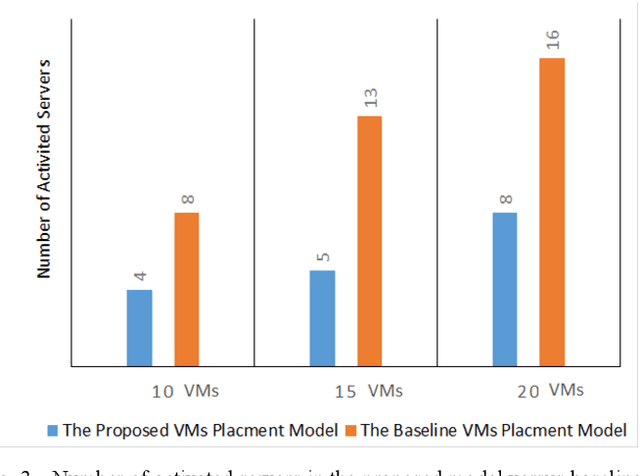

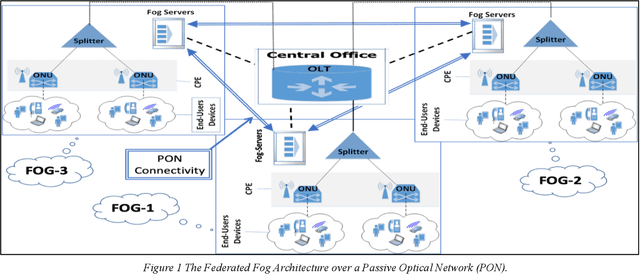

Abstract:There is a continuous growth in demand for time sensitive applications which has shifted the cloud paradigm from a centralized computing architecture towards distributed heterogeneous computing platforms where resources located at the edge of the network are used to provide cloud-like services. This paradigm is widely known as fog computing. Virtual machines (VMs) have been widely utilized in both paradigms to enhance network scalability and improve resource utilization and energy efficiency. Moreover, Passive Optical Networks (PONs) are a technology suited to handling the enormous volumes of data generated in the access network due to their energy efficiency and large bandwidth. In this paper, we utilize a PON to provide the connectivity between multiple distributed fog units to achieve federated (i.e. cooperative) computing units in the access network to serve intensive demands. We propose a mixed integer linear program (MILP) to optimize the VM placement in the federated fog computing units with the objective of minimizing the total power consumption while considering inter-VM traffic. The results show that the proposed optimization model achieves a power saving of up to 52% in VM allocation compared to a baseline approach that allocates the VM requests while neglecting power consumption and inter-VM traffic in the optimization framework.

Energy Minimized Federated Fog Computing over Passive Optical Networks

May 21, 2021

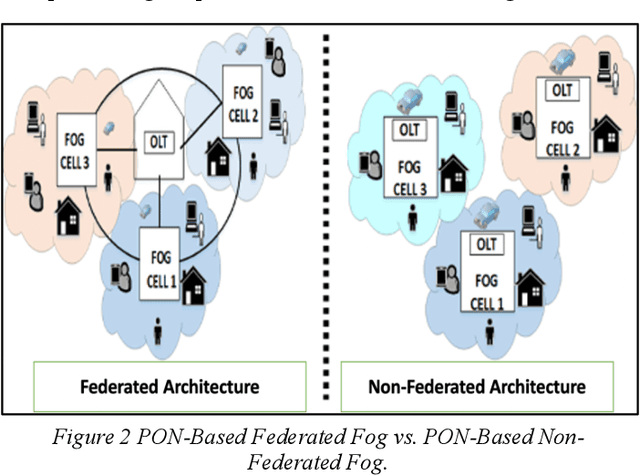

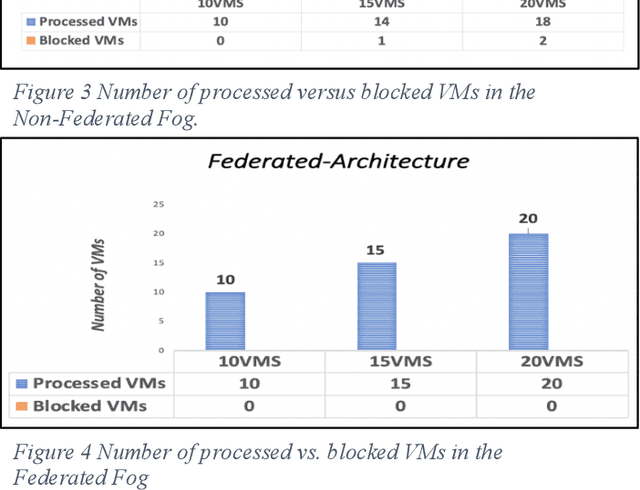

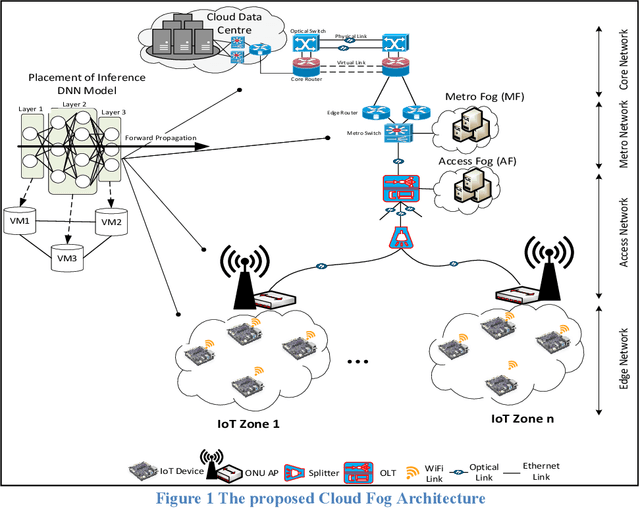

Abstract:The rapid growth of time-sensitive applications and services has driven enhancements to computing infrastructures. The main challenge that needs addressing for these applications is the optimal placement of the end-users' demands to reduce the total power consumption and delay. One of the widely adopted paradigms to address such a challenge is fog computing. Placing fog units close to end-users at the edge of the network can help mitigate some of the latency and energy efficiency issues. Compared to the traditional hyperscale cloud data centres, fog computing units are constrained by computational power; hence, the capacity of fog units plays a critical role in meeting the stringent demands of the end-users due to intensive processing workloads. In this paper, we aim to optimize the placement of virtual machine (VM) demands originating from end-users in a fog computing setting by formulating a Mixed Integer Linear Programming (MILP) model to minimize the total power consumption through the use of a federated architecture made up of multiple distributed fog cells. The obtained results show an increase in processing capacity in the fog layer and a reduction in the power consumption by up to 26% compared to the non-federated fog network.

Energy-Efficient Distributed Machine Learning in Cloud Fog Networks

May 20, 2021

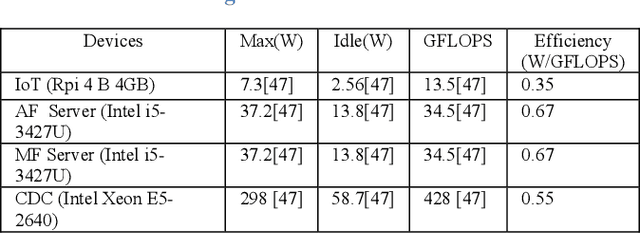

Abstract:Massive amounts of data are expected to be generated by the billions of objects that form the Internet of Things (IoT). A variety of automated services such as monitoring will largely depend on the use of different Machine Learning (ML) algorithms. Traditionally, ML models are processed by centralized cloud data centers, where IoT readings are offloaded to the cloud via multiple networking hops in the access, metro, and core layers. This approach will inevitably lead to excessive networking power consumption as well as Quality-of-Service (QoS) degradation such as increased latency. Instead, in this paper, we propose a distributed ML approach where the processing can take place in intermediary devices such as IoT nodes and fog servers in addition to the cloud. We abstract the ML models into Virtual Service Requests (VSRs) to represent multiple interconnected layers of a Deep Neural Network (DNN). Using Mixed Integer Linear Programming (MILP), we design an optimization model that allocates the layers of a DNN in a Cloud/Fog Network (CFN) in an energy efficient way. We evaluate the impact of DNN input distribution on the performance of the CFN and compare the energy efficiency of this approach to the baseline where all layers of DNNs are processed in the centralized Cloud Data Center (CDC).

Energy-Efficient AI over a Virtualized Cloud Fog Network

May 07, 2021

Abstract:Deep Neural Networks (DNNs) have served as a catalyst in introducing a plethora of next-generation services in the era of Internet of Things (IoT), thanks to the availability of massive amounts of data collected by the objects on the edge. Currently, DNN models are used to deliver many Artificial Intelligence (AI) services that include image and natural language processing, speech recognition, and robotics. Accordingly, such services utilize various DNN models that make it computationally intensive for deployment on the edge devices alone. Thus, most AI models are offloaded to distant cloud data centers (CDCs), which tend to consolidate large amounts of computing and storage resources into one or more CDCs. Deploying services in the CDC will inevitably lead to excessive latencies and an overall increase in power consumption. Instead, fog computing allows for cloud services to be extended to the edge of the network, which allows for data processing to be performed closer to the end-user device. However, different from cloud data centers, fog nodes have limited computational power and are highly distributed in the network. In this paper, using Mixed Integer Linear Programming (MILP), we formulate the placement of DNN inference models, which is abstracted as a network embedding problem in a Cloud Fog Network (CFN) architecture, where power savings are introduced through trade-offs between processing and networking. We evaluate the energy savings of the CFN architecture relative to the baseline approach, which is the CDC.
