Bartosz Kostka

Towards Using Context-Dependent Symbols in CTC Without State-Tying Decision Trees

Jan 14, 2019

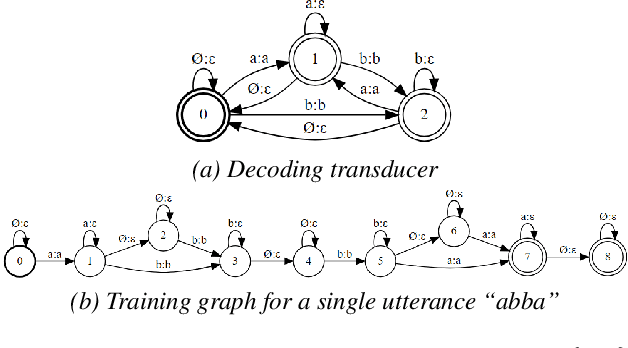

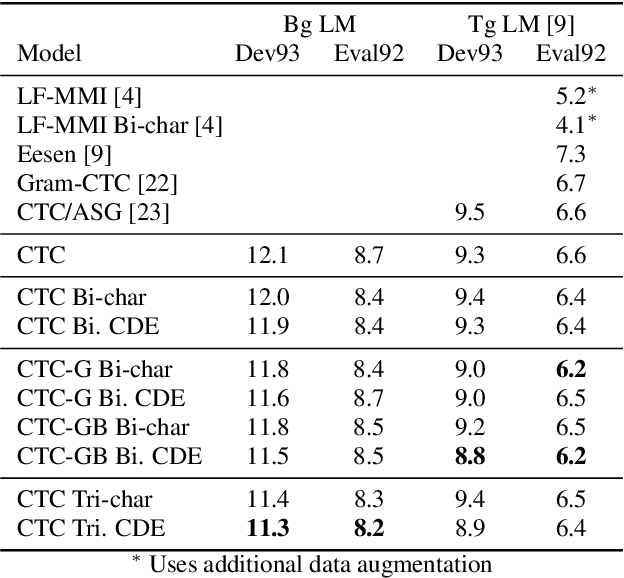

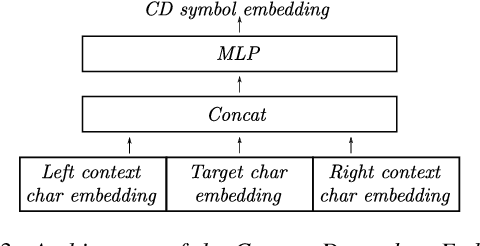

Abstract: Deep neural acoustic models benefit from context-dependent modeling of output symbols. However, their use requires state-tying decision trees that are typically transferred from classical GMM-HMM systems. In this work we consider direct training of CTC networks with context-dependent outputs. The state-tying decision tree is replaced with a neural network that predicts the weights of the final softmax classifier in a context-dependent way. This network is trained jointly with the rest of the acoustic model, which removes one of the last cases in which neural systems have to be bootstrapped from GMM-HMM ones. We describe the changes to the CTC cost function that are needed to accommodate context-dependent symbols and validate the idea on a bigram context-dependent system built for character-based WSJ.

Text-based Adventures of the Golovin AI Agent

May 16, 2017

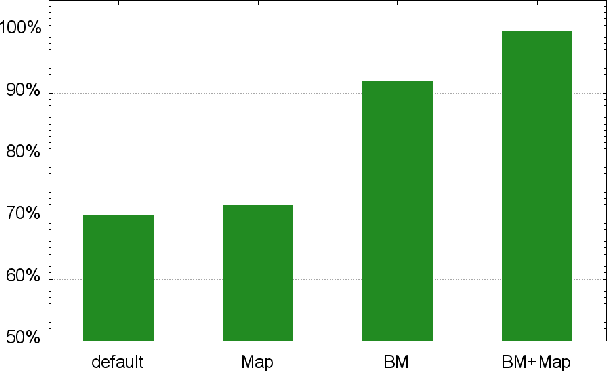

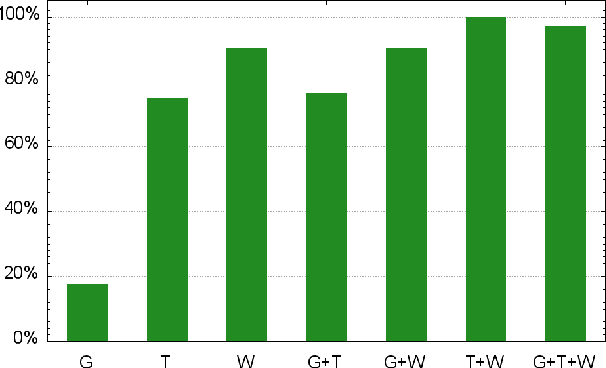

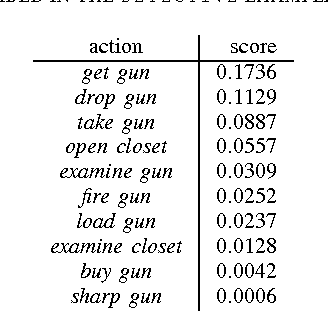

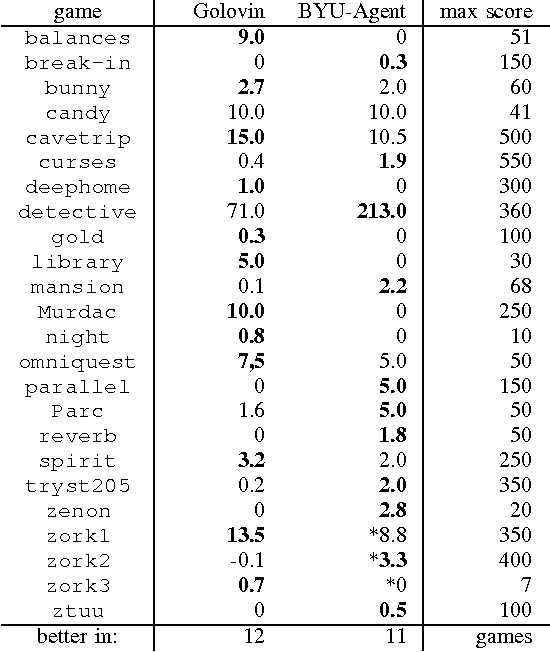

Abstract: The domain of text-based adventure games has recently been established as a new challenge: creating an agent that can both understand natural language and act intelligently in text-described environments. In this paper, we present our approach to this problem. Our agent, named Golovin, takes advantage of the limited game domain. We use genre-related corpora (including fantasy books and decompiled games) to create language models suited to this domain. Moreover, we embed mechanisms that allow us to specify, and separately handle, important tasks such as fighting opponents, managing inventory, and navigating the game map. We validate the usefulness of these mechanisms by measuring the agent's performance on a set of 50 interactive fiction games. Finally, we show that our agent plays at a level comparable to the winner of last year's Text-Based Adventure AI Competition.
