Bala Shyamala Balaji

Resource-Constrained Federated Learning with Heterogeneous Labels and Models

Nov 06, 2020

Abstract: Many IoT applications, such as pervasive healthcare, activity monitoring, speech recognition, and real-time computer vision, demand resource-constrained machine learning. This requires leveraging information from multiple devices with little communication overhead. Federated learning is a viable option for distributed and collaborative machine learning. On-device federated learning in particular is an active area of research, but handling statistical heterogeneity (non-IID data) and model heterogeneity remains challenging. In this paper we additionally explore a new challenge: handling label heterogeneity in federated learning. To this end, we propose a framework with simple $\alpha$-weighted federated aggregation of scores, which leverages overlapping information gain across labels while saving bandwidth costs. Empirical evaluation on the Animals-10 dataset (with 4 labels for effective elucidation of results) indicates an average deterministic accuracy increase of at least ~16.7%. We also demonstrate the on-device capabilities of the proposed framework by experimenting with federated learning and inference across different iterations on a Raspberry Pi 2, a single-board computing platform.
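
The abstract does not spell out the aggregation rule, so the following is only a minimal sketch of what an $\alpha$-weighted aggregation of per-label scores might look like when devices hold different label sets. The function name `aggregate_scores`, the dictionary layout, and the specific rule (blend the previous global score with the mean of the local scores from devices that share the label) are all assumptions made for illustration, not the paper's method.

```python
from typing import Dict, List


def aggregate_scores(
    global_scores: Dict[str, float],
    local_scores: List[Dict[str, float]],
    alpha: float,
) -> Dict[str, float]:
    """Blend global per-label scores with local ones (hypothetical rule).

    Assumption: for each global label, the new score is
    alpha * old_global + (1 - alpha) * mean(local scores for that label),
    using only devices whose label set overlaps with that label.
    """
    updated = dict(global_scores)
    for label, old_score in global_scores.items():
        # Only devices that actually know this label contribute.
        contributions = [s[label] for s in local_scores if label in s]
        if contributions:
            local_mean = sum(contributions) / len(contributions)
            updated[label] = alpha * old_score + (1 - alpha) * local_mean
        # Labels seen by no device keep their previous global score.
    return updated


# Two devices with heterogeneous (partially overlapping) label sets.
result = aggregate_scores(
    {"cat": 0.5, "dog": 0.5, "cow": 0.2},
    [{"cat": 1.0, "dog": 0.0}, {"dog": 1.0}],
    alpha=0.5,
)
```

Only per-label scores are exchanged in this sketch, not model weights, which is one way the bandwidth saving mentioned in the abstract could arise.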

Reinforcement Learning based dynamic weighing of Ensemble Models for Time Series Forecasting

Aug 20, 2020

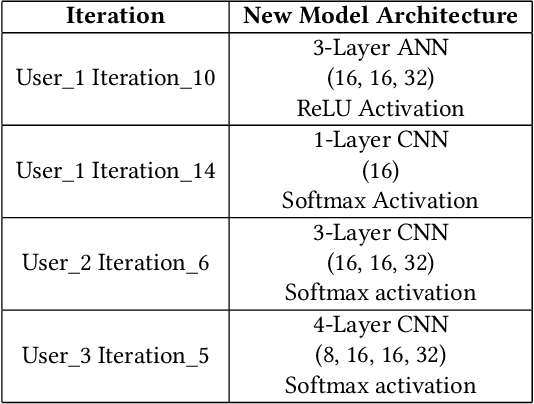

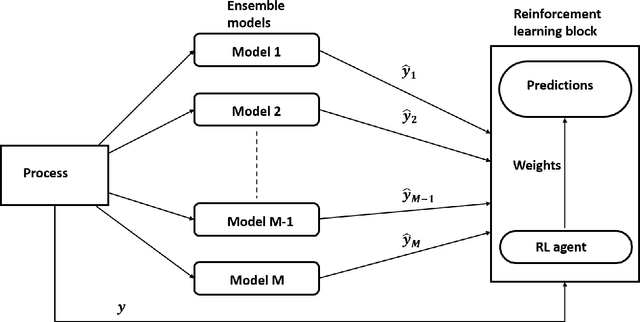

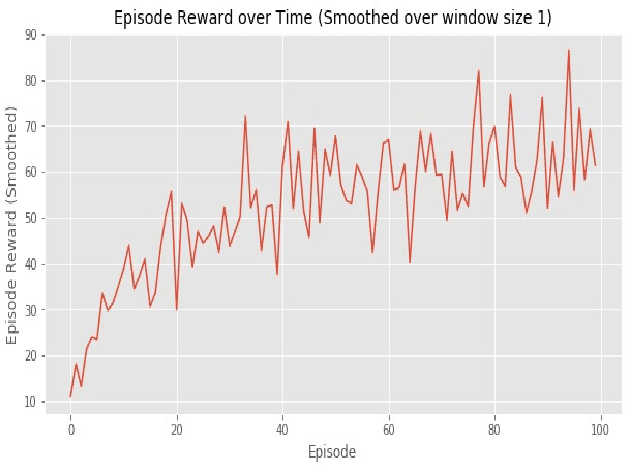

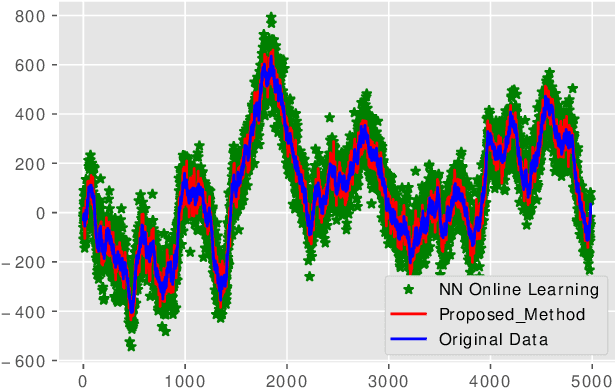

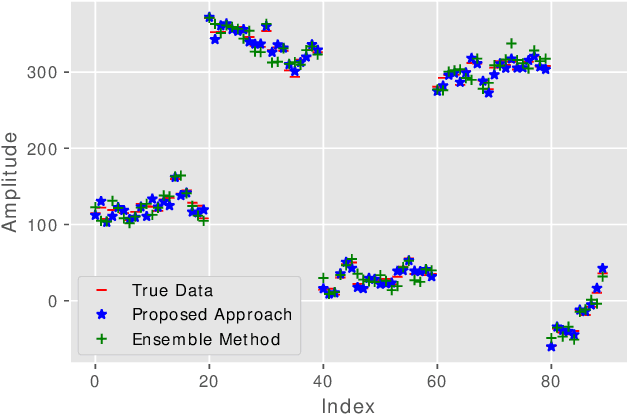

Abstract: Ensemble models are powerful model-building tools developed to improve the accuracy of model predictions. They find applications in time series forecasting in varied scenarios, including but not limited to process industries, health care, and economics, where a single model might not provide optimal performance. It is known that if the models selected for data modelling are distinct (linear/non-linear, static/dynamic) and independent (minimally correlated), the accuracy of the predictions improves. Various approaches suggested in the literature weight the ensemble models with a static set of weights, and therefore cannot effectively capture dynamic changes or local features of the data. To address this issue, this work proposes a Reinforcement Learning (RL) approach that dynamically assigns and updates the weight of each model at different time instants, depending on the nature of the data and the individual model predictions. The RL method, implemented online, learns to update the weights and reduce the errors as time progresses. Simulation studies on time series data showed that the dynamic weighted approach using RL learns the weights better than existing approaches. The accuracy of the proposed method is compared quantitatively with an existing approach of online Neural Network tuning through normalized mean square error (NMSE) values.
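
To make the contrast between static and time-varying weights concrete, here is a small sketch that combines model predictions with one weight vector per time step and scores the result with NMSE. It does not implement the RL weight update, which the abstract does not describe. The helper names `combine` and `nmse` are hypothetical, and the NMSE normalization used here (squared error divided by the squared deviation of the targets from their mean) is one common definition that is assumed rather than taken from the paper.

```python
from typing import List, Sequence


def combine(
    predictions: Sequence[Sequence[float]],
    weights: Sequence[Sequence[float]],
) -> List[float]:
    """Weighted ensemble forecast; predictions[t][m] and weights[t][m]
    refer to model m at time step t."""
    return [
        sum(w * p for w, p in zip(w_t, p_t))
        for w_t, p_t in zip(weights, predictions)
    ]


def nmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Assumed definition: sum of squared errors over the sum of squared
    deviations of y_true from its mean (undefined for constant y_true)."""
    mean_y = sum(y_true) / len(y_true)
    num = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    den = sum((t - mean_y) ** 2 for t in y_true)
    return num / den


y_true = [1.0, 2.0, 3.0]
# Two models: the first is exact, the second is off at every step.
preds = [[1.0, 3.0], [2.0, 0.0], [3.0, 5.0]]

static_weights = [[0.5, 0.5]] * 3   # same weights at every time step
dynamic_weights = [[1.0, 0.0]] * 3  # stand-in for weights a learner might reach

static_nmse = nmse(y_true, combine(preds, static_weights))
dynamic_nmse = nmse(y_true, combine(preds, dynamic_weights))
```

In this toy setup the per-step weights that favor the accurate model give a lower NMSE than the equal static weights, which is the kind of comparison the abstract reports.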
