
Augusto Peres

Equivariant neural networks for recovery of Hadamard matrices

Jan 31, 2022

Figures and Tables:

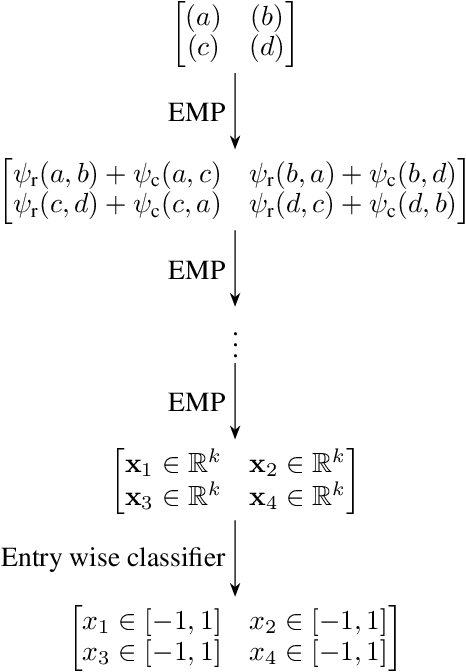

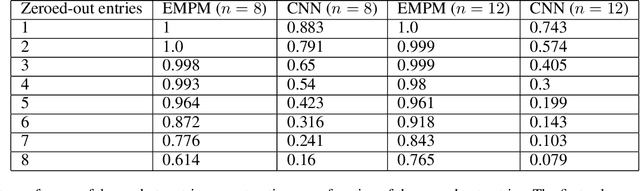

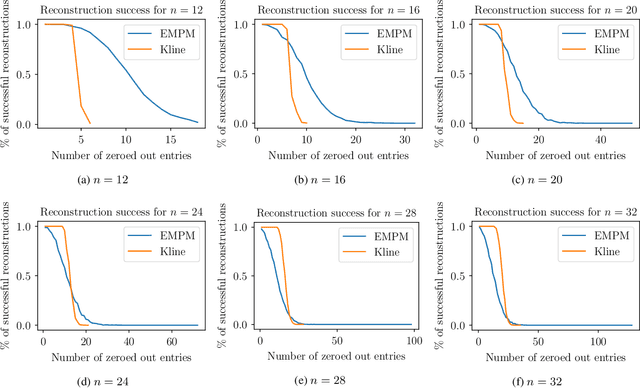

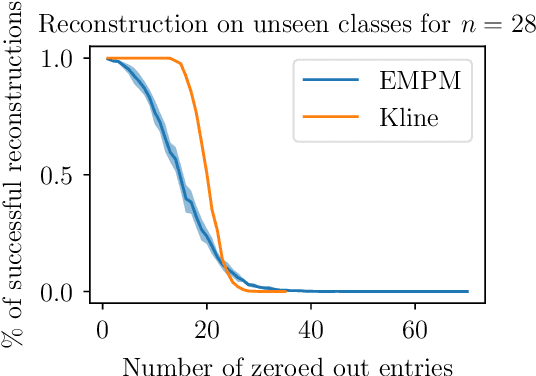

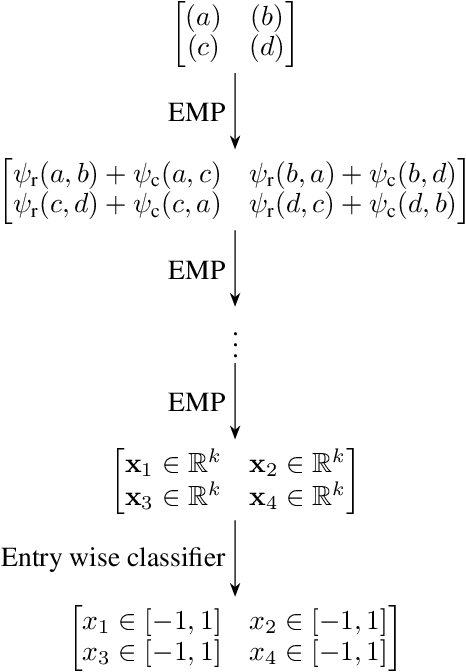

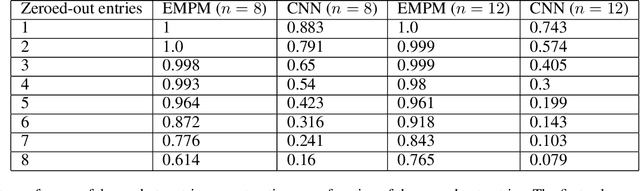

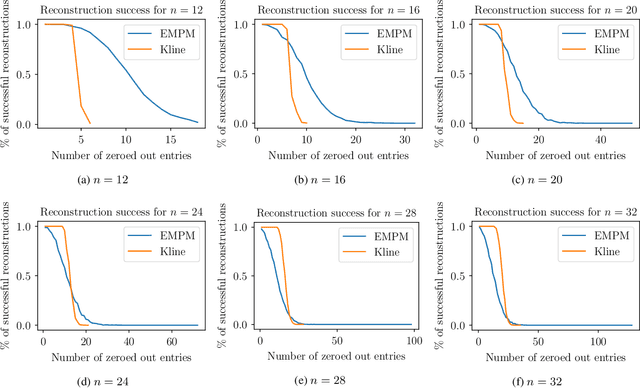

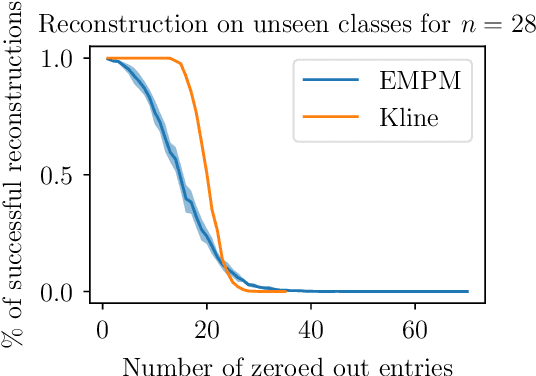

Abstract: We propose a message-passing neural network architecture designed to be equivariant to column and row permutations of a matrix. We illustrate its advantages over traditional architectures like multi-layer perceptrons (MLPs), convolutional neural networks (CNNs) and even Transformers, on the combinatorial optimization task of recovering a set of deleted entries of a Hadamard matrix. We argue that this is a powerful application of the principles of Geometric Deep Learning to fundamental mathematics, and a potential stepping stone toward more insights on the Hadamard conjecture using Machine Learning techniques.
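To make the two central notions of the abstract concrete, the following is a minimal NumPy sketch, not the paper's architecture. It builds a Hadamard matrix with Sylvester's construction, checks the defining property H Hᵀ = nI, and shows a simple pooling-based layer that is equivariant to row and column permutations. The function names (`sylvester_hadamard`, `is_hadamard`, `equivariant_layer`) and the four scalar weights are hypothetical and chosen only for illustration; the paper's message-passing layers may differ substantially.

```python
import numpy as np


def sylvester_hadamard(k):
    """Return the 2**k x 2**k Hadamard matrix from Sylvester's construction."""
    H = np.array([[1]])
    for _ in range(k):
        H = np.block([[H, H], [H, -H]])
    return H


def is_hadamard(H):
    """Check that H is square, has entries in {-1, +1}, and satisfies H @ H.T == n * I."""
    if H.ndim != 2 or H.shape[0] != H.shape[1]:
        return False
    n = H.shape[0]
    entries_ok = bool(np.all(np.abs(H) == 1))
    orthogonal = bool(np.array_equal(H @ H.T, n * np.eye(n, dtype=H.dtype)))
    return entries_ok and orthogonal


def equivariant_layer(X, w):
    """Illustrative layer that commutes with row and column permutations.

    Each output entry mixes the entry itself with the mean of its row,
    the mean of its column, and the global mean. `w` holds four
    hypothetical scalar weights (self, row, column, global).
    """
    row_mean = X.mean(axis=1, keepdims=True)  # shape (n, 1)
    col_mean = X.mean(axis=0, keepdims=True)  # shape (1, m)
    all_mean = X.mean()                       # scalar
    return w[0] * X + w[1] * row_mean + w[2] * col_mean + w[3] * all_mean


# Usage: permuting rows and columns preserves the Hadamard property,
# and the layer output is permuted in the same way as its input.
rng = np.random.default_rng(0)
H = sylvester_hadamard(3)
p, q = rng.permutation(8), rng.permutation(8)
H_perm = H[p][:, q]
w = (0.5, -1.0, 2.0, 0.25)
same = np.allclose(equivariant_layer(H_perm, w), equivariant_layer(H, w)[p][:, q])
print(is_hadamard(H), is_hadamard(H_perm), same)
```

Because row and column permutations map Hadamard matrices to Hadamard matrices, a model for the entry-recovery task has no reason to treat any particular ordering as special. That is the motivation for building the symmetry into the layer rather than learning it from data.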
