Athanasios Polydoros

Zero-Shot Sim-to-Real Reinforcement Learning for Fruit Harvesting

May 13, 2025

Abstract:This paper presents a comprehensive sim-to-real pipeline for autonomous strawberry picking from dense clusters using a Franka Panda robot. Our approach leverages a custom MuJoCo simulation environment that integrates domain randomization techniques. In this environment, a deep reinforcement learning agent is trained using the Dormant Ratio Minimization (DRM) algorithm. The proposed pipeline bridges low-level control with high-level perception and decision making, demonstrating promising performance both in simulation and in a real laboratory environment, and laying the groundwork for successful transfer to real-world autonomous fruit harvesting.

Robotic Learning in your Backyard: A Neural Simulator from Open Source Components

Oct 25, 2024

Abstract:The emergence of 3D Gaussian Splatting for fast and high-quality novel view synthesis has opened up the possibility of constructing photorealistic simulations from video for robotic reinforcement learning. While the approach has been demonstrated in several research papers, the software tools used to build such a simulator remain unavailable or proprietary. We present SplatGym, an open source neural simulator for training data-driven robotic control policies. The simulator creates a photorealistic virtual environment from a single video. It supports ego camera view generation, collision detection, and virtual object in-painting. We demonstrate training several visual navigation policies via reinforcement learning. SplatGym represents a notable first step towards an open-source general-purpose neural environment for robotic learning. It broadens the range of applications that can effectively utilise reinforcement learning by providing convenient and unrestricted tooling, and by eliminating the need for the manual development of conventional 3D environments.

Pretrained Visual Representations in Reinforcement Learning

Jul 24, 2024

Abstract:Visual reinforcement learning (RL) has made significant progress in recent years, but the choice of visual feature extractor remains a crucial design decision. This paper compares the performance of RL algorithms that train a convolutional neural network (CNN) from scratch with those that utilize pre-trained visual representations (PVRs). We evaluate the Dormant Ratio Minimization (DRM) algorithm, a state-of-the-art visual RL method, against three PVRs: ResNet18, DINOv2, and Visual Cortex (VC). We use the Metaworld Push-v2 and Drawer-Open-v2 tasks for our comparison. Our results show that whether training from scratch or using PVRs maximises performance is task-dependent, but PVRs offer advantages in terms of reduced replay buffer size and faster training times. We also identify a strong correlation between the dormant ratio and model performance, highlighting the importance of exploration in visual RL. Our study provides insights into the trade-offs between training from scratch and using PVRs, informing the design of future visual RL algorithms.
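The dormant ratio mentioned in this abstract is commonly defined as the fraction of neurons whose expected absolute activation, normalised by the mean over their layer, falls at or below a small threshold tau. A minimal pure-Python sketch under that definition follows; the function name `dormant_ratio`, the nested-list activation layout, and the default `tau = 0.025` are illustrative assumptions, not the code used in the paper.

```python
from typing import Sequence

# Assumed layout: activations[b][i] is the output of neuron i for input b in a batch.
Batch = Sequence[Sequence[float]]


def dormant_ratio(layers: Sequence[Batch], tau: float = 0.025) -> float:
    """Fraction of tau-dormant neurons across all given layers (illustrative sketch)."""
    dormant = 0
    total = 0
    for acts in layers:
        n_neurons = len(acts[0])
        # Expected absolute activation of each neuron, estimated over the batch.
        mean_abs = [sum(abs(row[i]) for row in acts) / len(acts) for i in range(n_neurons)]
        layer_avg = sum(mean_abs) / n_neurons
        total += n_neurons
        if layer_avg == 0.0:
            # Every neuron in the layer is silent, so all of them count as dormant.
            dormant += n_neurons
            continue
        # Normalised score: a neuron is dormant if its score is at or below tau.
        dormant += sum(1 for m in mean_abs if m / layer_avg <= tau)
    return dormant / total
```

For example, `dormant_ratio([[[0.0, 1.0, 2.0], [0.0, 3.0, 2.0]], [[1.0, 1.0]]])` returns `0.2`: the first neuron of the first layer never activates, so one of five neurons is dormant. Normalising by the layer mean makes the score independent of the overall activation scale of each layer.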

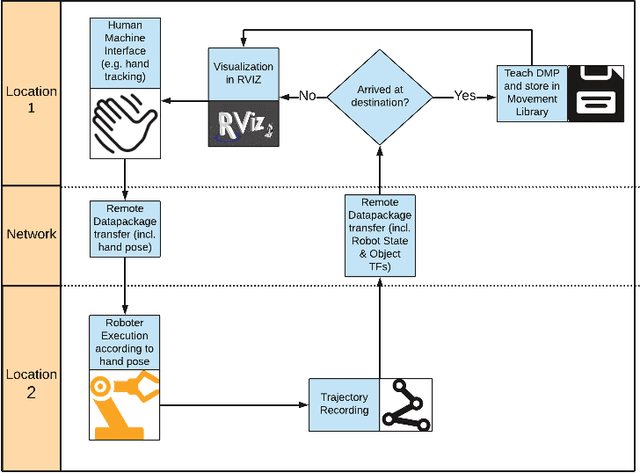

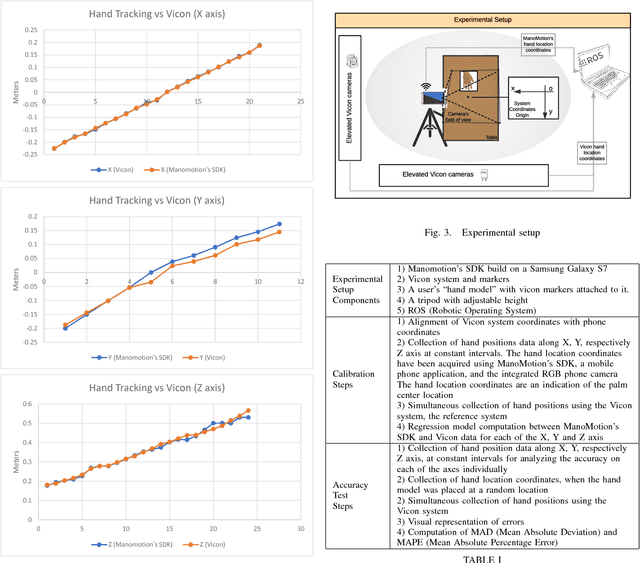

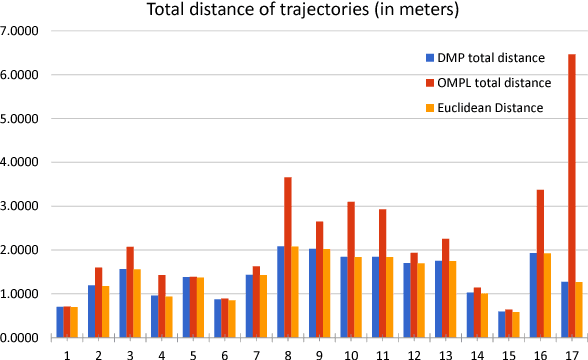

Human-Machine Interface for Remote Training of Robot Tasks

Sep 25, 2018

Abstract:Regardless of their industrial or research application, the streamlining of robot operations is limited by the proximity of experienced users to the actual hardware. Be it massive open online robotics courses, crowd-sourcing of robot task training, or remote research on massive robot farms for machine learning, there is a widespread need for an appropriate remote Human-Machine Interface. The paper at hand proposes a novel solution to the programming/training of remote robots, employing an intuitive and accurate user interface which offers all the benefits of working with real robots without imposing delays and inefficiency. The system includes: a vision-based 3D hand detection and gesture recognition subsystem, a simulated digital twin of a robot as visual feedback, and the "remote" robot learning/executing trajectories using dynamic motion primitives. Our results indicate that the system is a promising solution to the problem of remote training of robot tasks.
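Motion primitives of the kind named in this abstract are usually implemented as a discrete dynamic movement primitive (DMP): a critically damped spring that pulls the state toward a goal, plus a learned forcing term gated by an exponentially decaying phase variable, so the motion always settles at the goal. The sketch below integrates a one-dimensional DMP with explicit Euler steps. The function name `dmp_rollout`, the gains, the basis-function placement and the width heuristic are assumptions for illustration and are not taken from the paper.

```python
import math
from typing import List, Sequence


def dmp_rollout(
    y0: float,
    goal: float,
    weights: Sequence[float],
    tau: float = 1.0,
    dt: float = 0.001,
    duration: float = 1.5,
    alpha_z: float = 25.0,
    alpha_x: float = 4.0,
) -> List[float]:
    """Integrate a 1-D discrete DMP and return the position at every step.

    Hypothetical sketch: gains and basis layout are assumptions, not the paper's values.
    """
    beta_z = alpha_z / 4.0  # beta_z = alpha_z / 4 makes the spring-damper critically damped
    n = len(weights)
    # Basis centres spaced evenly in time, mapped into phase x = exp(-alpha_x * t / tau).
    centers = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    widths = [float(n * n)] * n  # one shared width: a simple heuristic, not a tuned choice

    y, z, x = y0, 0.0, 1.0  # position, scaled velocity, phase
    traj = [y]
    for _ in range(int(round(duration / dt))):
        psi = [math.exp(-h * (x - c) ** 2) for h, c in zip(widths, centers)]
        denom = sum(psi)
        # Forcing term: normalised weighted basis functions, scaled by phase and amplitude.
        f = 0.0 if denom == 0.0 else sum(p * w for p, w in zip(psi, weights)) / denom * x * (goal - y0)
        # Transformation system: tau*z' = alpha_z*(beta_z*(g - y) - z) + f, tau*y' = z
        dz = (alpha_z * (beta_z * (goal - y) - z) + f) / tau
        dy = z / tau
        # Canonical system: tau*x' = -alpha_x*x, so the forcing term fades over time.
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        traj.append(y)
    return traj
```

With all weights at zero, `dmp_rollout(0.0, 1.0, [0.0] * 5)` is a plain critically damped approach to the goal. Non-zero weights reshape the path, but because the forcing term scales with the phase `x`, the end point still settles near `goal`. In a learning-from-demonstration setting the weights would be fitted to a recorded trajectory, a step this sketch omits.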
