Atefeh Hajijamali Arani

Mobile Cellular-Connected UAVs: Reinforcement Learning for Sky Limits

Sep 21, 2020

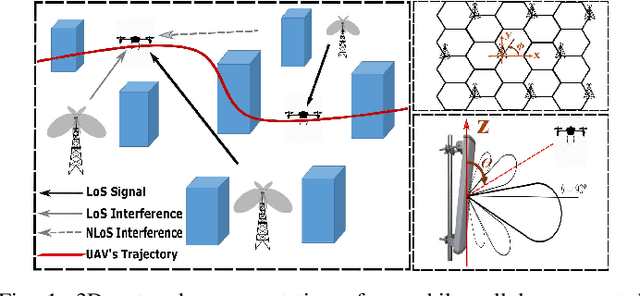

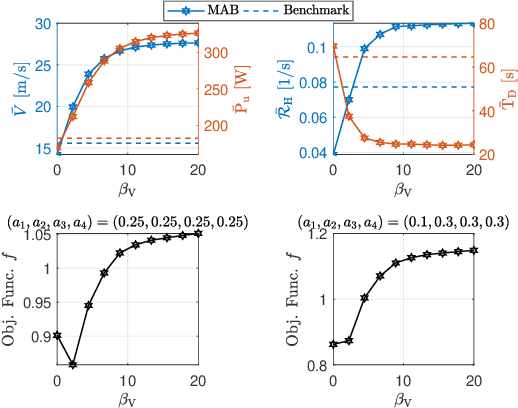

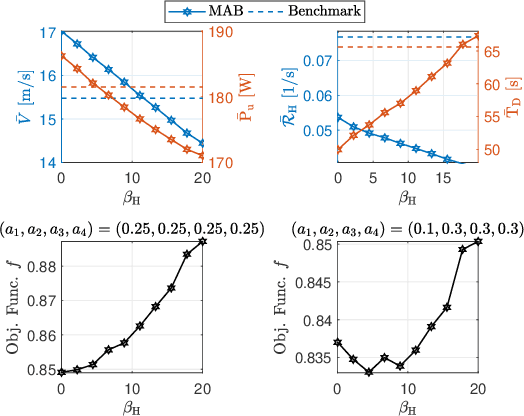

Abstract: A cellular-connected unmanned aerial vehicle (UAV) faces several key challenges concerning connectivity and energy efficiency. Using a learning-based strategy, we propose a general, novel multi-armed bandit (MAB) algorithm that reduces the UAV's disconnectivity time, handover (HO) rate, and energy consumption while taking its task completion time into account. By formulating the problem as a function of the UAV's velocity, we show how each of these performance indicators (PIs) improves when the corresponding learning parameter is set within a proper range, e.g., a 50% reduction in HO rate compared to a blind strategy. However, the results reveal that the optimal combination of learning parameters depends critically on the specific application and on the weights of the PIs in the final objective function.
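The abstract does not say which bandit rule the paper uses, so the following is only a minimal sketch of the general idea: a standard UCB1 bandit whose arms are candidate UAV speeds and whose reward is one minus a weighted sum of PI costs. The speed values, the weights, and the three toy cost functions are all hypothetical placeholders, not the paper's model.

```python
import math
import random

# Hypothetical candidate speeds (m/s) and PI weights; not taken from the paper.
VELOCITIES = [5.0, 10.0, 15.0, 20.0]
WEIGHTS = {"disconnect": 0.4, "handover": 0.3, "energy": 0.3}


def toy_reward(arm, rng):
    """Made-up reward in [0, 1]: one minus a weighted sum of three toy costs."""
    v = VELOCITIES[arm]
    disconnect = 5.0 / v               # toy: slower flight means longer exposure
    handover = v / 20.0                # toy: faster flight means more handovers
    energy = ((v - 10.0) / 10.0) ** 2  # toy: U-shaped energy cost
    cost = (WEIGHTS["disconnect"] * disconnect
            + WEIGHTS["handover"] * handover
            + WEIGHTS["energy"] * energy)
    noisy = 1.0 - cost + rng.gauss(0.0, 0.05)
    return min(1.0, max(0.0, noisy))   # clip so rewards stay in [0, 1]


def ucb1_select(counts, values, t):
    """Play every arm once, then pick the arm with the largest UCB1 index."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm
    return max(
        range(len(counts)),
        key=lambda a: values[a] + math.sqrt(2.0 * math.log(t) / counts[a]),
    )


def run_bandit(reward_fn, n_arms, n_rounds, seed=0):
    """Run UCB1 and return per-arm pull counts and running mean rewards."""
    rng = random.Random(seed)
    counts = [0] * n_arms
    values = [0.0] * n_arms
    for t in range(1, n_rounds + 1):
        arm = ucb1_select(counts, values, t)
        reward = reward_fn(arm, rng)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return counts, values


if __name__ == "__main__":
    counts, values = run_bandit(toy_reward, len(VELOCITIES), n_rounds=2000)
    best = max(range(len(counts)), key=counts.__getitem__)
    print("pull counts:", counts)
    print("most-played speed:", VELOCITIES[best], "m/s")
```

Changing the entries of `WEIGHTS` shifts which speed the bandit settles on, which mirrors the abstract's remark that the best configuration depends on how the PIs are weighted in the objective.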

Learning in the Sky: An Efficient 3D Placement of UAVs

Mar 02, 2020

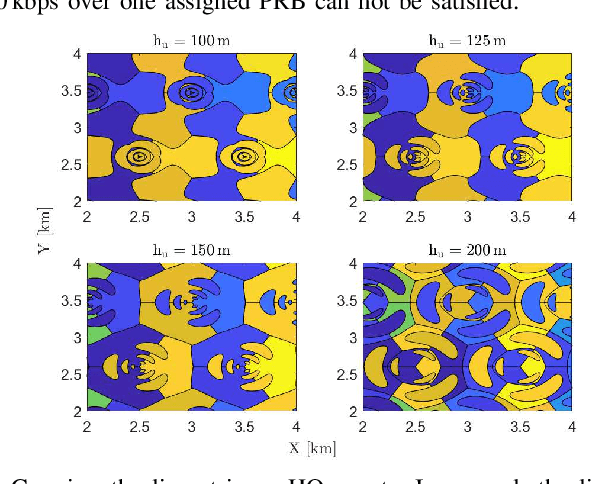

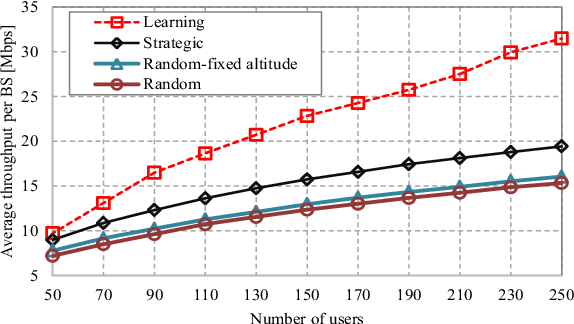

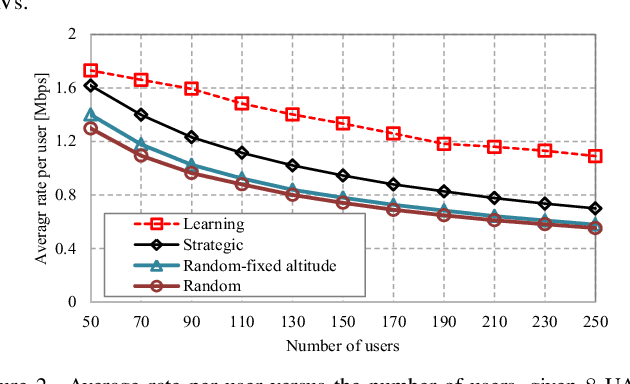

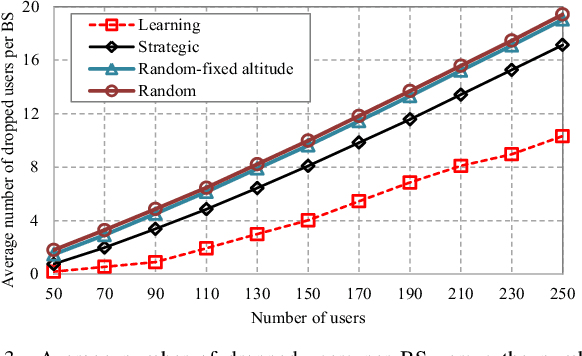

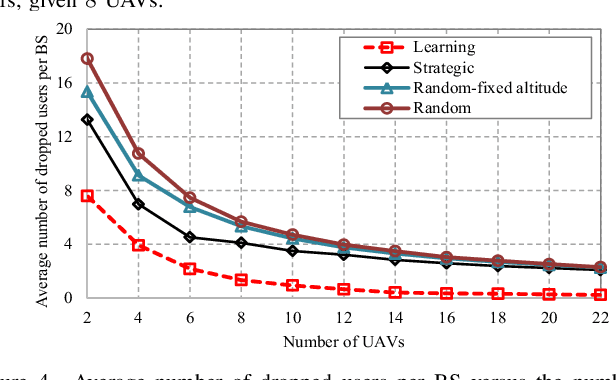

Abstract: Deployment of unmanned aerial vehicles (UAVs) as aerial base stations can deliver a fast and flexible solution for serving varying traffic demand. To benefit fully from UAV deployment, efficient placement is of utmost importance and requires intelligent adaptation to environmental changes. In this paper, we propose a learning-based mechanism for the three-dimensional deployment of UAVs assisting terrestrial cellular networks in the downlink. The problem is modeled as a non-cooperative game among UAVs in satisfaction form. To solve the game, we use a low-complexity algorithm in which unsatisfied UAVs update their locations based on a learning algorithm. Simulation results reveal that the proposed UAV placement algorithm yields significant performance gains of up to about 52% in throughput and 74% in the number of dropped users, compared to an optimized baseline algorithm.
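As a rough illustration of the "satisfaction form" idea, the sketch below lets each UAV keep its position while it is satisfied and re-draw a position only when it is not. It is not the paper's algorithm: the 3x3x3 candidate grid, the separation-based satisfaction test, and the uniform random re-draw (standing in for the learning rule the abstract mentions) are all assumptions made for this example.

```python
import itertools
import math
import random

# Hypothetical 3x3x3 grid of candidate (x, y, altitude) positions, arbitrary units.
GRID = list(itertools.product(range(3), repeat=3))
MIN_SEPARATION = 1.0  # assumed threshold: all other UAVs must be farther than this


def is_satisfied(i, profile):
    """Toy test: UAV i is strictly farther than MIN_SEPARATION from every other UAV."""
    return all(
        math.dist(profile[i], profile[j]) > MIN_SEPARATION
        for j in range(len(profile)) if j != i
    )


def satisfaction_dynamics(n_uavs, n_iters=500, seed=0):
    """Unsatisfied UAVs re-draw a position; satisfied UAVs stay where they are."""
    rng = random.Random(seed)
    profile = [rng.choice(GRID) for _ in range(n_uavs)]
    for _ in range(n_iters):
        unsatisfied = [i for i in range(n_uavs) if not is_satisfied(i, profile)]
        if not unsatisfied:
            break  # every UAV meets its threshold: a satisfaction equilibrium
        for i in unsatisfied:
            # Uniform re-draw is a placeholder for a learned selection rule.
            profile[i] = rng.choice(GRID)
    return profile


if __name__ == "__main__":
    final = satisfaction_dynamics(n_uavs=3)
    print("final positions:", final)
    print("all satisfied:", all(is_satisfied(i, final) for i in range(len(final))))
```

The point of the design is that agents stop exploring once their own threshold is met, rather than continuing to maximize a utility, which is what keeps this style of update cheap.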
