Asiegbu Miracle Kanu-Asiegbu

Strip-Fusion: Spatiotemporal Fusion for Multispectral Pedestrian Detection

Jan 25, 2026

Abstract: Pedestrian detection is a critical task in robot perception. Multispectral modalities (visible light and thermal) can boost pedestrian detection performance by providing complementary visual information. Several gaps remain with multispectral pedestrian detection methods. First, existing approaches primarily focus on spatial fusion and often neglect temporal information. Second, RGB and thermal image pairs in multispectral benchmarks may not always be perfectly aligned. Pedestrians are also challenging to detect due to varying lighting conditions, occlusion, etc. This work proposes Strip-Fusion, a spatial-temporal fusion network that is robust to misalignment in input images, as well as varying lighting conditions and heavy occlusions. The Strip-Fusion pipeline integrates temporally adaptive convolutions to dynamically weigh spatial-temporal features, enabling our model to better capture pedestrian motion and context over time. A novel Kullback-Leibler divergence loss was designed to mitigate modality imbalance between visible and thermal inputs, guiding feature alignment toward the more informative modality during training. Furthermore, a novel post-processing algorithm was developed to reduce false positives. Extensive experimental results show that our method performs competitively for both the KAIST and the CVC-14 benchmarks. We also observed significant improvements compared to previous state-of-the-art on challenging conditions such as heavy occlusion and misalignment.
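The abstract does not spell out the form of the Kullback-Leibler loss, so the following is only a generic sketch of the idea of pulling one modality's predictions toward the other's. The function names, the use of per-modality task losses to decide which modality is "more informative", and the choice of class-score distributions are all assumptions made for illustration, not the Strip-Fusion implementation.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def modality_alignment_loss(rgb_logits, thermal_logits,
                            rgb_task_loss, thermal_task_loss):
    """Hypothetical alignment term between RGB and thermal predictions.

    Assumption: the modality with the lower task loss is treated as the
    more informative one and serves as the target distribution p, so the
    weaker modality q is the one pulled toward it.
    """
    p_rgb = softmax(rgb_logits)
    p_thermal = softmax(thermal_logits)
    if thermal_task_loss <= rgb_task_loss:
        return kl_divergence(p_thermal, p_rgb)
    return kl_divergence(p_rgb, p_thermal)
```

In this sketch the term is zero when both modalities produce the same distribution and grows as they disagree, which is the property an alignment loss of this kind would rely on.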

MambaST: A Plug-and-Play Cross-Spectral Spatial-Temporal Fuser for Efficient Pedestrian Detection

Aug 02, 2024

Abstract: This paper proposes MambaST, a plug-and-play cross-spectral spatial-temporal fusion pipeline for efficient pedestrian detection. Several challenges exist for pedestrian detection in autonomous driving applications. First, it is difficult to perform accurate detection using RGB cameras under dark or low-light conditions. Cross-spectral systems must be developed to integrate complementary information from multiple sensor modalities, such as thermal and visible cameras, to improve the robustness of the detections. Second, pedestrian detection models are latency-sensitive. Efficient and easy-to-scale detection models with fewer parameters are highly desirable for real-time applications such as autonomous driving. Third, pedestrian video data provides spatial-temporal correlations of pedestrian movement. It is beneficial to incorporate temporal as well as spatial information to enhance pedestrian detection. This work leverages recent advances in the state space model (Mamba) and proposes a novel Multi-head Hierarchical Patching and Aggregation (MHHPA) structure to extract both fine-grained and coarse-grained information from both RGB and thermal imagery. Experimental results show that the proposed MHHPA is an effective and efficient alternative to a Transformer model for cross-spectral pedestrian detection. Our proposed model also achieves superior performance on small-scale pedestrian detection. The code is available at https://github.com/XiangboGaoBarry/MambaST.

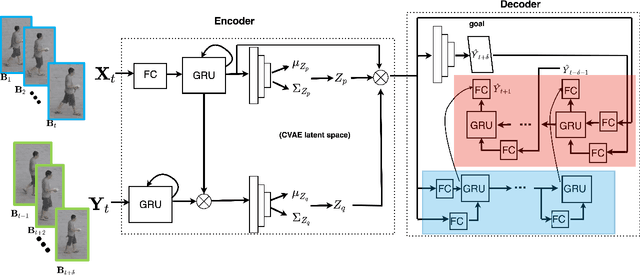

BiPOCO: Bi-Directional Trajectory Prediction with Pose Constraints for Pedestrian Anomaly Detection

Jul 05, 2022

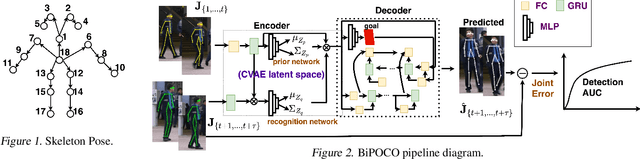

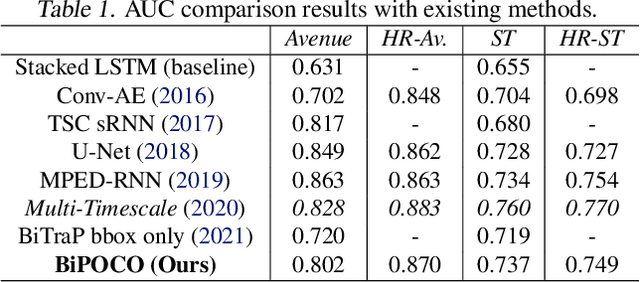

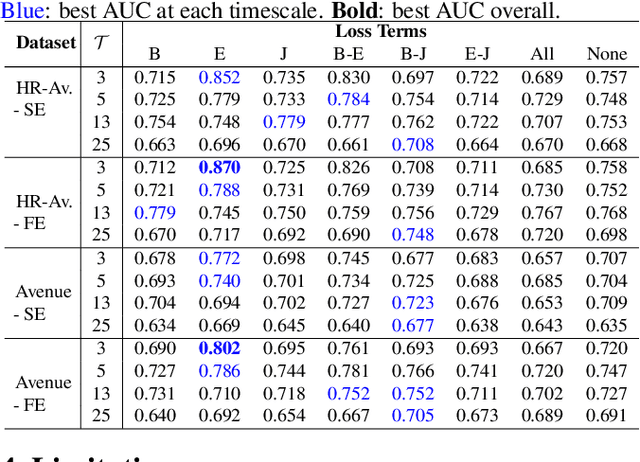

Abstract: We present BiPOCO, a Bi-directional trajectory predictor with POse COnstraints, for detecting anomalous activities of pedestrians in videos. In contrast to prior work based on feature reconstruction, our work identifies pedestrian anomalous events by forecasting their future trajectories and comparing the predictions with their expectations. We introduce a set of novel compositional pose-based losses with our predictor and leverage prediction errors of each body joint for pedestrian anomaly detection. Experimental results show that our BiPOCO approach can detect pedestrian anomalous activities with a high detection rate (up to 87.0%), and that incorporating pose constraints helps distinguish normal and anomalous poses in prediction. This work extends the current literature on prediction-based methods for anomaly detection and can benefit safety-critical applications such as autonomous driving and surveillance. Code is available at https://github.com/akanuasiegbu/BiPOCO.

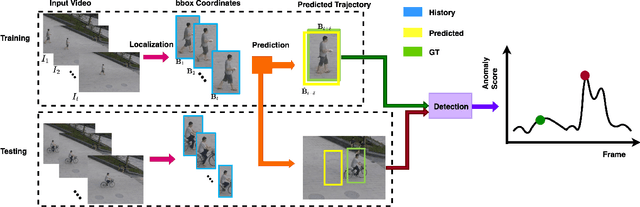

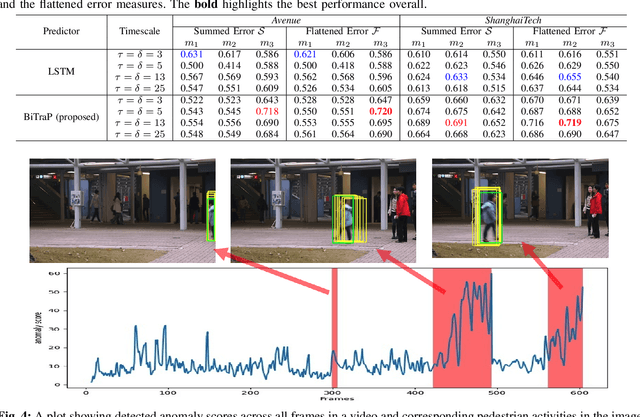

Leveraging Trajectory Prediction for Pedestrian Video Anomaly Detection

Jul 05, 2022

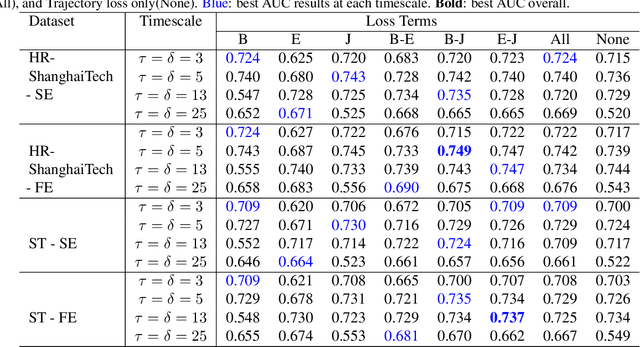

Abstract: Video anomaly detection is a core problem in vision. Correctly detecting and identifying anomalous behaviors in pedestrians from video data will enable safety-critical applications such as surveillance, activity monitoring, and human-robot interaction. In this paper, we propose to leverage trajectory localization and prediction for unsupervised pedestrian anomaly event detection. Unlike previous reconstruction-based approaches, our proposed framework relies on the prediction errors of normal and abnormal pedestrian trajectories to detect anomalies spatially and temporally. We present experimental results on real-world benchmark datasets on varying timescales and show that our proposed trajectory-predictor-based anomaly detection pipeline is effective and efficient at identifying anomalous activities of pedestrians in videos. Code will be made available at https://github.com/akanuasiegbu/Leveraging-Trajectory-Prediction-for-Pedestrian-Video-Anomaly-Detection.
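The prediction-error idea described above can be illustrated with a minimal sketch: compare a predicted trajectory against the observed one and flag frames where the error is large. The function names, the use of 2D center points, the Euclidean error, and the fixed threshold are all hypothetical choices for illustration; the abstract does not specify how the paper scores or thresholds errors.

```python
import math


def frame_errors(predicted, observed):
    """Per-frame Euclidean distance between predicted and observed (x, y) points."""
    if len(predicted) != len(observed):
        raise ValueError("trajectories must have the same number of frames")
    return [math.hypot(p[0] - o[0], p[1] - o[1])
            for p, o in zip(predicted, observed)]


def flag_anomalies(predicted, observed, threshold):
    """Mark a frame as anomalous when its prediction error exceeds the threshold.

    Assumption: a predictor trained only on normal motion forecasts normal
    trajectories well, so a large error suggests abnormal behavior.
    """
    return [err > threshold for err in frame_errors(predicted, observed)]
```

For example, with a predicted path `[(0, 0), (1, 0), (2, 0)]`, an observed path `[(0, 0), (1, 0), (2, 5)]`, and a threshold of `2.0`, only the last frame would be flagged, since the pedestrian deviates from the forecast there.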
