Arnaud Deleruyelle

Self-supervised U-net for few-shot learning of object segmentation in microscopy images

May 22, 2022

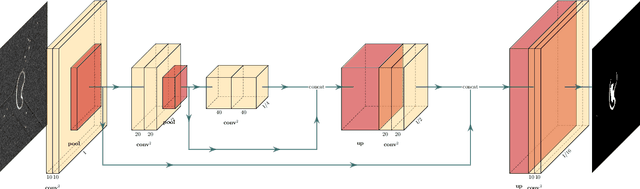

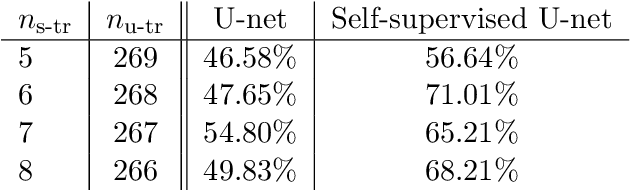

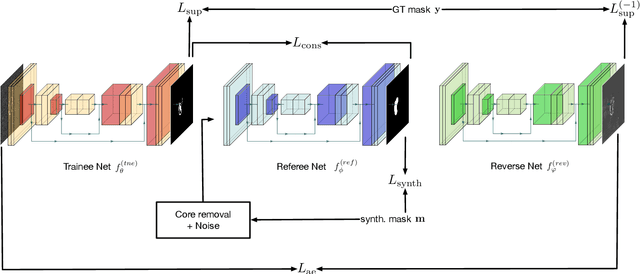

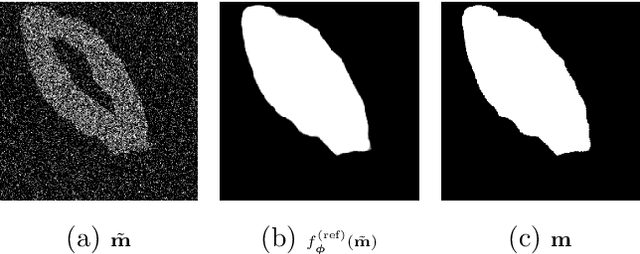

Abstract: Deep neural networks achieve state-of-the-art segmentation performance. Training these networks from only a few examples is challenging, while producing the annotated images that provide supervision is tedious. Recently, self-supervision, i.e. designing a neural pipeline that provides synthetic or indirect supervision, has been shown to significantly improve the generalization of models trained on few shots. This paper introduces one such pipeline for microscopy image segmentation. By leveraging the rather simple content of these images, a trainee network can be mentored by a referee network that has previously been trained on synthetically generated pairs of corrupted/correct region masks.
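To make the idea of "synthetically generated pairs of corrupted/correct region masks" concrete, here is a minimal sketch of how such a pair might be produced. The abstract does not describe the corruption model, so everything here is an assumption made for illustration: the disk-shaped object, the zeroed square block, the random pixel flips, and the names `disk_mask`, `corrupt_mask` and `make_pair` are all hypothetical.

```python
import random

def disk_mask(size, center, radius):
    """Binary mask (list of rows) with a filled disk standing in for an object."""
    cy, cx = center
    return [[1 if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2 else 0
             for x in range(size)] for y in range(size)]

def corrupt_mask(mask, rng, hole=4, flip_prob=0.02):
    """Return a damaged copy: one square block zeroed out plus random pixel flips.

    Assumed corruption model, not taken from the paper.
    """
    size = len(mask)
    out = [row[:] for row in mask]
    # Zero out a randomly placed hole x hole block.
    top = rng.randrange(size - hole + 1)
    left = rng.randrange(size - hole + 1)
    for y in range(top, top + hole):
        for x in range(left, left + hole):
            out[y][x] = 0
    # Flip each pixel independently with probability flip_prob.
    for y in range(size):
        for x in range(size):
            if rng.random() < flip_prob:
                out[y][x] = 1 - out[y][x]
    return out

def make_pair(size=32, seed=0):
    """Build one (corrupted, correct) mask pair from a seeded generator."""
    rng = random.Random(seed)
    radius = rng.randint(4, size // 4)
    center = (rng.randint(radius, size - radius - 1),
              rng.randint(radius, size - radius - 1))
    clean = disk_mask(size, center, radius)
    return corrupt_mask(clean, rng), clean

corrupted, clean = make_pair()
```

In a setup like the one the abstract outlines, pairs of this kind would be the training data for the referee network; how the referee then supervises the trainee is not specified in the abstract and is not sketched here.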

SODA: Self-organizing data augmentation in deep neural networks -- Application to biomedical image segmentation tasks

Feb 07, 2022

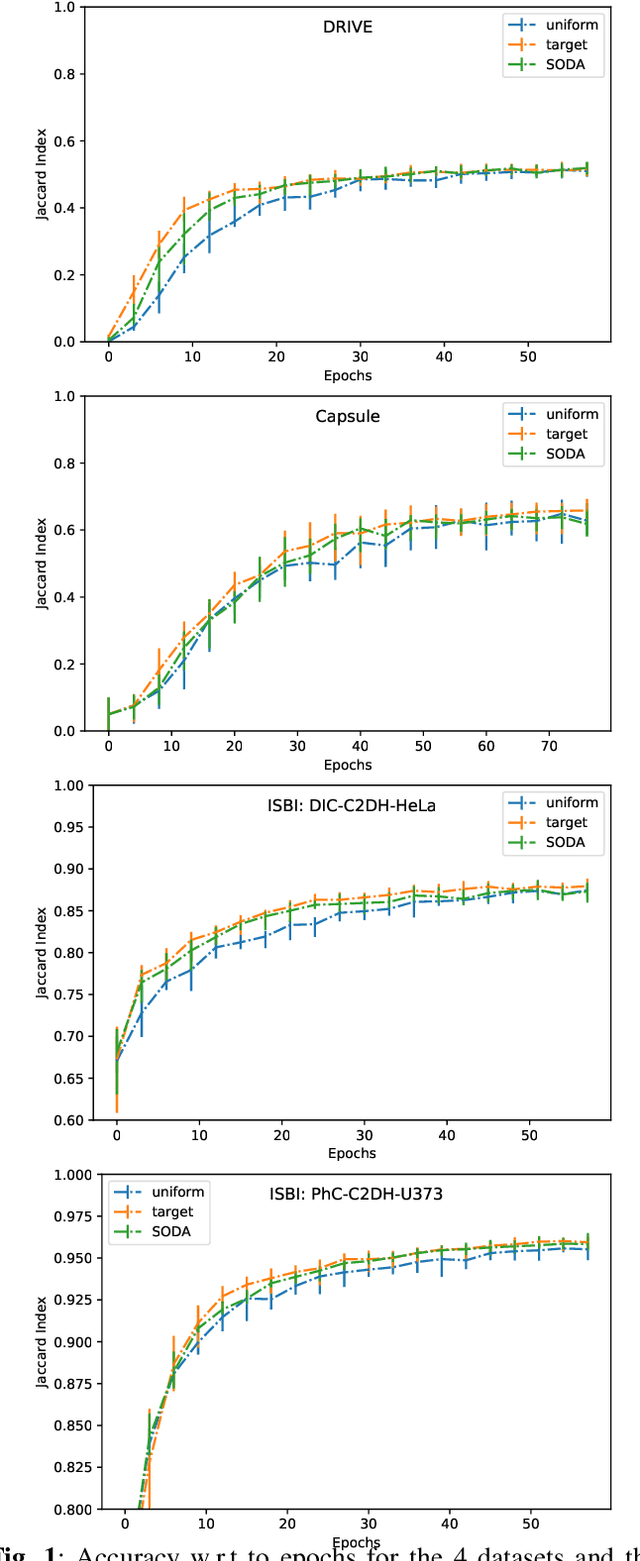

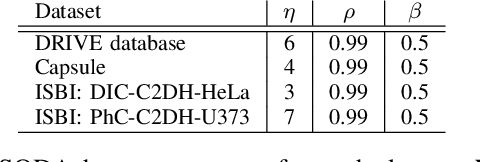

Abstract: In practice, data augmentation is assigned a predefined budget in terms of newly created samples per epoch. When several types of data augmentation are used, this budget is usually distributed uniformly over them, but one may wonder whether it could be allocated to each type more efficiently. This paper leverages online learning to allocate the budget on the fly as part of neural network training. The resulting meta-algorithm runs at almost no extra cost because it exploits gradient-based signals to determine which type of data augmentation should be preferred. Experiments suggest that this strategy can save computation time and thus contributes to greener machine learning practices.
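As a rough illustration of allocating an augmentation budget online, the sketch below keeps one weight per augmentation type, splits the per-epoch budget in proportion to those weights, and updates them with an exponentiated-weights rule. The abstract does not name the online learning algorithm or define its gradient-based signal, so the update rule, the learning rate `eta`, the `signals` values, and the function names are all assumptions chosen for illustration.

```python
import math

def allocate_budget(weights, budget):
    """Split an integer sample budget across augmentation types by weight."""
    total = sum(weights)
    raw = [budget * w / total for w in weights]
    counts = [int(math.floor(r)) for r in raw]
    # Hand out the samples lost to rounding, largest fractional part first.
    leftover = budget - sum(counts)
    order = sorted(range(len(raw)), key=lambda i: raw[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts

def update_weights(weights, signals, eta=0.5):
    """Exponentiated update: types with a larger signal gain weight.

    `signals` stands in for a per-augmentation score derived from gradients;
    how such a score is computed is an assumption left outside this sketch.
    """
    new = [w * math.exp(eta * s) for w, s in zip(weights, signals)]
    total = sum(new)
    return [w / total for w in new]

# Three augmentation types, starting from the uniform allocation.
weights = [1 / 3] * 3
counts = allocate_budget(weights, budget=30)

# Hypothetical signals after one epoch: the first type looked most useful.
weights = update_weights(weights, signals=[1.0, 0.0, 0.0])
next_counts = allocate_budget(weights, budget=30)
```

Starting from uniform weights reproduces the usual uniform split, so a scheme of this kind only departs from the baseline once the signals begin to favour some augmentation types over others.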
