Arindam Saha

Automating Experimental Optics with Sample Efficient Machine Learning Methods

Mar 18, 2025

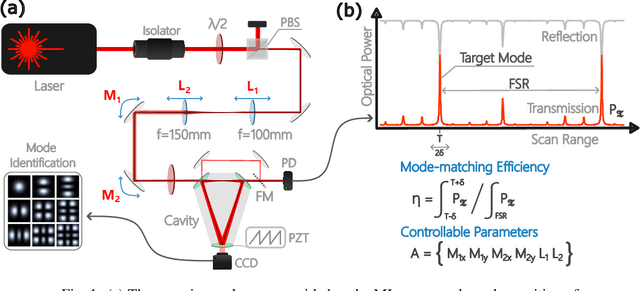

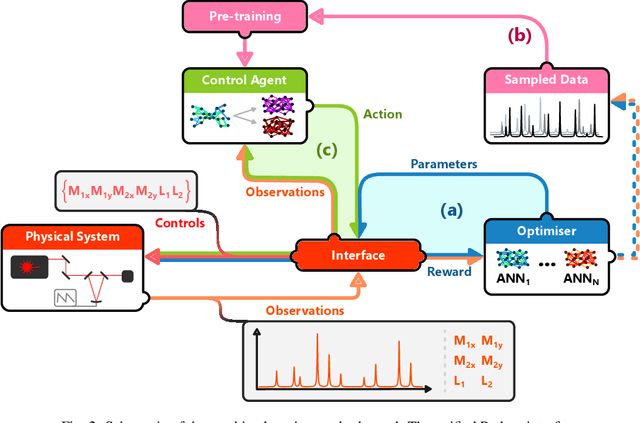

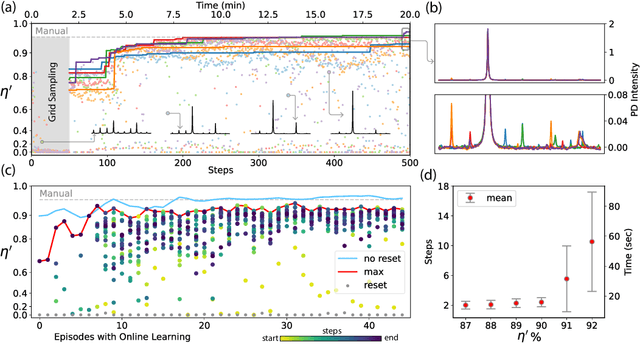

Abstract: As free-space optical systems grow in scale and complexity, troubleshooting becomes increasingly time-consuming and, in the case of remote installations, perhaps impractical. An example of a task that is often laborious is the alignment of a high-finesse optical resonator, which is highly sensitive to the mode of the input beam. In this work, we demonstrate how machine learning can be used to achieve autonomous mode-matching of a free-space optical resonator with minimal supervision. Our approach leverages sample-efficient algorithms to reduce data requirements while maintaining a simple architecture for easy deployment. The reinforcement learning scheme that we have developed shows that automation is feasible even in systems prone to drift in experimental parameters, as may well be the case in real-world applications.
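
In mode-matching, the figure of merit is the fraction of input power coupled into the cavity's fundamental mode. The toy sketch below is not the authors' method: it models that overlap for an ideal TEM00 input beam and cavity mode whose waists lie in the same plane (ignoring tilt and axial waist-position mismatch), and maximizes it with a plain derivative-free coordinate search, used here only as a simple stand-in for the reinforcement learning agent. The names `coupling_efficiency` and `coordinate_search`, the starting point, and the step schedule are all hypothetical.

```python
import math


def coupling_efficiency(w_in, w_cav, dx=0.0, dy=0.0):
    """Power overlap of two circular TEM00 Gaussian modes with waists in the same plane.

    w_in, w_cav: waist radii; dx, dy: lateral offset of the input beam (same units).
    Toy-model assumption: no tilt and no axial waist-position mismatch.
    """
    if w_in <= 0 or w_cav <= 0:
        return 0.0
    s = w_in**2 + w_cav**2
    size_term = (2.0 * w_in * w_cav / s) ** 2           # penalty for waist-size mismatch
    offset_term = math.exp(-2.0 * (dx**2 + dy**2) / s)  # penalty for lateral displacement
    return size_term * offset_term


def coordinate_search(f, x0, step=0.5, shrink=0.5, tol=1e-4, max_evals=500):
    """Maximize f by probing +/- step along each coordinate, shrinking the step when
    no probe improves. Returns (best_params, best_value, evaluations_used).

    Each call to f stands in for one measurement on the real setup, so the
    evaluation count is the sample cost.
    """
    x = list(x0)
    best = f(x)
    evals = 1
    while step > tol and evals < max_evals:
        improved = False
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = list(x)
                trial[i] += sign * step
                value = f(trial)
                evals += 1
                if value > best:
                    x, best, improved = trial, value, True
                    break  # accept the first improving move along this coordinate
        if not improved:
            step *= shrink
    return x, best, evals


if __name__ == "__main__":
    # Cavity waist fixed at 1.0 (arbitrary units); tune (w_in, dx, dy).
    def objective(p):
        return coupling_efficiency(p[0], 1.0, p[1], p[2])

    params, eta, n = coordinate_search(objective, [1.6, 0.4, -0.3])
    print(f"params={params}, efficiency={eta:.6f}, evaluations={n}")
```

Counting calls to the objective makes the sample cost explicit; that count is what a sample-efficient method tries to keep low when every evaluation is a physical measurement on a drifting setup.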

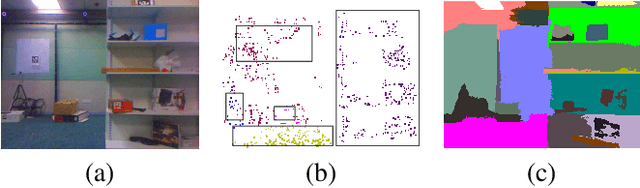

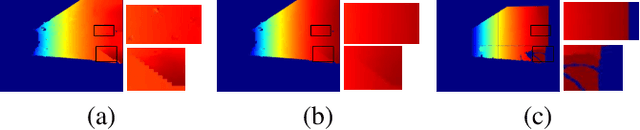

Indoor dense depth map at drone hovering

Apr 25, 2019

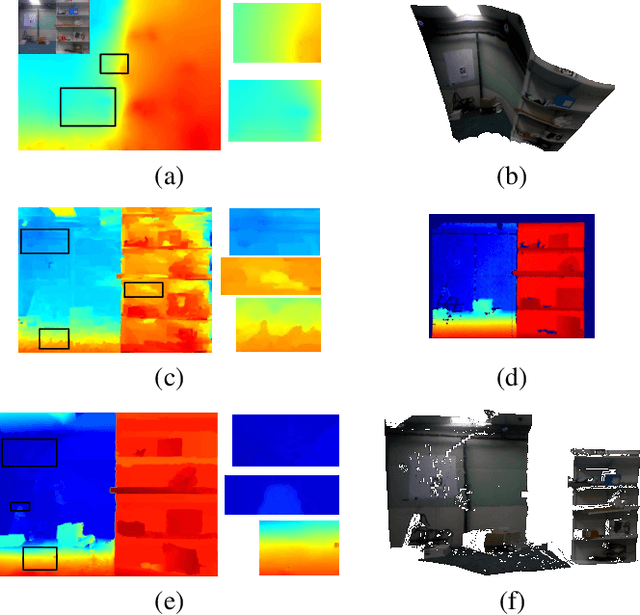

Abstract: Autonomous Micro Aerial Vehicles (MAVs) have gained tremendous attention in recent years. Autonomous indoor flight requires a dense depth map for navigable-space detection, which is the fundamental component of autonomous navigation. In this paper, we address the problem of reconstructing dense depth while a drone is hovering (i.e., with small camera motion) in indoor scenes, using the cameras and the sparse point cloud already estimated by a visual SLAM (vSLAM) system. We start by segmenting the scene based on sudden depth variations in the sparse 3D points, and introduce a patch-based local plane fitting via energy minimization that combines photometric consistency and co-planarity with neighbouring patches. For image segments that have almost no sparse points for initialization, the method additionally uses a plane-sweep technique. Experiments show that the proposed method produces better depth than earlier small-motion methods in indoor scenes with artificial lighting and low texture.
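
To illustrate how a local plane turns sparse points into dense depth, the sketch below fits a plane to the sparse 3D points of one segment by least squares (via SVD) and then intersects each pixel's viewing ray with that plane. It assumes points expressed in the camera frame, a pinhole intrinsic matrix `K`, and no lens distortion. It covers only this geometric initialization, not the paper's energy minimization with photometric-consistency and co-planarity terms; the function names `fit_plane` and `plane_depth` are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane n . X = d through an (N, 3) array of 3D points (N >= 3)."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return normal, float(normal @ centroid)


def plane_depth(pixels, K, normal, d):
    """Depth Z at which each pixel's viewing ray meets the plane n . X = d.

    The ray through pixel (u, v) is X = t * K^-1 [u, v, 1]^T. For a standard pinhole K
    that ray direction has z = 1, so the depth Z equals t = d / (n . ray).
    """
    homog = np.column_stack([pixels, np.ones(len(pixels))])
    rays = homog @ np.linalg.inv(K).T  # (M, 3) ray directions, each with z = 1
    denom = rays @ normal
    # Rays (nearly) parallel to the plane never intersect it: report NaN.
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(np.abs(denom) > 1e-12, d / denom, np.nan)


if __name__ == "__main__":
    K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
    # Synthetic sparse points on the tilted plane Z = 2 + 0.1 X (camera frame).
    xs, ys = np.meshgrid(np.linspace(-0.5, 0.5, 5), np.linspace(-0.4, 0.4, 4))
    pts = np.column_stack([xs.ravel(), ys.ravel(), 2.0 + 0.1 * xs.ravel()])
    n, d = fit_plane(pts)
    print(plane_depth(np.array([[320.0, 240.0], [420.0, 240.0]]), K, n, d))
```

The sign of the fitted normal is arbitrary, but `d` flips with it, so the computed depth is unaffected.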

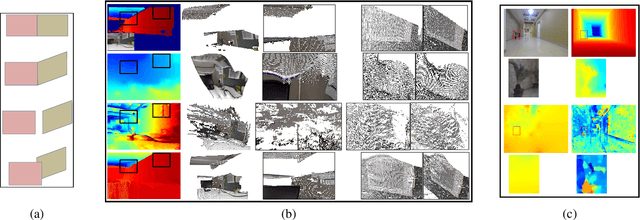

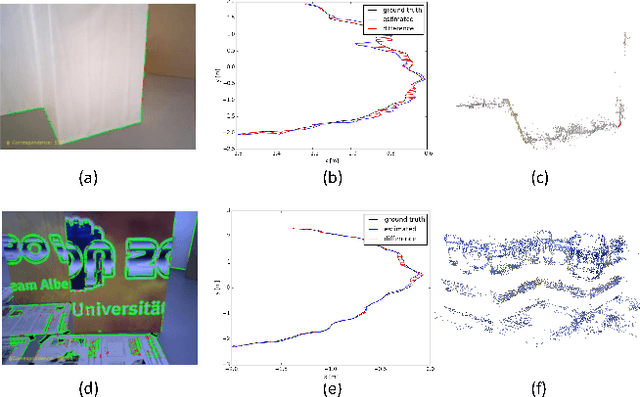

Edge SLAM: Edge Points Based Monocular Visual SLAM

Jan 14, 2019

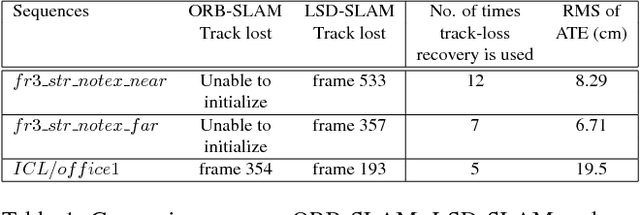

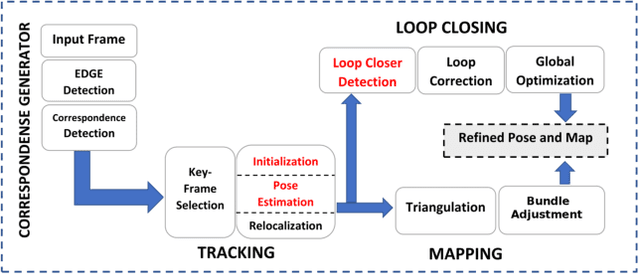

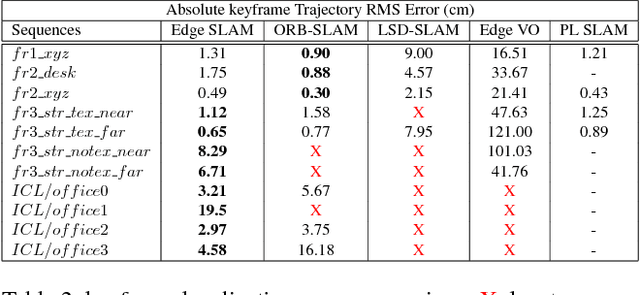

Abstract: Visual SLAM has made significant progress in recent years owing to strong attention from the vision community, but challenges remain in low-textured environments. Feature-based visual SLAM systems do not produce reliable camera and structure estimates in low-textured environments because too few features are available. Moreover, existing visual SLAM systems produce partial reconstructions when the number of 3D-2D correspondences is insufficient for incremental camera estimation using bundle adjustment. This paper presents Edge SLAM, a feature-based monocular visual SLAM that mitigates these problems. Our Edge SLAM pipeline detects edge points in images and tracks them using optical flow to obtain point correspondences. We further refine these correspondences using the geometric relationship among three views. Owing to our edge-point tracking, we use a robust method for two-view initialization of bundle adjustment. Our SLAM also identifies situations in which estimating a new camera into the existing reconstruction is becoming unreliable, and in those cases adopts a novel method that estimates the new camera reliably using a local optimization technique. We present an extensive evaluation of our SLAM pipeline on the most popular open datasets and compare it with the state of the art. Experimental results indicate that Edge SLAM is robust and works reliably in both textured and less-textured environments in comparison with existing state-of-the-art SLAM systems.

* ICCV Workshops 2017, Venice, Italy, October 22-29, 2017
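
Edge points are pixels with a strong intensity gradient. The sketch below selects them by thresholding a normalized central-difference gradient magnitude. It is a simplified, hypothetical stand-in for a full edge detector such as Canny (no smoothing, non-maximum suppression, or hysteresis), it is not Edge SLAM's own detector, and it does not cover the optical-flow tracking or three-view refinement; the name `edge_points` and the `rel_thresh` parameter are illustrative.

```python
import numpy as np


def edge_points(img, rel_thresh=0.25):
    """Return an (N, 2) array of (x, y) pixel coordinates whose gradient magnitude is
    at least rel_thresh times the largest gradient magnitude in the image."""
    img = np.asarray(img, dtype=float)
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    # Central differences; the outermost rows/columns keep a zero gradient.
    gx[:, 1:-1] = 0.5 * (img[:, 2:] - img[:, :-2])
    gy[1:-1, :] = 0.5 * (img[2:, :] - img[:-2, :])
    mag = np.hypot(gx, gy)
    peak = mag.max()
    if peak == 0:
        return np.empty((0, 2), dtype=int)  # flat image: no edge points
    ys, xs = np.nonzero(mag >= rel_thresh * peak)
    return np.column_stack([xs, ys])


if __name__ == "__main__":
    # Synthetic 8 x 20 image with a vertical step edge between columns 9 and 10.
    img = np.zeros((8, 20))
    img[:, 10:] = 1.0
    pts = edge_points(img)
    print(len(pts), sorted(set(pts[:, 0].tolist())))
```

In a real pipeline, the selected points would then be tracked into the next frame with an optical-flow method such as pyramidal Lucas-Kanade (for example OpenCV's `cv2.calcOpticalFlowPyrLK`).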
