Arindam Roychoudhury

Perception for Humanoid Robots

Sep 27, 2023

Abstract: Purpose of Review: In the field of humanoid robotics, perception plays a fundamental role in enabling robots to interact seamlessly with humans and their surroundings, leading to improved safety, efficiency, and user experience. This scientific study investigates various perception modalities and techniques employed in humanoid robots, including visual, auditory, and tactile sensing, by exploring recent state-of-the-art approaches for perceiving and understanding the internal state, the environment, objects, and human activities. Recent Findings: Internal state estimation makes extensive use of Bayesian filtering methods and of optimization techniques based on a maximum a-posteriori formulation, both utilizing proprioceptive sensing. In the area of external environment understanding, with an emphasis on robustness and adaptability to dynamic, unforeseen environmental changes, the recent research discussed in this study has focused largely on multi-sensor fusion and machine learning, in contrast to hand-crafted, rule-based systems. Human-robot interaction methods have established the importance of contextual information representation and memory for understanding human intentions. Summary: This review summarizes the recent developments and trends in the field of perception in humanoid robots. Three main areas of application are identified, namely, internal state estimation, external environment estimation, and human-robot interaction. The applications of diverse sensor modalities in each of these areas are considered, and recent significant works are discussed.

Fast-Replanning Motion Control with Short-Term Aborting A*

Sep 16, 2021

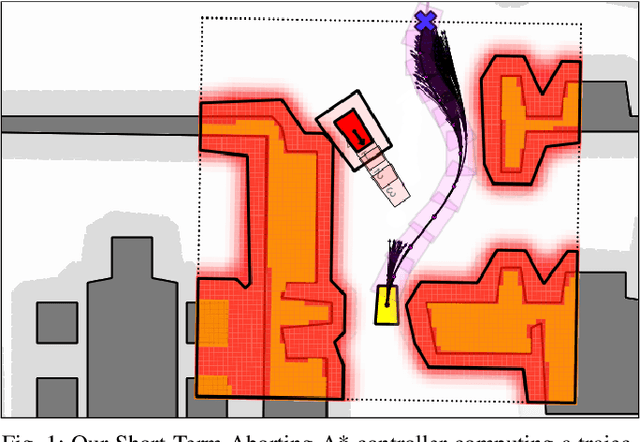

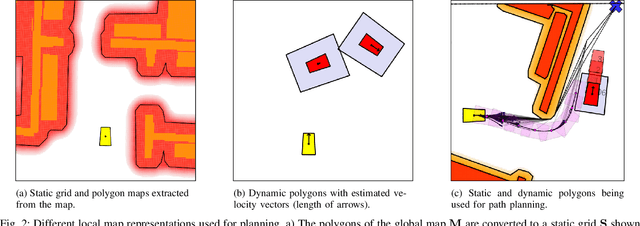

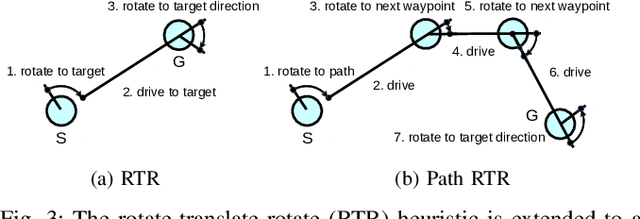

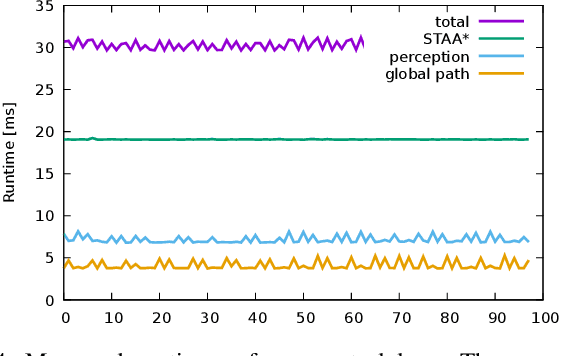

Abstract: Autonomously driving vehicles must be able to navigate in dynamic and unpredictable environments in a collision-free manner. So far, this has only been partially achieved in driverless cars and warehouse installations, where marked structures such as roads, lanes, and traffic signs simplify the motion planning and collision avoidance problem. We present a new control framework for car-like vehicles that is suitable for virtually any environment. It is based on an unprecedentedly fast-paced A* implementation that allows the control cycle to run at a frequency of 33 Hz. Thanks to an efficient heuristic consisting of rotate-translate-rotate motions laid out along the shortest path to the target, our Short-Term Aborting A* (STAA*) can be aborted early in order to maintain a high and steady control rate. This enables us to place our STAA* algorithm as a low-level replanning controller that is well suited for navigation and collision avoidance in dynamic environments. While our STAA* expands states along the shortest path, it takes care of collision checking with the environment, including predicted future states of moving obstacles, and returns the best solution found when the computation time runs out. Despite the bounded computation time, our STAA* does not get trapped in environmental minima because it follows the shortest path. In simulated experiments, we demonstrate that our control approach is superior to an improved version of the Dynamic Window Approach with predictive collision avoidance capabilities.
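The idea of aborting A* early and returning the best solution found so far can be illustrated with a minimal sketch. This is not the authors' STAA* implementation: it assumes a 4-connected occupancy grid, a Manhattan-distance heuristic in place of the rotate-translate-rotate heuristic, and a fixed expansion budget (`max_expansions`) as a deterministic stand-in for the computation-time limit. The function name `abortable_astar` and all parameters are hypothetical.

```python
import heapq


def abortable_astar(grid, start, goal, max_expansions):
    """A* on a 4-connected grid that stops after max_expansions expansions.

    grid[r][c] is truthy for blocked cells (assumed representation).
    Returns (path, reached_goal). If the budget runs out first, the path
    leads to the expanded cell closest to the goal by the heuristic.
    """
    def h(cell):
        # Manhattan distance: an assumed stand-in heuristic.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(h(start), 0, start)]  # entries are (f, g, cell)
    parent = {start: None}
    cost = {start: 0}
    closed = set()
    best = start  # best partial solution found so far
    expansions = 0

    while open_heap and expansions < max_expansions:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        closed.add(cell)
        expansions += 1
        if h(cell) < h(best):
            best = cell
        if cell == goal:
            break
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue  # out of bounds or blocked
            ng = g + 1
            if ng < cost.get(nxt, float("inf")):
                cost[nxt] = ng
                parent[nxt] = cell
                heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))

    # Walk parent links back from the best cell to recover the path.
    path = []
    node = best
    while node is not None:
        path.append(node)
        node = parent[node]
    path.reverse()
    return path, best == goal
```

In a replanning controller, a call like this would run once per control cycle, and only the first step of the returned path would be executed before planning again. With a wall-clock limit instead of an expansion count, the loop condition would compare against a deadline, which is closer to what the abstract describes.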
