Arindam Biswas

Graph Expansion in Pruned Recurrent Neural Network Layers Preserve Performance

Mar 17, 2024

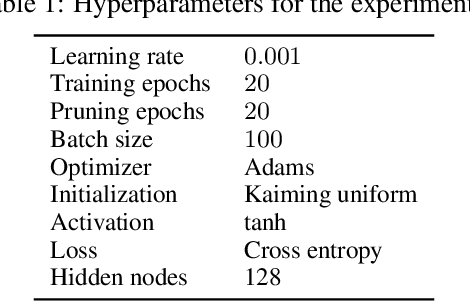

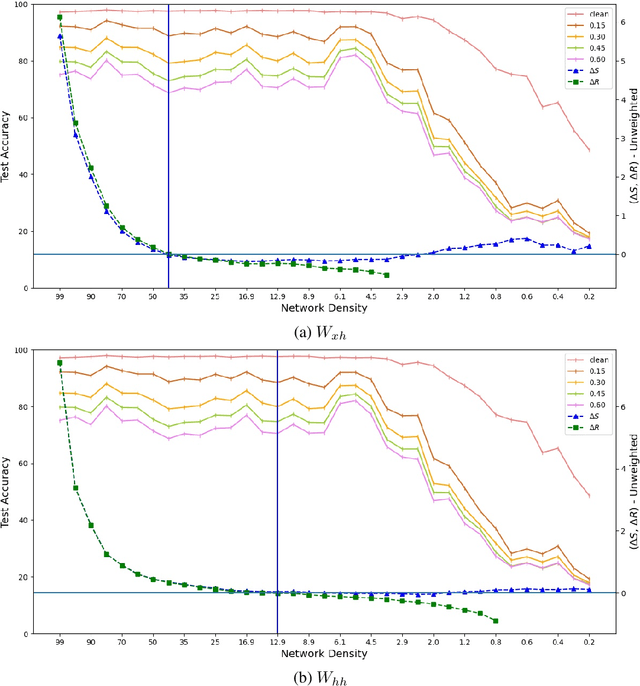

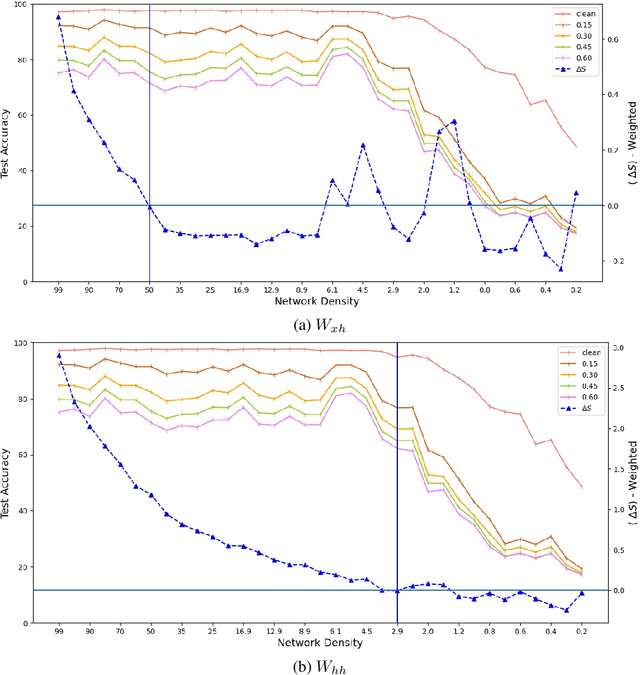

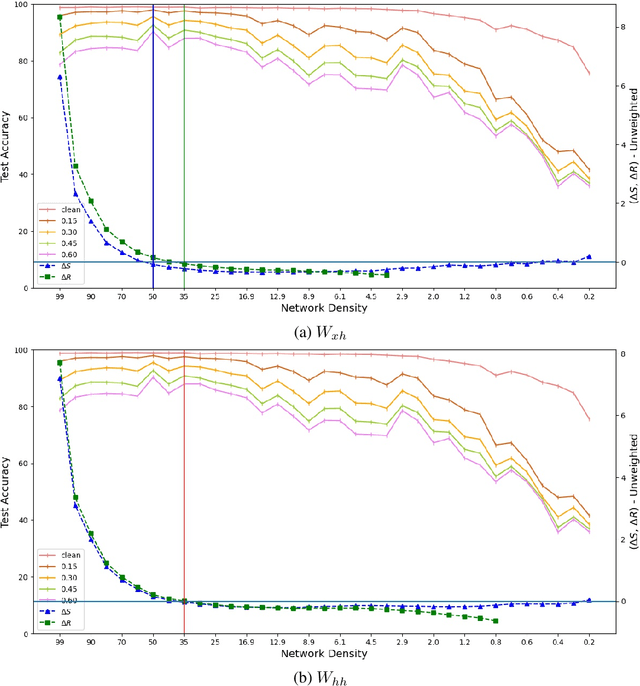

Abstract: The expansion property of a graph refers to its being strongly connected while remaining sparse. It has been reported that deep neural networks can be pruned to a high degree of sparsity while maintaining their performance. Such pruning is essential for performing real-time sequence learning tasks using recurrent neural networks on resource-constrained platforms. We prune recurrent networks such as RNNs and LSTMs while maintaining a large spectral gap of the underlying graphs and ensuring their layerwise expansion properties. We also study the time-unfolded recurrent network graphs in terms of the properties of their bipartite layers. Experimental results on the benchmark sequence MNIST, CIFAR-10, and Google speech command data show that expander graph properties are key to preserving the classification accuracy of RNNs and LSTMs.
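To make the notion of a layerwise spectral gap concrete, here is a minimal sketch and not the paper's pruning procedure. It assumes a layer's sparsity pattern is given as a binary mask, treats that mask as the biadjacency matrix of a bipartite graph, and measures the gap as the difference between the two largest singular values. The function names `spectral_gap` and `random_row_regular_mask` are hypothetical, as is the choice of a random mask with a fixed number of connections per output unit.

```python
import numpy as np


def spectral_gap(mask):
    """Gap between the two largest singular values of a layer mask.

    The mask is read as the biadjacency matrix of a bipartite graph
    (rows = output units, columns = input units). The nonzero adjacency
    eigenvalues of that graph are +/- the singular values of the mask,
    so a large gap is one common indicator of good expansion.
    """
    s = np.linalg.svd(np.asarray(mask, dtype=float), compute_uv=False)
    if s.size < 2:
        return float(s[0])
    return float(s[0] - s[1])  # singular values are sorted descending


def random_row_regular_mask(n_out, n_in, d, seed=0):
    """Hypothetical sparse mask: each output unit keeps d random inputs."""
    rng = np.random.default_rng(seed)
    mask = np.zeros((n_out, n_in))
    for i in range(n_out):
        cols = rng.choice(n_in, size=d, replace=False)
        mask[i, cols] = 1.0
    return mask


if __name__ == "__main__":
    dense = np.ones((4, 4))   # fully connected layer
    matching = np.eye(4)      # sparse but disconnected layer
    sparse = random_row_regular_mask(64, 64, d=8, seed=0)
    for name, m in [("dense", dense), ("matching", matching), ("sparse", sparse)]:
        print(name, spectral_gap(m))
```

The fully connected 4x4 mask has a gap of 4, while the identity mask (a perfect matching, sparse but disconnected) has a gap of 0. A pruned mask that preserves expansion would sit between these extremes: sparse, yet with a gap that stays large relative to its top singular value.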

A New Bengali Readability Score

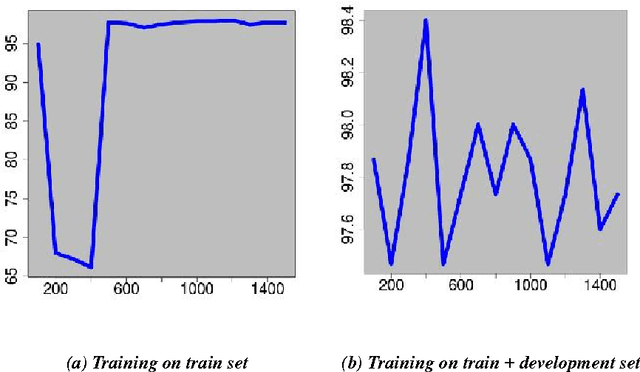

Mar 14, 2017

Abstract: In this paper, we propose methods to analyze the readability of Bengali-language texts. Our experiments produced exceptionally good results.

A Supervised Authorship Attribution Framework for Bengali Language

Sep 07, 2016

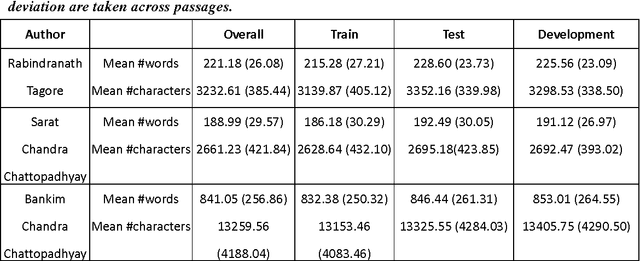

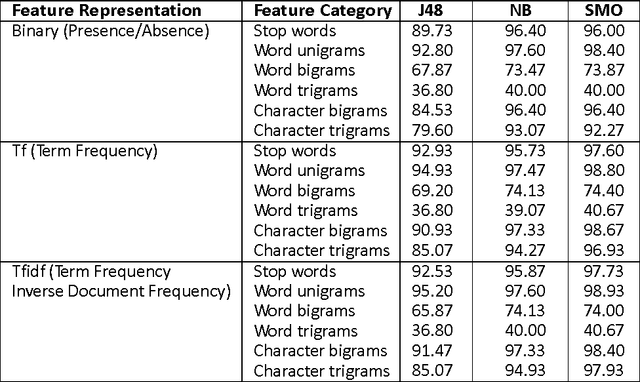

Abstract: Authorship attribution is a long-standing problem in Natural Language Processing. Several statistical and computational methods have been used to address it. In this paper, we propose methods for the authorship attribution problem in Bengali.

Inter-Rater Agreement Study on Readability Assessment in Bengali

Jul 08, 2014

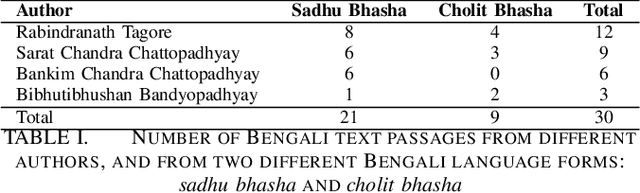

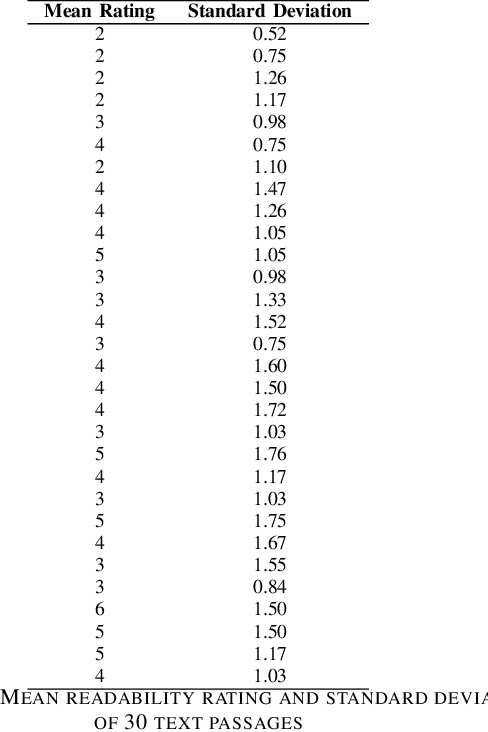

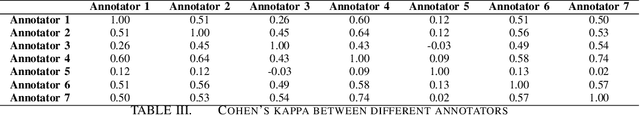

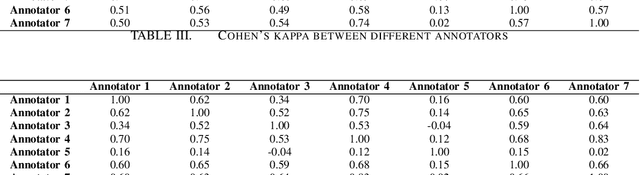

Abstract: An inter-rater agreement study was performed for readability assessment in Bengali. A 1-7 rating scale was used to indicate different levels of readability. We obtained moderate to fair agreement among seven independent annotators on 30 text passages written by four eminent Bengali authors. As a by-product of our study, we obtained a readability-annotated ground truth dataset in Bengali.

* 6 pages, 4 tables, Accepted in ICCONAC, 2014
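The abstract does not say which agreement statistic was used. As an illustration only, the sketch below computes Fleiss' kappa, one common choice when several raters each score every item on a categorical scale. The function name `fleiss_kappa` and the toy ratings are hypothetical and are not taken from the study's data.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for a list of items, each rated by the same number of raters.

    ratings:    list of lists; ratings[i] holds every rater's score for item i.
    categories: iterable of all allowed scores (e.g. range(1, 8) for a 1-7 scale).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("every item needs the same number (>= 2) of ratings")

    totals = Counter()      # how often each category was used overall
    agreement_sum = 0.0     # sum of per-item agreement P_i
    for row in ratings:
        counts = Counter(row)
        totals.update(counts)
        pairs = sum(c * c for c in counts.values()) - n_raters
        agreement_sum += pairs / (n_raters * (n_raters - 1))

    p_bar = agreement_sum / n_items                      # observed agreement
    p_e = sum((totals[c] / (n_items * n_raters)) ** 2    # chance agreement
              for c in categories)
    if p_e == 1.0:
        return float("nan")  # undefined when only one category is ever used
    return (p_bar - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    # Toy data: 3 passages, 3 raters, scores on a 1-7 scale.
    toy = [[4, 4, 5], [2, 2, 2], [6, 5, 6]]
    print(fleiss_kappa(toy, range(1, 8)))
```

Values near 1 indicate strong agreement, values near 0 indicate agreement no better than chance, and negative values indicate systematic disagreement.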
