Areej Eweida

Learning Position From Vehicle Vibration Using an Inertial Measurement Unit

Mar 06, 2023

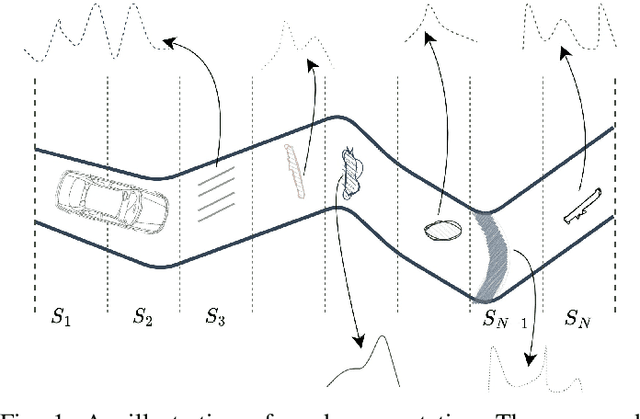

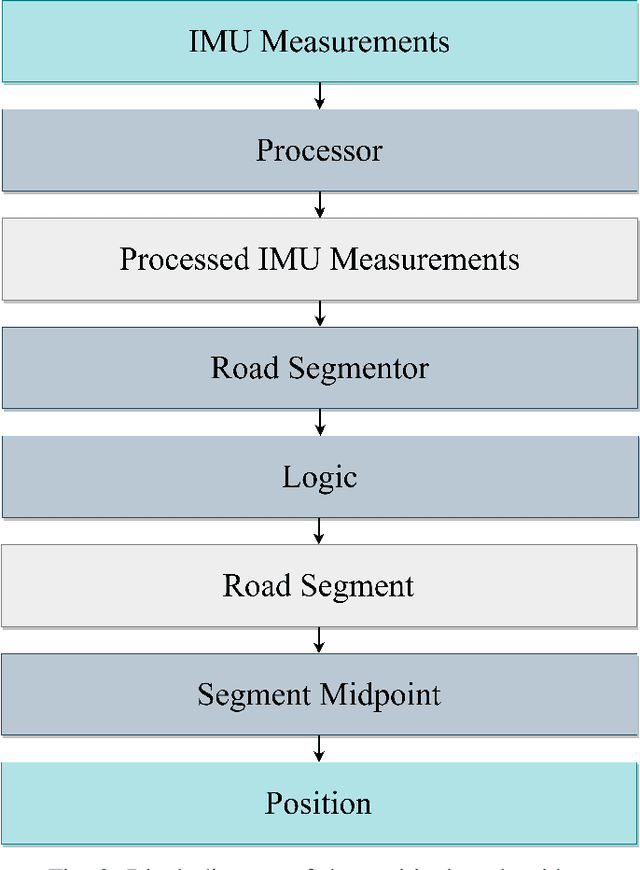

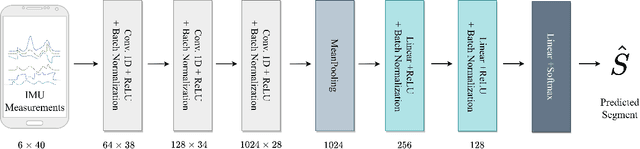

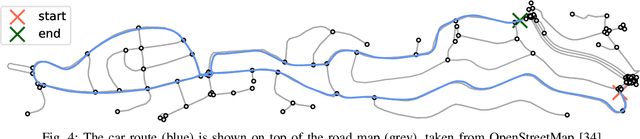

Abstract: This paper presents a novel approach to vehicle positioning that operates without reliance on the global navigation satellite system (GNSS). Traditional GNSS approaches are vulnerable to interference in certain environments, rendering them unreliable in situations such as urban canyons, under flyovers, or in low-reception areas. This study proposes a vehicle positioning method based on learning the road signature from accelerometer and gyroscope measurements obtained by an inertial measurement unit (IMU) sensor. In our approach, the route is divided into segments, each with a distinct signature that the IMU can detect through the vibrations of a vehicle in response to subtle changes in the road surface. The study presents two different data-driven methods for learning the road segment from IMU measurements. One method is based on convolutional neural networks and the other on an ensemble random forest applied to handcrafted features. Additionally, the authors present an algorithm to deduce the position of a vehicle in real time using the learned road segment. The approach was applied in two positioning tasks: (i) a car along a 6 km route in a dense urban area; (ii) an e-scooter on a 1 km route that combined road and pavement surfaces. The mean error between the proposed method's position and the ground truth was approximately 50 m for the car and 30 m for the e-scooter. Compared to a solution based on time integration of the IMU measurements, the mean error of the proposed approach is more than 5 times lower for the e-scooter and 20 times lower for the car.
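The handcrafted-feature route described in the abstract can be illustrated with a minimal sketch. The abstract does not list the features, window sizes, or segment lengths, so everything below is an assumption made for illustration: the function names `window_features` and `segment_to_position`, the choice of per-axis mean, standard deviation, and RMS as features, and the idea of reporting the centre of a predicted segment as the position estimate. The resulting feature matrix could be passed to any classifier (for example a random forest) that predicts a segment index.

```python
import numpy as np

def window_features(imu, window, step):
    """Split an IMU recording into sliding windows and compute simple features.

    imu    : array of shape (n_samples, 6), columns [ax, ay, az, gx, gy, gz]
    window : samples per window (hypothetical choice, not from the paper)
    step   : samples between consecutive window starts
    Returns an array of shape (n_windows, 18): per-axis mean, std, and RMS.
    """
    feats = []
    for start in range(0, imu.shape[0] - window + 1, step):
        w = imu[start:start + window]
        feats.append(np.concatenate([
            w.mean(axis=0),                  # average level per axis
            w.std(axis=0),                   # vibration spread per axis
            np.sqrt((w ** 2).mean(axis=0)),  # root-mean-square energy per axis
        ]))
    return np.asarray(feats)

def segment_to_position(segment_idx, segment_length_m):
    """Map predicted segment indices to distance along the route (segment centre).

    Assumes equal-length segments numbered from 0; this is an illustrative
    simplification, not the positioning algorithm from the paper.
    """
    return (np.asarray(segment_idx, dtype=float) + 0.5) * segment_length_m

# Usage with synthetic data standing in for a real recording.
rng = np.random.default_rng(0)
recording = rng.normal(size=(1000, 6))
features = window_features(recording, window=200, step=100)
positions = segment_to_position([0, 3], segment_length_m=100.0)
```

With equal-length segments, the worst-case error of a correctly classified segment in this sketch is half the segment length, which is one reason segment size would trade off against classification difficulty.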

MountNet: Learning an Inertial Sensor Mounting Angle with Deep Neural Networks

Dec 10, 2022

Abstract: Finding the mounting angle of a smartphone inside a car is crucial for navigation, motion detection, activity recognition, and other applications. It is a challenging task in several respects: (i) the mounting angle at the start of the drive is unknown and may differ significantly between users; (ii) the user, or a poor fixture, may change the mounting angle while driving; (iii) a rapid and computationally efficient real-time solution is required for most applications. To tackle these problems, a data-driven approach using deep neural networks (DNNs) is presented to learn the yaw mounting angle of a smartphone equipped with an inertial measurement unit (IMU) and strapped to a car. The proposed model, MountNet, uses only IMU readings as input and, in contrast to existing solutions, does not require inputs from global navigation satellite systems (GNSS). IMU data is collected for training and validation with the sensor mounted at a known yaw mounting angle, and a range of ground truth labels is generated by applying a prescribed rotation to the measurements. Although the training data did not include recordings with real sensor rotations, tests on data with real and synthetic rotations show similar results. An algorithm is formulated for real-time deployment to detect and smooth transitions in the device mounting angle estimated by MountNet. MountNet is shown to find the mounting angle rapidly, which is critical in real-time applications. Our method converges in less than 30 seconds of driving to a mean error of 4 degrees, allowing a fast calibration phase for other algorithms and applications. When the device is rotated in the middle of a drive, large changes converge in 5 seconds and small changes converge in less than 30 seconds.
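The label-generation step described in the abstract (applying a prescribed rotation to measurements recorded at a known yaw angle) can be sketched as follows. This is an illustrative sketch, not MountNet's actual pipeline: the function names `rotate_yaw` and `augment_with_yaw`, the column layout of the IMU array, the rotation sign convention, and the wrapping of labels to [0, 360) are all assumptions.

```python
import numpy as np

def rotate_yaw(imu, yaw_deg):
    """Rotate accelerometer and gyroscope vectors about the z axis.

    imu     : array of shape (n_samples, 6), columns [ax, ay, az, gx, gy, gz]
    yaw_deg : prescribed rotation in degrees (counter-clockwise about z;
              the sign convention is an assumption of this sketch)
    """
    t = np.deg2rad(yaw_deg)
    c, s = np.cos(t), np.sin(t)
    rot = np.array([[c, -s, 0.0],
                    [s,  c, 0.0],
                    [0.0, 0.0, 1.0]])
    acc = imu[:, :3] @ rot.T  # rotate each specific-force vector
    gyr = imu[:, 3:] @ rot.T  # rotate each angular-rate vector
    return np.hstack([acc, gyr])

def augment_with_yaw(imu, base_yaw_deg, yaw_offsets_deg):
    """Create (rotated recording, yaw label) pairs from one recording.

    base_yaw_deg    : known mounting yaw of the original recording
    yaw_offsets_deg : synthetic rotations to apply (hypothetical choice)
    """
    return [
        (rotate_yaw(imu, d), (base_yaw_deg + d) % 360.0)
        for d in yaw_offsets_deg
    ]

# Usage: one sample with acceleration along x and angular rate about z.
sample = np.array([[1.0, 0.0, 0.0, 0.0, 0.0, 1.0]])
pairs = augment_with_yaw(sample, base_yaw_deg=10.0, yaw_offsets_deg=[0.0, 90.0])
```

Because the rotation is about the z axis, the vertical accelerometer and gyroscope components are unchanged, while the horizontal components mix according to the yaw angle; this is the information a network would have to exploit to recover the label.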
