Ardavan Bidgoli

Artistic Style in Robotic Painting; a Machine Learning Approach to Learning Brushstroke from Human Artists

Jul 07, 2020

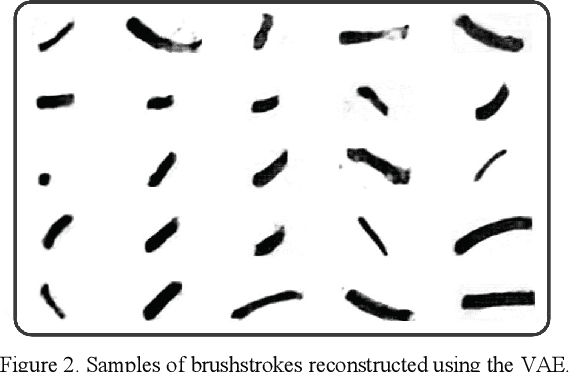

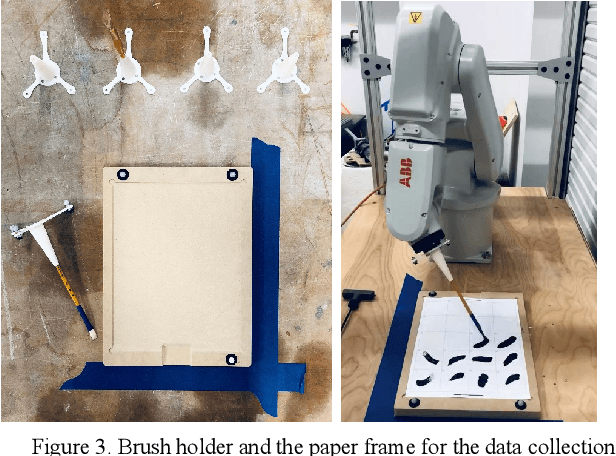

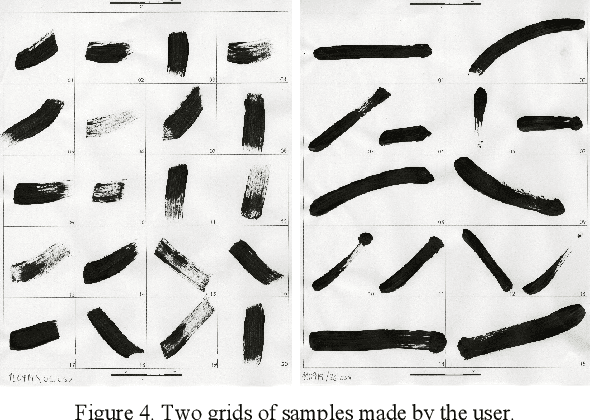

Abstract: Robotic painting has been a subject of interest among both artists and roboticists since the 1970s. Researchers and interdisciplinary artists have employed various painting techniques and human-robot collaboration models to create visual mediums on canvas. One of the challenges of robotic painting is to apply a desired artistic style to the painting. Style transfer techniques with machine learning models have helped us address this challenge with the visual style of a specific painting. However, other manual elements of style, i.e., the painting techniques and brushstrokes of an artist, have not been fully addressed. We propose a method to integrate an artistic style into the brushstrokes and the painting process through collaboration with a human artist. In this paper, we describe our approach to 1) collect brushstrokes and hand-brush motion samples from an artist, 2) train a generative model to generate brushstrokes that pertain to the artist's style, and 3) integrate the learned model on a robot arm to paint on a canvas. In a preliminary study, 71% of human evaluators find that our robot's paintings pertain to the characteristics of the artist's style.

Machinic Surrogates: Human-Machine Relationships in Computational Creativity

Aug 03, 2019

Abstract: Recent advancements in artificial intelligence (AI) and its sub-branch machine learning (ML) promise machines that go beyond the boundaries of automation and behave autonomously. Applications of these machines in creative practices such as art and design entail relationships between users and machines that have been described as a form of collaboration or co-creation between computational and human agents. This paper uses examples from art and design to argue that this frame is incomplete as it fails to acknowledge the socio-technical nature of AI systems, and the different human agencies involved in their design, implementation, and operation. Situating applications of AI-enabled tools in creative practices in a spectrum between automation and autonomy, this paper distinguishes different kinds of human engagement elicited by systems deemed automated or autonomous. Reviewing models of artistic collaboration during the late 20th century, it suggests that collaboration is at the core of these artistic practices. We build upon the growing literature of machine learning and art to look for the human agencies inscribed in works of computational creativity, and expand the co-creation frame to incorporate emerging forms of human-human collaboration mediated through technical artifacts such as algorithms and data.

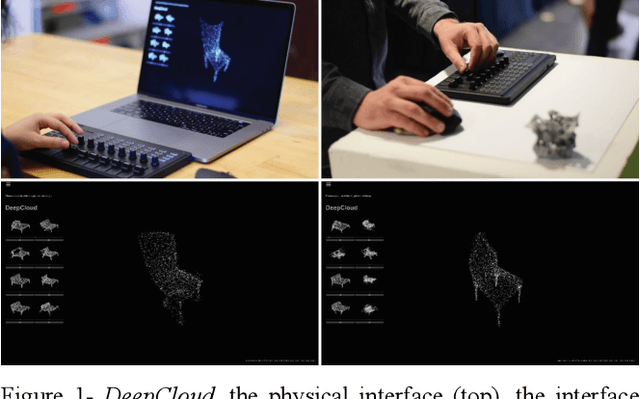

DeepCloud. The Application of a Data-driven, Generative Model in Design

Apr 01, 2019

Abstract: Generative systems have a significant potential to synthesize innovative design alternatives. Still, most of the common systems that have been adopted in design require the designer to explicitly define the specifications of the procedures and in some cases the design space. In contrast, a generative system could potentially learn both aspects through processing a database of existing solutions without the supervision of the designer. To explore this possibility, we review recent advancements of generative models in machine learning and current applications of learning techniques in design. Then, we describe the development of a data-driven generative system titled DeepCloud. It combines an autoencoder architecture for point clouds with a web-based interface and analog input devices to provide an intuitive experience for data-driven generation of design alternatives. We delineate the implementation of two prototypes of DeepCloud, their contributions, and potentials for generative design.
