Archisman Ghosh

AI-driven Reverse Engineering of QML Models

Aug 29, 2024

Abstract:Quantum machine learning (QML) is a rapidly emerging area of research, driven by the capabilities of Noisy Intermediate-Scale Quantum (NISQ) devices. With the progress in the research of QML models, there is a rise in third-party quantum cloud services to cater to the increasing demand for resources. New security concerns surface, specifically regarding the protection of intellectual property (IP) from untrustworthy service providers. One of the most pressing risks is the potential for reverse engineering (RE) by malicious actors who may steal proprietary quantum IPs such as trained parameters and QML architecture, modify them to remove additional watermarks or signatures, and re-transpile them for other quantum hardware. Prior work presents a brute-force approach to RE the QML parameters, which incurs exponential time overhead. In this paper, we introduce an autoencoder-based approach to extract the parameters from transpiled QML models deployed on untrusted third-party vendors. We experiment on multi-qubit classifiers and note that they can be reverse-engineered under restricted conditions with a mean error of order 10^-1. The time taken to prepare the dataset and train the model to reverse engineer the QML circuit is of the order of 10^3 seconds (10^2x better than the previously reported value for 4-layered 4-qubit classifiers), which makes the threat of RE highly potent and underscores the need for continued development of effective defenses.

R-STELLAR: A Resilient Synthesizable Signature Attenuation SCA Protection on AES-256 with built-in Attack-on-Countermeasure Detection

Aug 21, 2024

Abstract:Side channel attacks (SCAs) remain a significant threat to the security of cryptographic systems in modern embedded devices. Even mathematically secure cryptographic algorithms, when implemented in hardware, inadvertently leak information through physical side channel signatures such as power consumption, electromagnetic (EM) radiation, light emissions, and acoustic emanations. Exploiting these side channels significantly reduces the search space of the attacker. In recent years, physical countermeasures have significantly increased the minimum traces to disclosure (MTD) to 1 billion. Among them, signature attenuation is the first method to achieve this mark. Signature attenuation often relies on analog techniques, and digital signature attenuation reduces MTD to 20 million, requiring additional methods for high resilience. We focus on improving the digital signature attenuation by an order of magnitude (MTD 200M). Additionally, we explore possible attacks against the signature attenuation countermeasure. We introduce a Voltage drop Linear region Biasing (VLB) attack technique that reduces the MTD by a factor of more than 2000 relative to the previous threshold. This is the first known attack against a physical side-channel attack (SCA) countermeasure. We have implemented an attack detector with a response time of 0.8 milliseconds to detect such attacks, limiting the SCA leakage window to sub-ms, which is insufficient for a successful attack.

The Quantum Imitation Game: Reverse Engineering of Quantum Machine Learning Models

Jul 09, 2024

Abstract:Quantum Machine Learning (QML) amalgamates quantum computing paradigms with machine learning models, providing significant prospects for solving complex problems. However, with the expansion of numerous third-party vendors in the Noisy Intermediate-Scale Quantum (NISQ) era of quantum computing, the security of QML models is of prime importance, particularly against reverse engineering, which could expose trained parameters and algorithms of the models. We assume the untrusted quantum cloud provider is an adversary having white-box access to the transpiled user-designed trained QML model during inference. Reverse engineering (RE) to extract the pre-transpiled QML circuit will enable re-transpilation and usage of the model for various hardware with completely different native gate sets and even different qubit technology. Such flexibility may not be obtained from the transpiled circuit which is tied to a particular hardware and qubit technology. The information about the number of parameters, and optimized values can allow further training of the QML model to alter the QML model, tamper with the watermark, and/or embed their own watermark or refine the model for other purposes. In this first effort to investigate the RE of QML circuits, we perform RE and compare the training accuracy of original and reverse-engineered Quantum Neural Networks (QNNs) of various sizes. We note that multi-qubit classifiers can be reverse-engineered under specific conditions with a mean error of order 1e-2 in a reasonable time. We also propose adding dummy fixed parametric gates in the QML models to increase the RE overhead for defense. For instance, adding 2 dummy qubits and 2 layers increases the overhead by ~1.76 times for a classifier with 2 qubits and 3 layers with a performance overhead of less than 9%. We note that RE is a very powerful attack model which warrants further efforts on defenses.

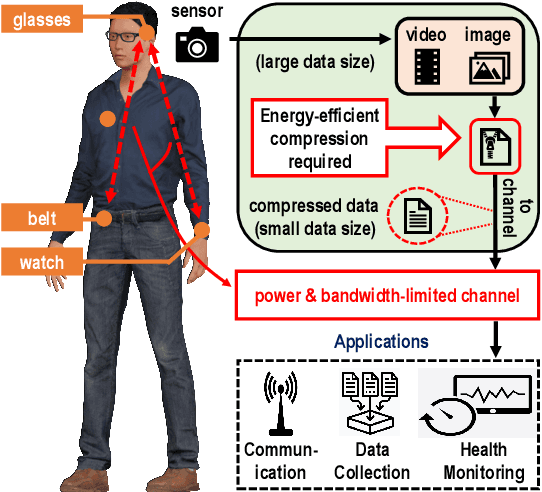

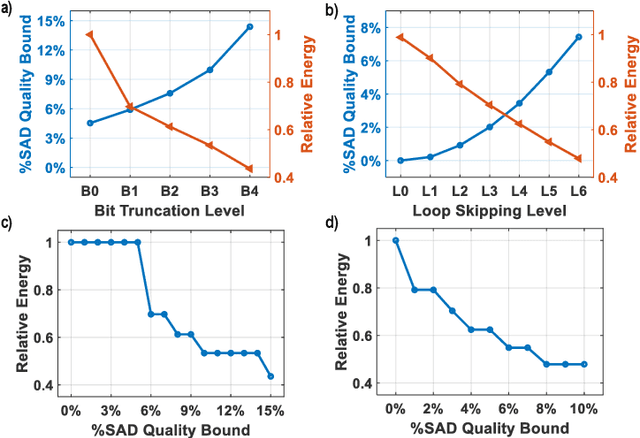

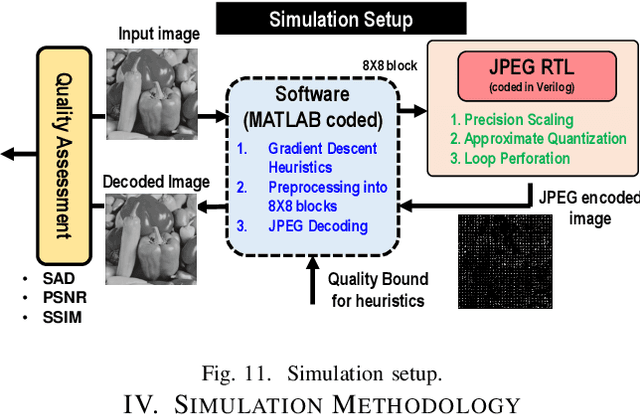

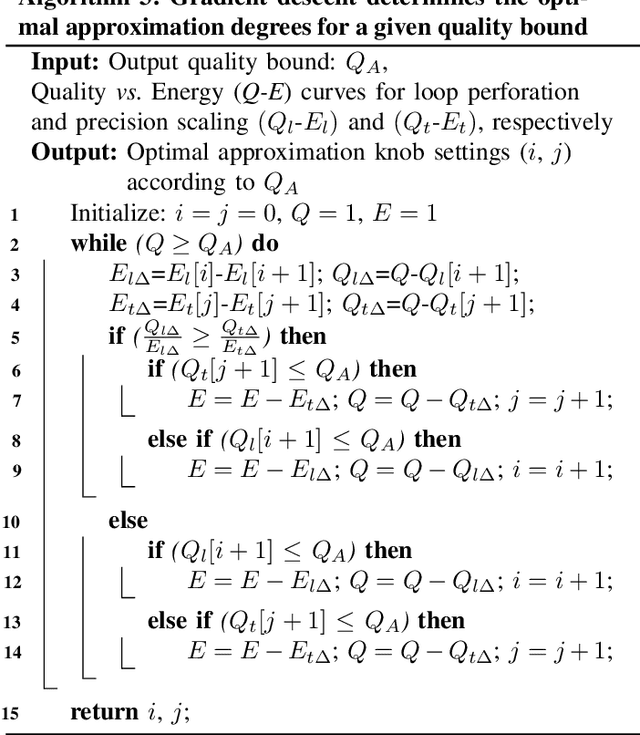

Approximate DCT and Quantization Techniques for Energy-Constrained Image Sensors

Jun 24, 2024

Abstract:Recent expansions in multimedia devices gather enormous amounts of real-time images for processing and inference. The images are first compressed using compression schemes, like JPEG, to reduce storage costs and power for transmitting the captured data. Due to inherent error resilience and imperceptibility in images, JPEG can be approximated to reduce the required computation power and area. This work demonstrates the first end-to-end approximation computing-based optimization of JPEG hardware using i) an approximate division realized using bit-shift operators to reduce the complexity of the quantization block, ii) loop perforation, and iii) precision scaling on top of a multiplier-less fast DCT architecture to achieve an extremely energy-efficient JPEG compression unit which will be a perfect fit for power/bandwidth-limited scenario. Furthermore, a gradient descent-based heuristic composed of two conventional approximation strategies, i.e., Precision Scaling and Loop Perforation, is implemented for tuning the degree of approximation to trade off energy consumption with the quality degradation of the decoded image. The entire RTL design is coded in Verilog HDL, synthesized, mapped to TSMC 65nm CMOS technology, and simulated using Cadence Spectre Simulator under 25 °C, TT corner. The approximate division approach achieved around 28% reduction in the active design area. The heuristic-based approximation technique combined with accelerator optimization achieves a significant energy reduction of 36% for a minimal image quality degradation of 2% SAD. Simulation results also show that the proposed architecture consumes 15 µW at the DCT and quantization stages to compress a colored 480p image at 6fps.
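The abstract above mentions replacing the quantization divider with bit-shift operators. The snippet below is only an illustrative sketch of that general idea, not the paper's hardware design: it assumes each quantization step is rounded to the nearest power of two so that the division becomes a right shift. The function names `nearest_pow2_shift` and `approx_quantize` are hypothetical.

```python
def nearest_pow2_shift(q: int) -> int:
    """Return k such that 2**k is the power of two closest to q (ties go low)."""
    if q < 1:
        raise ValueError("quantization step must be >= 1")
    lo = q.bit_length() - 1                  # floor(log2(q))
    below = q - (1 << lo)                    # distance to 2**lo
    above = (1 << (lo + 1)) - q              # distance to 2**(lo + 1)
    return lo if below <= above else lo + 1


def approx_quantize(coeff: int, q: int) -> int:
    """Approximate round(coeff / q) with a rounding right shift (assumed scheme)."""
    k = nearest_pow2_shift(q)
    if k == 0:
        return coeff                         # dividing by 1 changes nothing
    sign = -1 if coeff < 0 else 1
    mag = abs(coeff)
    # Add half of the divisor before shifting so the result is rounded.
    return sign * ((mag + (1 << (k - 1))) >> k)


if __name__ == "__main__":
    for coeff, q in [(100, 16), (100, 10), (-100, 16)]:
        print(coeff, q, approx_quantize(coeff, q), round(coeff / q))
```

When the step is already a power of two the shift matches the rounded exact division; otherwise the result deviates (for example, a step of 10 is treated as 8), which is the kind of error that would be traded against divider area and energy.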

Guardians of the Quantum GAN

Apr 26, 2024

Abstract:Quantum Generative Adversarial Networks (qGANs) are at the forefront of image-generating quantum machine learning models. To accommodate the growing demand for Noisy Intermediate-Scale Quantum (NISQ) devices to train and infer quantum machine learning models, the number of third-party vendors offering quantum hardware as a service is expected to rise. This expansion introduces the risk of untrusted vendors potentially stealing proprietary information from the quantum machine learning models. To address this concern we propose a novel watermarking technique that exploits the noise signature embedded during the training phase of qGANs as a non-invasive watermark. The watermark is identifiable in the images generated by the qGAN allowing us to trace the specific quantum hardware used during training hence providing strong proof of ownership. To further enhance the security robustness, we propose the training of qGANs on a sequence of multiple quantum hardware, embedding a complex watermark comprising the noise signatures of all the training hardware that is difficult for adversaries to replicate. We also develop a machine learning classifier to extract this watermark robustly, thereby identifying the training hardware (or the suite of hardware) from the images generated by the qGAN validating the authenticity of the model. We note that the watermark signature is robust against inferencing on hardware different than the hardware that was used for training. We obtain watermark extraction accuracy of 100% and ~90% for training the qGAN on individual and multiple quantum hardware setups (and inferencing on different hardware), respectively. Since parameter evolution during training is strongly modulated by quantum noise, the proposed watermark can be extended to other quantum machine learning models as well.

Application of Quantum Tensor Networks for Protein Classification

Mar 11, 2024

Abstract:We show that protein sequences can be thought of as sentences in natural language processing and can be parsed using the existing Quantum Natural Language framework into parameterized quantum circuits of reasonable qubits, which can be trained to solve various protein-related machine-learning problems. We classify proteins based on their subcellular locations, a pivotal task in bioinformatics that is key to understanding biological processes and disease mechanisms. Leveraging the quantum-enhanced processing capabilities, we demonstrate that Quantum Tensor Networks (QTN) can effectively handle the complexity and diversity of protein sequences. We present a detailed methodology that adapts QTN architectures to the nuanced requirements of protein data, supported by comprehensive experimental results. We demonstrate two distinct QTNs, inspired by classical recurrent neural networks (RNN) and convolutional neural networks (CNN), to solve the binary classification task mentioned above. Our top-performing quantum model has achieved a 94% accuracy rate, which is comparable to the performance of a classical model that uses the ESM2 protein language model embeddings. It's noteworthy that the ESM2 model is extremely large, containing 8 million parameters in its smallest configuration, whereas our best quantum model requires only around 800 parameters. We demonstrate that these hybrid models exhibit promising performance, showcasing their potential to compete with classical models of similar complexity.
