Araz Taeihagh

The Risks of Machine Learning Systems

Apr 21, 2022

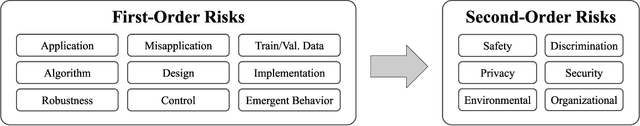

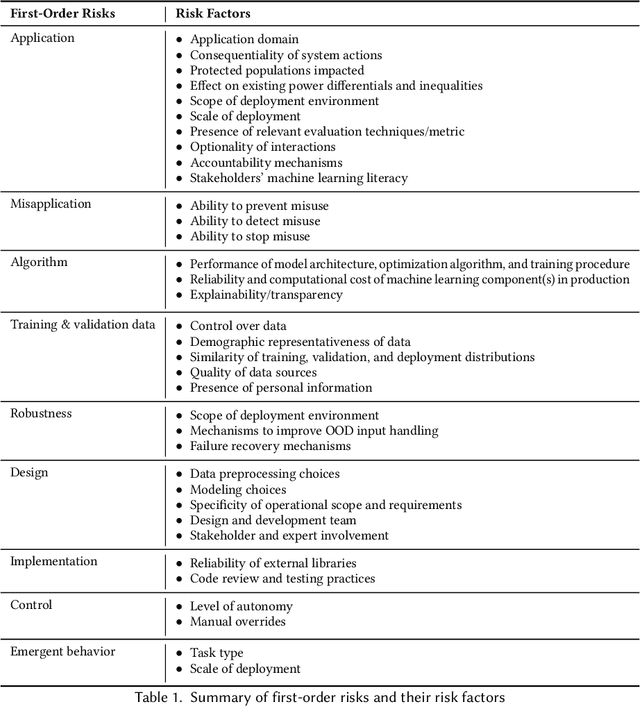

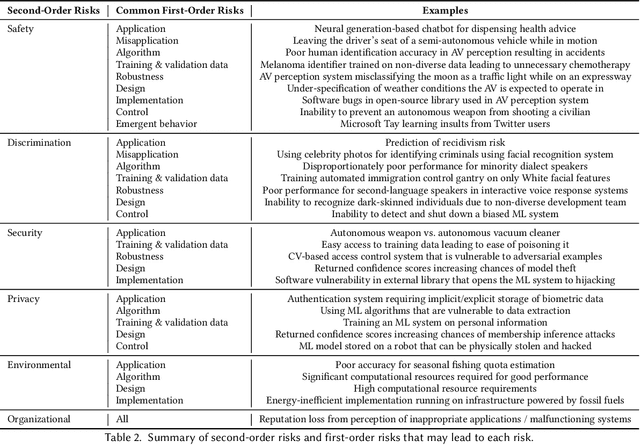

Abstract: The speed and scale at which machine learning (ML) systems are deployed are accelerating even as an increasing number of studies highlight their potential for negative impact. There is a clear need for companies and regulators to manage the risk from proposed ML systems before they harm people. To achieve this, private and public sector actors first need to identify the risks posed by a proposed ML system. A system's overall risk is influenced by its direct and indirect effects. However, existing frameworks for ML risk/impact assessment often address an abstract notion of risk or do not concretize this dependence. We propose to address this gap with a context-sensitive framework for identifying ML system risks comprising two components: a taxonomy of the first- and second-order risks posed by ML systems, and their contributing factors. First-order risks stem from aspects of the ML system, while second-order risks stem from the consequences of first-order risks. These consequences are system failures that result from design and development choices. We explore how different risks may manifest in various types of ML systems, the factors that affect each risk, and how first-order risks may lead to second-order effects when the system interacts with the real world. Throughout the paper, we show how real events and prior research fit into our Machine Learning System Risk framework (MLSR). MLSR operates on ML systems rather than technologies or domains, recognizing that a system's design, implementation, and use case all contribute to its risk. In doing so, it unifies the risks that are commonly discussed in the ethical AI community (e.g., ethical/human rights risks) with system-level risks (e.g., application, design, control risks), paving the way for holistic risk assessments of ML systems.

Reliability Testing for Natural Language Processing Systems

Jun 01, 2021

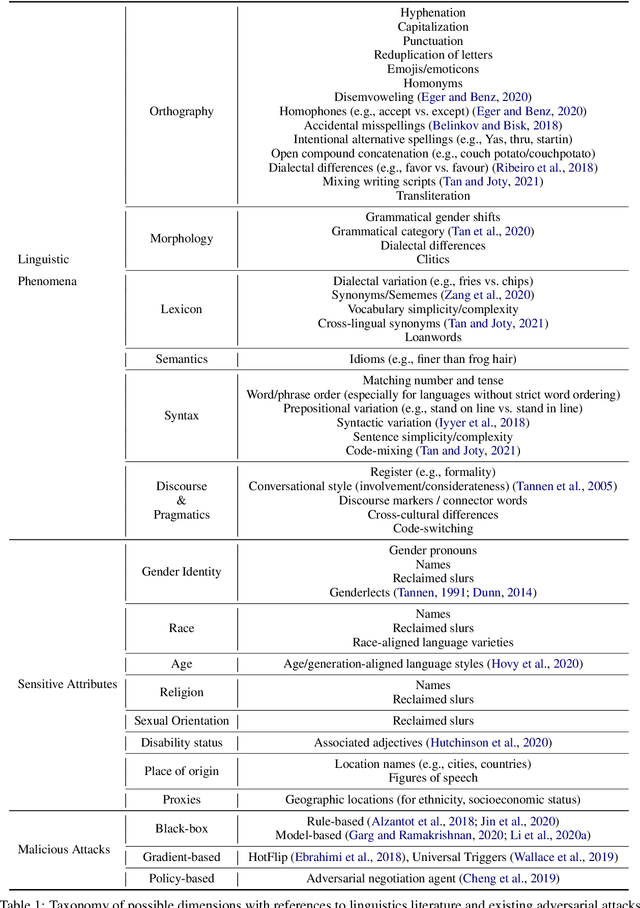

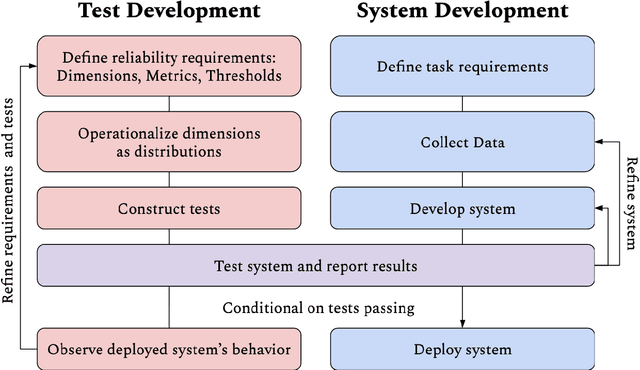

Abstract: Questions of fairness, robustness, and transparency are paramount to address before deploying NLP systems. Central to these concerns is the question of reliability: Can NLP systems reliably treat different demographics fairly and function correctly in diverse and noisy environments? To address this, we argue that reliability testing is needed and situate it among existing work on improving accountability. We show how adversarial attacks can be reframed for this goal, via a framework for developing reliability tests. We argue that reliability testing, with an emphasis on interdisciplinary collaboration, will enable rigorous and targeted testing and aid in the enactment and enforcement of industry standards.

Regulating human control over autonomous systems

Jul 22, 2020

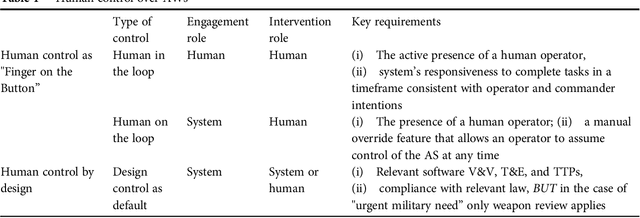

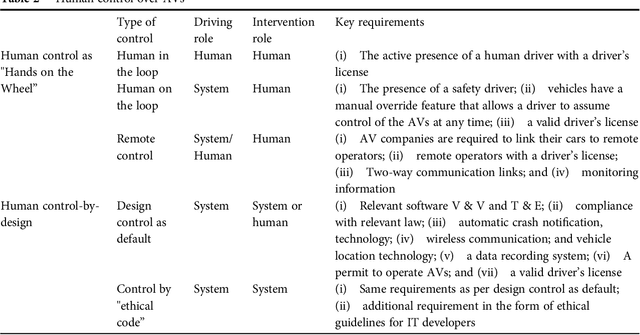

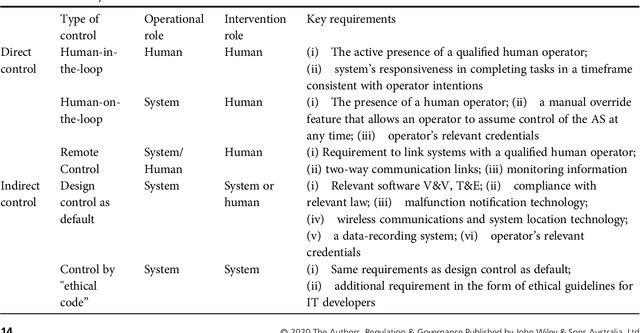

Abstract: In recent years, many sectors have experienced significant progress in automation, associated with the growing advances in artificial intelligence and machine learning. There are already automated robotic weapons that are able to evaluate and engage targets on their own, and there are already autonomous vehicles that do not need a human driver. It is argued that the use of increasingly autonomous systems (AS) should be guided by a policy of human control, according to which humans should exercise a significant level of judgment over AS. While in the military sector there is a fear that AS could mean that humans lose control over life-and-death decisions, in the transportation domain, on the contrary, there is a strongly held view that autonomy could bring significant operational benefits by removing the need for a human driver. This article explores the notion of human control in the United States in the two domains of defense and transportation. Operationalizing emerging policies of human control yields a typology of direct and indirect human control exercised over the use of AS. The typology helps to steer the debate away from the linguistic complexities of the term autonomy. Instead, it identifies where human factors are undergoing important changes and ultimately informs the formulation of more detailed rules and standards, which differ across domains, applications, and sectors.

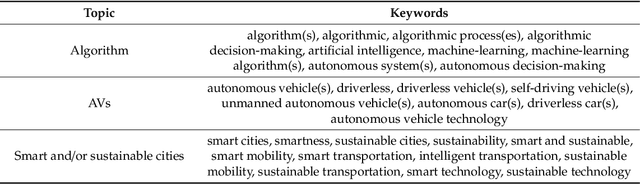

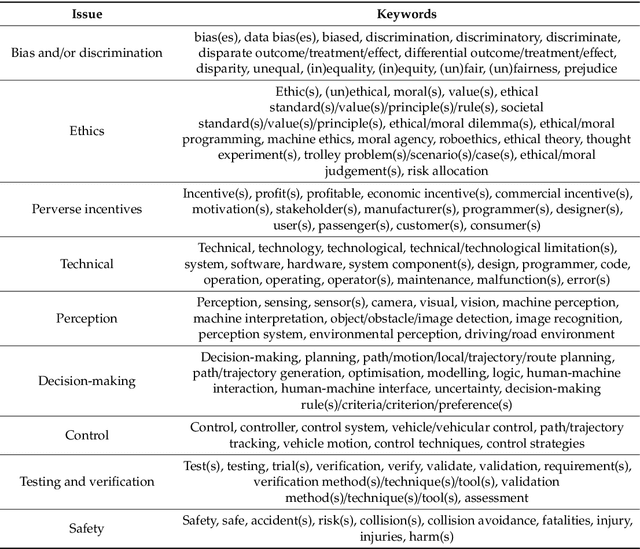

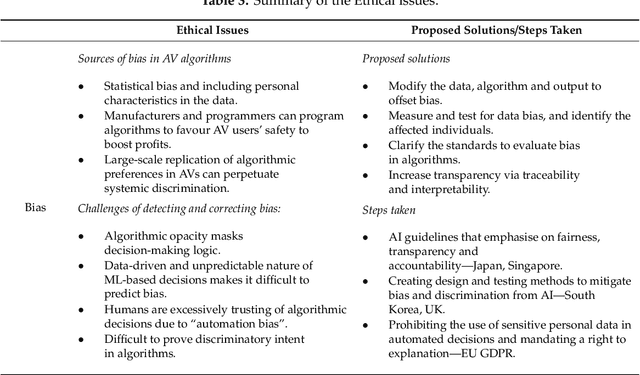

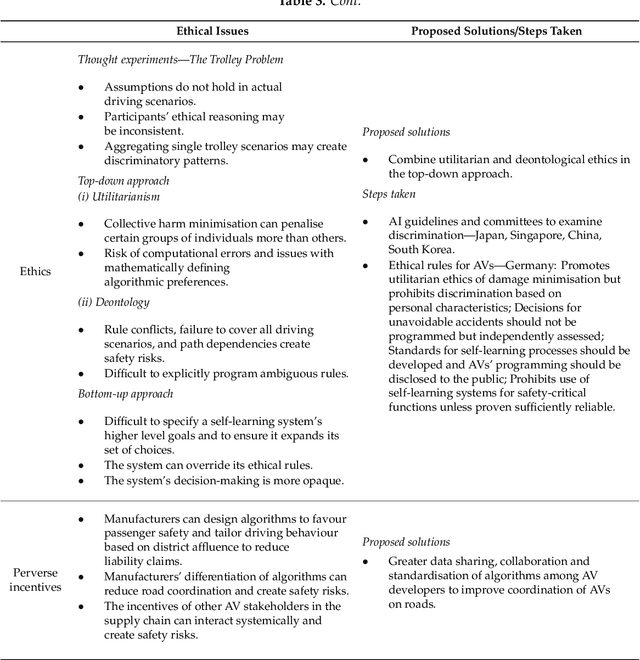

Algorithmic decision-making in AVs: Understanding ethical and technical concerns for smart cities

Oct 29, 2019

Abstract: Autonomous vehicles (AVs) are increasingly embraced around the world to advance smart mobility and, more broadly, smart and sustainable cities. Algorithms form the basis of decision-making in AVs, allowing them to perform driving tasks autonomously, efficiently, and more safely than human drivers, and offering various economic, social, and environmental benefits. However, algorithmic decision-making in AVs can also introduce new issues that create new safety risks and perpetuate discrimination. We identify bias, ethics, and perverse incentives as key ethical issues in AV algorithms' decision-making that can create new safety risks and discriminatory outcomes. Technical issues in AVs' perception, decision-making, and control algorithms, limitations of existing AV testing and verification methods, and cybersecurity vulnerabilities can also undermine the performance of the AV system. This article investigates the ethical and technical concerns surrounding algorithmic decision-making in AVs by exploring how driving decisions can perpetuate discrimination and create new safety risks for the public. We discuss steps taken to address these issues, highlight existing research gaps, and stress the need to mitigate these issues through the design of AV algorithms and through policies and regulations in order to fully realise AVs' benefits for smart and sustainable cities.

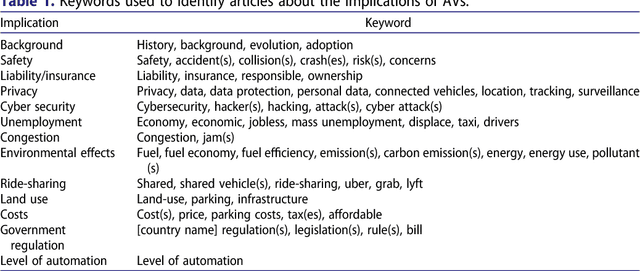

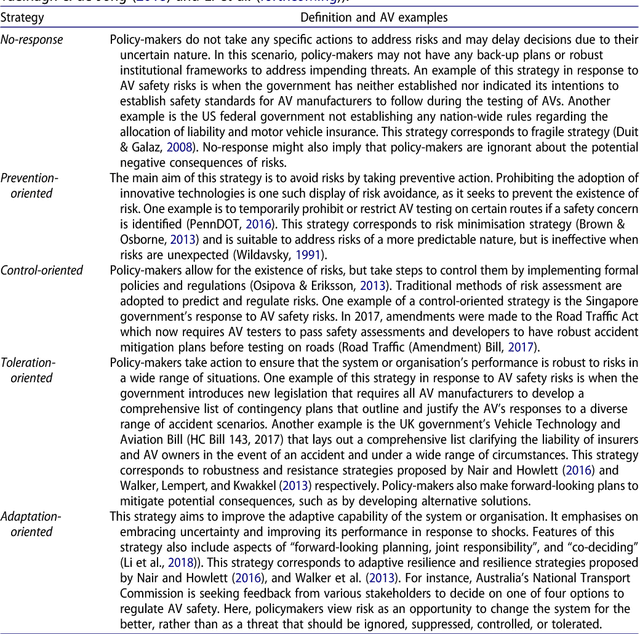

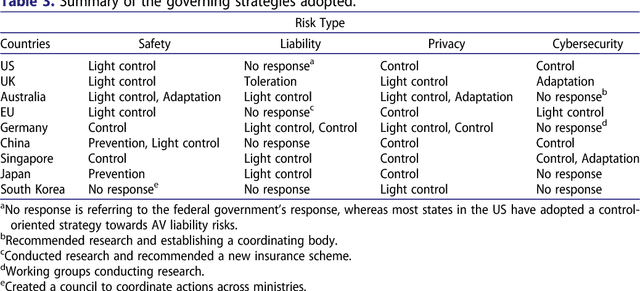

Governing autonomous vehicles: emerging responses for safety, liability, privacy, cybersecurity, and industry risks

Jul 16, 2018

Abstract: The benefits of autonomous vehicles (AVs) are widely acknowledged, but there are concerns about the extent of these benefits and about AV risks and unintended consequences. In this article, we first examine AVs and the different categories of technological risks associated with them. We then explore strategies that can be adopted to address these risks, and examine emerging responses by governments for addressing AV risks. Our analyses reveal that, thus far, governments have in most instances avoided stringent measures in order to promote AV development, and the majority of responses are non-binding and focus on creating councils or working groups to better explore AV implications. The US has been active in introducing legislation to address issues related to privacy and cybersecurity. The UK and Germany, in particular, have enacted laws to address liability issues; other countries mostly acknowledge these issues but have yet to implement specific strategies. To address privacy and cybersecurity risks, strategies ranging from the introduction or amendment of non-AV-specific legislation to the creation of working groups have been adopted. Much less attention has been paid to issues such as environmental and employment risks, although a few governments have begun programmes to retrain workers who might be negatively affected.
