Anurag Sanyal

Automatic Rendering of Building Floor Plan Images from Textual Descriptions in English

Nov 29, 2018

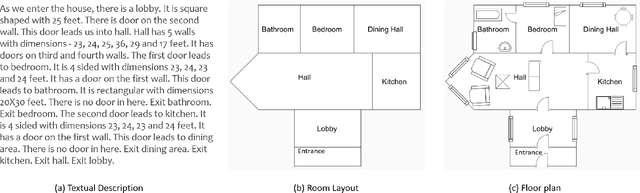

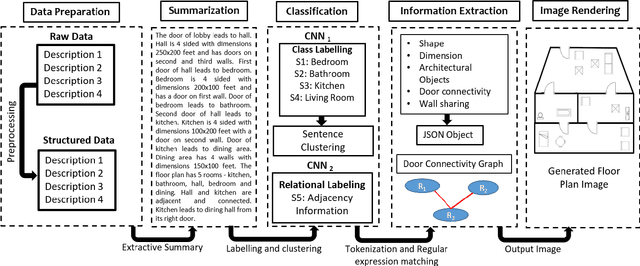

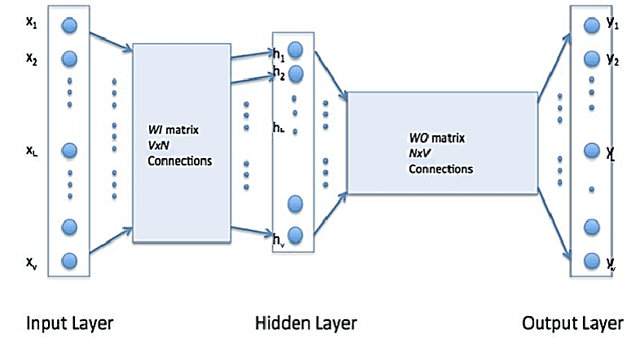

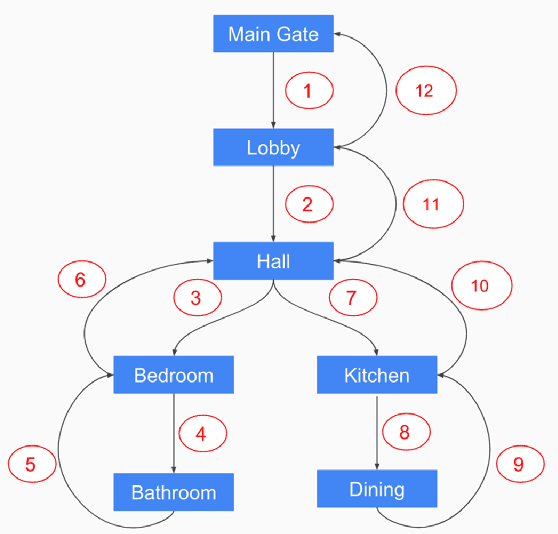

Abstract: Human beings can understand a natural language description and imagine a corresponding visual scene. For example, given a description of the interior of a house, we can imagine its structure and the arrangement of its furniture. Automatic synthesis of real-world images from text descriptions has been explored in the computer vision community. However, no such attempt has been made for document images such as floor plans. Floor plan synthesis from sketches, as well as from data-driven models, has been proposed earlier. Ours is the first attempt to automatically render building floor plan images from textual descriptions. Here, the input is a natural language description of the internal structure and furniture arrangement of a house, and the output is the corresponding 2D floor plan image. We experimented on publicly available benchmark floor plan datasets and were able to render realistic synthesized floor plan images from descriptions written in English.
