Anthony Vento

A Penalty Approach for Normalizing Feature Distributions to Build Confounder-Free Models

Jul 11, 2022

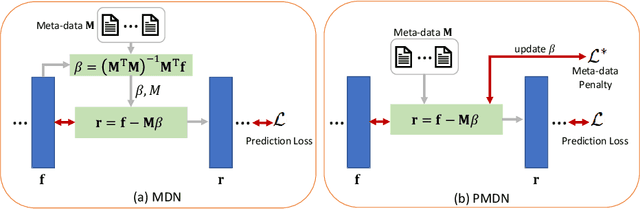

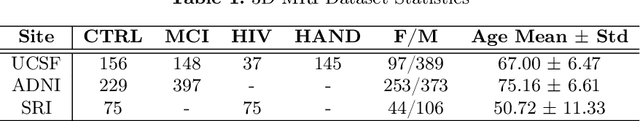

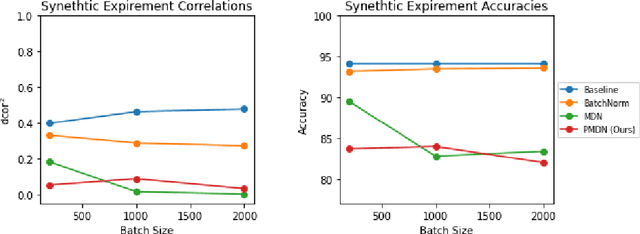

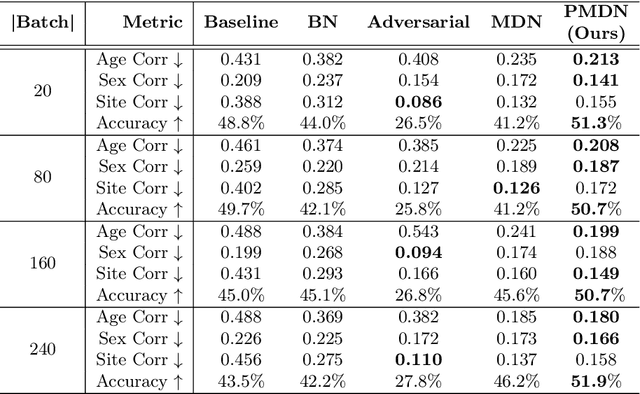

Abstract: Translating machine learning algorithms into clinical applications requires addressing challenges related to interpretability, such as accounting for the effect of confounding variables (or metadata). Confounding variables affect the relationship between input training data and target outputs. When we train a model on such data, confounding variables will bias the distribution of the learned features. A recent promising solution, MetaData Normalization (MDN), estimates the linear relationship between the metadata and each feature based on a non-trainable closed-form solution. However, this estimation is confined by the sample size of a mini-batch and thereby may cause the approach to be unstable during training. In this paper, we extend the MDN method by applying a Penalty approach (referred to as PMDN). We cast the problem into a bi-level nested optimization problem. We then approximate this optimization problem using a penalty method so that the linear parameters within the MDN layer are trainable and learned on all samples. This enables PMDN to be plugged into any architecture, even those unfit to run batch-level operations, such as transformers and recurrent models. We show improvement in model accuracy and greater independence from confounders using PMDN over MDN in a synthetic experiment and a multi-label, multi-site dataset of magnetic resonance images (MRIs).
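
The closed-form step the abstract attributes to MDN can be illustrated with a minimal sketch: fit an ordinary least-squares model from the metadata to each feature, then subtract the confounder component. This is not the authors' code and it is not the trainable PMDN layer. The function name `residualize`, the single synthetic confounder `age`, and the choice to fit on the whole dataset rather than per mini-batch are all assumptions made for illustration.

```python
import numpy as np

def residualize(features, confounders):
    """Remove the least-squares linear effect of confounders from each feature column.

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    n = features.shape[0]
    # Design matrix: an intercept column followed by the confounder columns.
    X = np.column_stack([np.ones(n), confounders])
    # Closed-form least-squares fit, one coefficient vector per feature column.
    beta, *_ = np.linalg.lstsq(X, features, rcond=None)
    # Subtract only the confounder contribution; the intercept is kept.
    return features - X[:, 1:] @ beta[1:]

# Synthetic data (assumed): four features that all depend linearly on "age".
rng = np.random.default_rng(0)
age = rng.normal(size=(256, 1))
clean = rng.normal(size=(256, 4))
features = clean + 2.0 * age

residual = residualize(features, age)
corr_before = np.corrcoef(features[:, 0], age[:, 0])[0, 1]
corr_after = np.corrcoef(residual[:, 0], age[:, 0])[0, 1]
print(f"correlation with age before: {corr_before:.3f}, after: {corr_after:.2e}")
```

In this sketch the fit uses all samples at once. The abstract's concern is that the same estimate computed on a small mini-batch can be noisy, which is the motivation it gives for making the linear parameters trainable.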

MetaHDR: Model-Agnostic Meta-Learning for HDR Image Reconstruction

Mar 20, 2021

Abstract: Capturing scenes with a high dynamic range is crucial to reproducing images that appear similar to those seen by the human visual system. Despite progress in developing data-driven deep learning approaches for converting low dynamic range images to high dynamic range images, existing approaches are limited by the assumption that all conversions are governed by the same nonlinear mapping. To address this problem, we propose "Model-Agnostic Meta-Learning for HDR Image Reconstruction" (MetaHDR), which applies meta-learning to the LDR-to-HDR conversion problem using existing HDR datasets. Our key novelty is the reinterpretation of LDR-to-HDR conversion scenes as independently sampled tasks from a common LDR-to-HDR conversion task distribution. Naturally, we use a meta-learning framework that learns a set of meta-parameters which capture the common structure consistent across all LDR-to-HDR conversion tasks. Finally, we perform experimentation with MetaHDR to demonstrate its capacity to tackle challenging LDR-to-HDR image conversions. Code and pretrained models are available at https://github.com/edwin-pan/MetaHDR.
