António Ramires

LoopNet: Musical Loop Synthesis Conditioned On Intuitive Musical Parameters

May 21, 2021

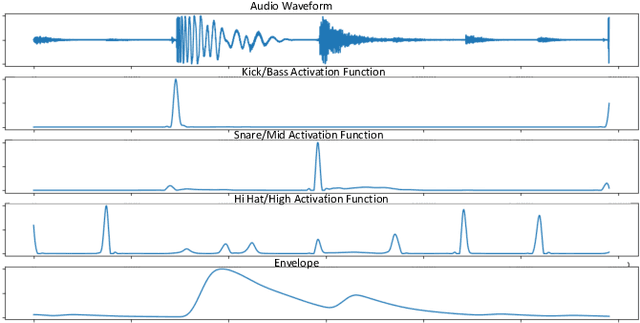

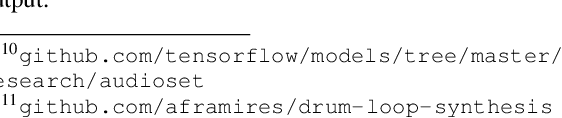

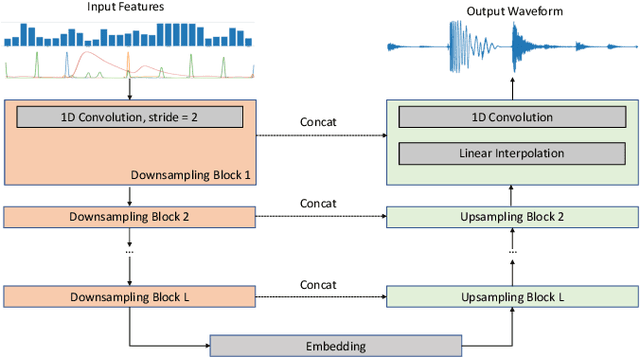

Abstract: Loops, seamlessly repeatable musical segments, are a cornerstone of modern music production. Contemporary artists often mix and match various sampled or pre-recorded loops based on musical criteria such as rhythm, harmony and timbral texture to create compositions. Taking such criteria into account, we present LoopNet, a feed-forward generative model for creating loops conditioned on intuitive parameters. We leverage Music Information Retrieval (MIR) models as well as a large collection of public loop samples in our study and use the Wave-U-Net architecture to map control parameters to audio. We also evaluate the quality of the generated audio and propose intuitive controls for composers to map the ideas in their minds to an audio loop.

Neural Percussive Synthesis Parameterised by High-Level Timbral Features

Nov 25, 2019

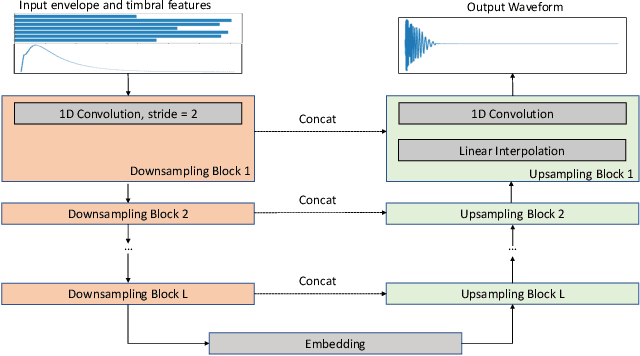

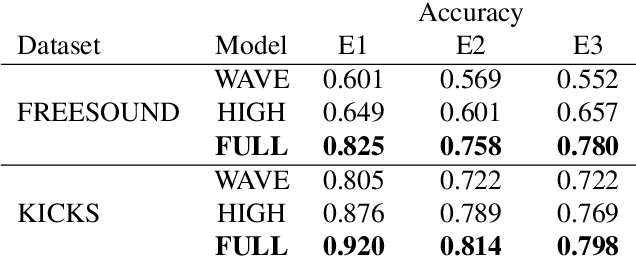

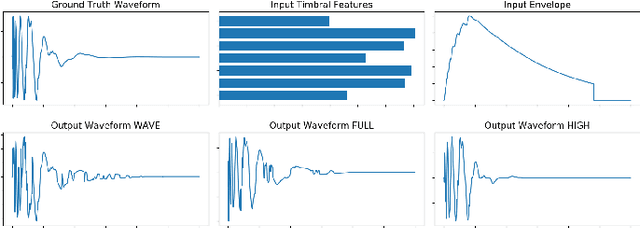

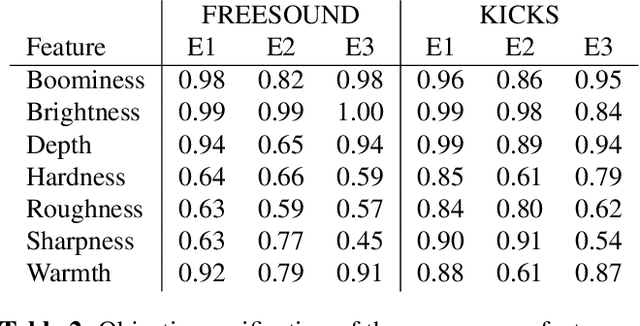

Abstract: We present a deep neural network-based methodology for synthesising percussive sounds with control over high-level timbral characteristics of the sounds. This approach allows for intuitive control of a synthesizer, enabling the user to shape sounds without extensive knowledge of signal processing. We use a feedforward convolutional neural network-based architecture, which is able to map input parameters to the corresponding waveform. We propose two datasets to evaluate our approach, one in a restrictive context and one covering a broader spectrum of sounds. The timbral features used as parameters are taken from recent literature in signal processing. We also use these features for evaluation and validation of the presented model, to ensure that changing the input parameters produces a congruent waveform with the desired characteristics. Finally, we evaluate the quality of the output sound using a subjective listening test. We provide sound examples and the system's source code for reproducibility.
