Ankur Mani

Learning Optimal Features via Partial Invariance

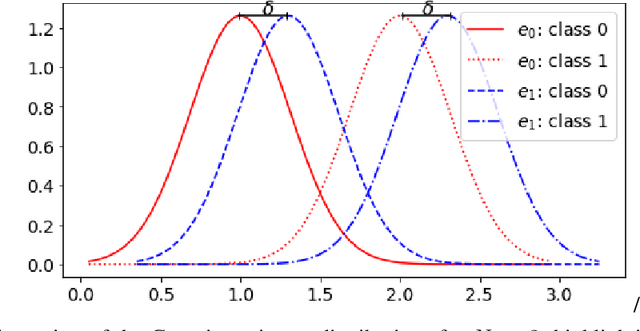

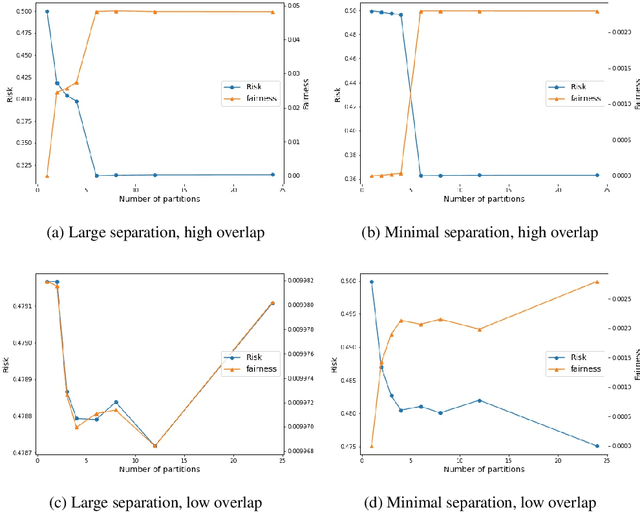

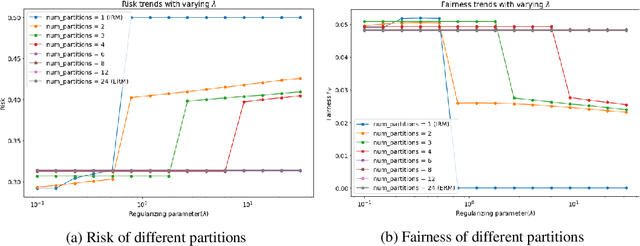

Jan 28, 2023

Abstract: Learning models that are robust to test-time distribution shifts is a key concern in domain generalization, and in the wider context of their real-life applicability. Invariant Risk Minimization (IRM) is one particular framework that aims to learn deep invariant features from multiple domains and has subsequently led to further variants. A key assumption for the success of these methods requires that the underlying causal mechanisms/features remain invariant across domains and the true invariant features be sufficient to learn the optimal predictor. In practical problem settings, these assumptions are often not satisfied, which leads to IRM learning a sub-optimal predictor for that task. In this work, we propose the notion of partial invariance as a relaxation of the IRM framework. Under our problem setting, we first highlight the sub-optimality of the IRM solution. We then demonstrate how partitioning the training domains, assuming access to some meta-information about the domains, can help improve the performance of invariant models via partial invariance. Finally, we conduct several experiments, both in linear settings as well as with classification tasks in language and images with deep models, which verify our conclusions.

Balancing Fairness and Robustness via Partial Invariance

Dec 24, 2021

Abstract: The Invariant Risk Minimization (IRM) framework aims to learn invariant features from a set of environments for solving the out-of-distribution (OOD) generalization problem. The underlying assumption is that the causal components of the data generating distributions remain constant across the environments or alternately, the data "overlaps" across environments to find meaningful invariant features. Consequently, when the "overlap" assumption does not hold, the set of truly invariant features may not be sufficient for optimal prediction performance. Such cases arise naturally in networked settings and hierarchical data-generating models, wherein the IRM performance becomes suboptimal. To mitigate this failure case, we argue for a partial invariance framework. The key idea is to introduce flexibility into the IRM framework by partitioning the environments based on hierarchical differences, while enforcing invariance locally within the partitions. We motivate this framework in classification settings with causal distribution shifts across environments. Our results show the capability of the partial invariant risk minimization to alleviate the trade-off between fairness and risk in certain settings.
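
The idea of partitioning environments and enforcing invariance only within each partition can be illustrated with a small sketch. This is not the authors' implementation: it assumes a linear predictor with squared loss, uses the IRMv1-style penalty (the squared gradient of the risk with respect to a scalar dummy multiplier fixed at 1), and the names `partition_by_meta`, `partial_irm_objective`, the threshold rule, and the toy data are all hypothetical choices made for illustration.

```python
from statistics import fmean


def predict(theta, X):
    """Linear predictions: one dot product per row of X."""
    return [sum(w * x for w, x in zip(theta, row)) for row in X]


def irmv1_penalty(preds, targets):
    """Squared gradient of the mean squared error with respect to a
    scalar multiplier w applied to the predictions, evaluated at w = 1."""
    grad = fmean([2.0 * (p - t) * p for p, t in zip(preds, targets)])
    return grad ** 2


def partition_by_meta(meta, threshold):
    """Hypothetical partition rule: split environment names into two
    groups by a scalar piece of meta-information per environment."""
    low = [name for name, m in meta.items() if m < threshold]
    high = [name for name, m in meta.items() if m >= threshold]
    return [group for group in (low, high) if group]


def partial_irm_objective(theta, envs, group, lam=1.0):
    """Mean of (risk + lam * penalty) over the environments in ONE
    partition, so invariance is only enforced locally."""
    terms = []
    for name in group:
        X, y = envs[name]
        preds = predict(theta, X)
        risk = fmean([(p - t) ** 2 for p, t in zip(preds, y)])
        terms.append(risk + lam * irmv1_penalty(preds, y))
    return fmean(terms)


# Toy data (made up): e0 and e2 follow y = x, e1 follows y = 2x.
envs = {
    "e0": ([[1.0], [2.0]], [1.0, 2.0]),
    "e1": ([[1.0], [2.0]], [2.0, 4.0]),
    "e2": ([[3.0], [4.0]], [3.0, 4.0]),
}
meta = {"e0": 0.1, "e1": 0.9, "e2": 0.2}

groups = partition_by_meta(meta, threshold=0.5)
for group in groups:
    print(group, partial_irm_objective([1.0], envs, group))
```

In a full training loop, each partition would get its own parameters, fitted by minimizing its own objective. Standard IRM corresponds to the special case of a single partition containing every environment.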
