Ankuj Arora

A Review on Learning Planning Action Models for Socio-Communicative HRI

Oct 22, 2018

Abstract: For social robots to come into widespread use in companionship, caretaking and domestic help, they must be capable of demonstrating social intelligence. To be acceptable, they must exhibit socio-communicative skills. Classic approaches that program HRI from observed human-human interactions fail to capture the subtlety of multimodal interactions as well as the key structural differences between robots and humans. The former arises from the difficulty of quantifying and coding multimodal behaviours, the latter from the difference in degrees of freedom between a robot and a human. However, reverse engineering the robot's underlying behavioral blueprint from multimodal HRI traces seems an option worth exploring. In this spirit, the entire HRI can be seen as a sequence of exchanges of speech acts between the robot and the human, each act treated as an action, bearing in mind that the entire sequence is goal-driven. The interaction can thus be treated as a sequence of actions propelling it from its initial state to its goal state, known as a plan in AI planning. In the same domain, the action sequence that stems from plan execution can be represented as a trace. AI techniques such as machine learning can be used to learn behavioral models (known in AI as symbolic action models), intended to be reusable for AI planning, from these multimodal traces. This article reviews recent machine learning techniques for learning planning action models that can be applied to HRI with the intent of making robots socio-communicative.

* Workshop on Affect, Artificial Compagnon and Interaction, 2016

Action Model Acquisition using LSTM

Oct 03, 2018

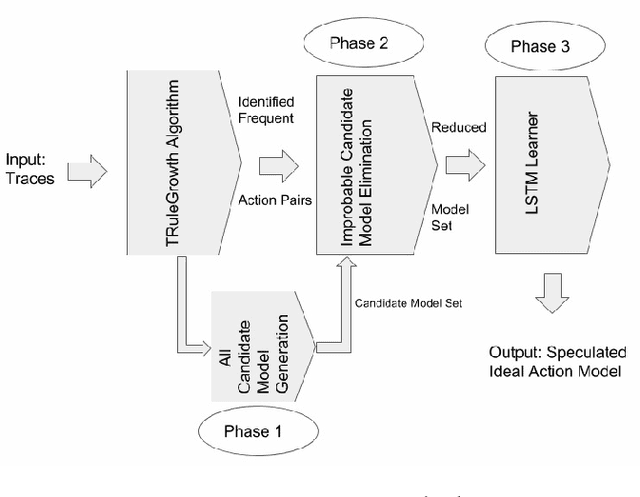

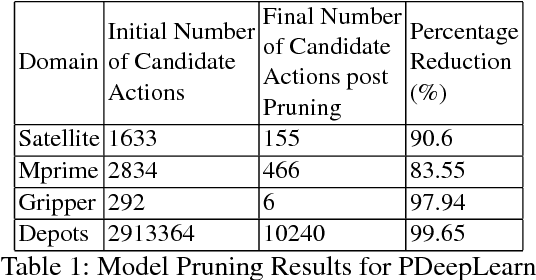

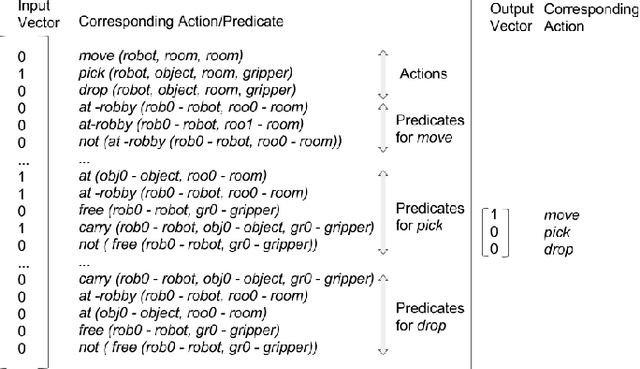

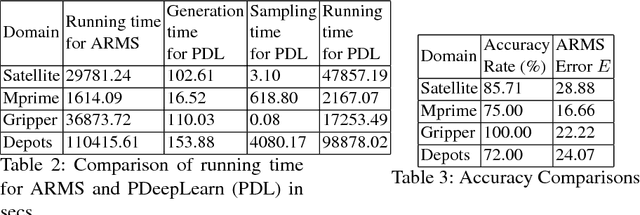

Abstract: In the field of Automated Planning and Scheduling (APS), intelligent agents require an action model (blueprints of actions whose interleaved execution effects transitions of the system state) in order to plan and solve real-world problems. It is, however, becoming increasingly cumbersome to codify this model, and more efficient to learn it from observed plan execution sequences (training data). While the underlying objective is to subsequently plan from the learnt model, most approaches fall short, because anything less than a flawless reconstruction of the underlying model renders it unusable in certain domains. This work presents a novel approach using long short-term memory (LSTM) techniques for the acquisition of the underlying action model. We use the sequence labelling capabilities of LSTMs to isolate, from an exhaustive model set, a model identical to the one responsible for producing the training data. This ability to isolate the exact model is what makes the approach effective.
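To make the terms "action model", "plan" and "trace" concrete, here is a minimal sketch in Python. It assumes a STRIPS-style representation (preconditions, add effects, delete effects), which the abstracts do not specify. The `Action` class, the `execute` helper and the `greet` / `ask_name` actions are hypothetical names invented for illustration. They are not taken from either paper, and the LSTM-based learning step is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical STRIPS-style action blueprint (an assumption, not the papers' format)."""
    name: str
    preconditions: frozenset
    add_effects: frozenset
    del_effects: frozenset

    def applicable(self, state):
        # An action can fire only if all its preconditions hold in the state.
        return self.preconditions <= state

    def apply(self, state):
        # Executing the action removes its delete effects and adds its add effects.
        if not self.applicable(state):
            raise ValueError(f"{self.name} is not applicable in this state")
        return (state - self.del_effects) | self.add_effects


def execute(plan, initial_state):
    """Run a plan and return its trace as (state_before, action_name, state_after) triples."""
    trace = []
    state = frozenset(initial_state)
    for action in plan:
        next_state = action.apply(state)
        trace.append((state, action.name, next_state))
        state = next_state
    return trace


# Two illustrative speech-act-like actions (hypothetical).
greet = Action("greet", frozenset(), frozenset({"greeted"}), frozenset())
ask_name = Action("ask_name", frozenset({"greeted"}), frozenset({"asked_name"}), frozenset())

trace = execute([greet, ask_name], set())
final_state = trace[-1][2]
```

In this framing, learning an action model means recovering the `preconditions`, `add_effects` and `del_effects` of each action from traces like the one returned by `execute`, rather than writing them by hand.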
