Ankita Samaddar

Out-of-Distribution Detection for Neurosymbolic Autonomous Cyber Agents

Dec 03, 2024

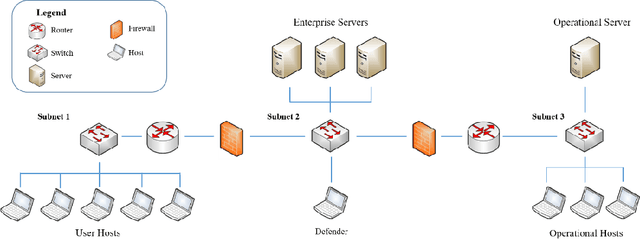

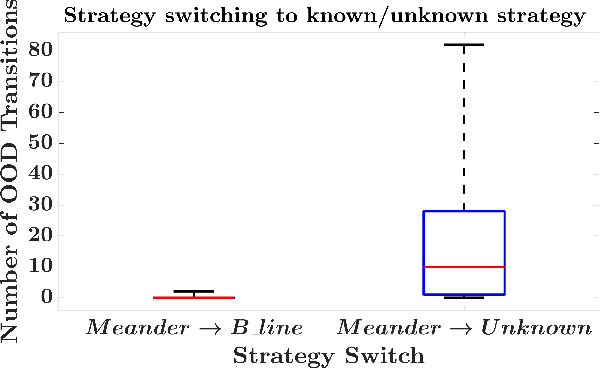

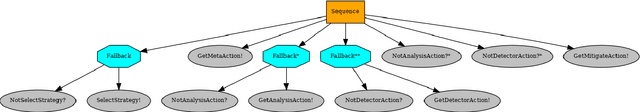

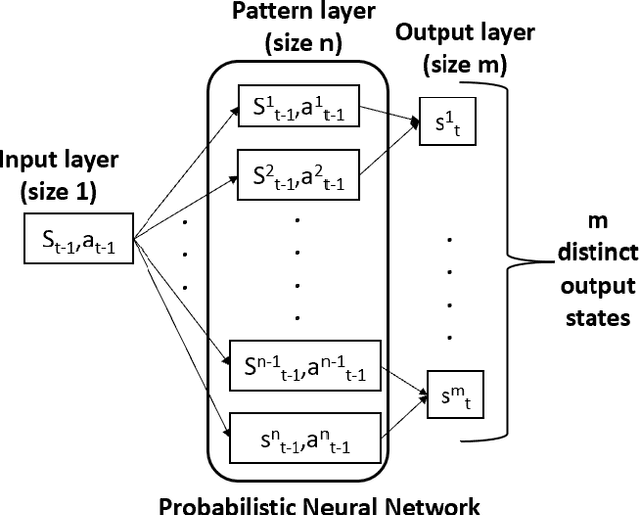

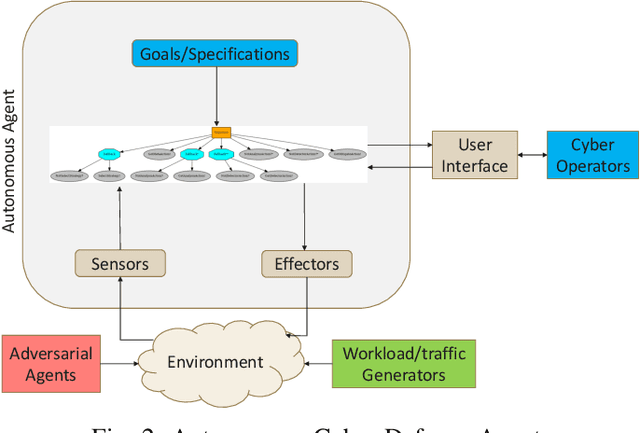

Abstract: Autonomous cyber-defense systems take advantage of modern defense techniques by adopting intelligent agents with conventional and learning-enabled components. These agents are trained via reinforcement learning (RL) algorithms and can learn, adapt to, reason about, and deploy security rules to defend networked computer systems while maintaining critical operational workflows. However, the knowledge available during training about the state of the operational network and its environment may be limited. To be trustworthy, the agents must reliably detect situations they cannot handle and hand them over to cyber experts. In this work, we develop an out-of-distribution (OOD) monitoring algorithm that uses a Probabilistic Neural Network (PNN) to detect anomalous or OOD situations for RL-based agents with discrete states and discrete actions. To demonstrate the effectiveness of the proposed approach, we integrate the OOD monitoring algorithm with a neurosymbolic autonomous cyber agent that uses behavior trees with learning-enabled components. We evaluate the approach in a simulated cyber environment under different adversarial strategies. Experimental results over a large number of episodes illustrate the overall efficiency of the proposed approach.
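To make the idea of OOD monitoring over discrete states and actions concrete, the following is a minimal sketch, not the paper's algorithm. It assumes one common reading of a PNN as a Parzen-window density estimator: every stored training pattern contributes a Gaussian kernel, and a query with low total support is flagged as OOD. The names `pnn_score` and `is_ood`, the Hamming-distance kernel, the threshold, and the toy training tuples are all hypothetical choices made for illustration.

```python
import math
from typing import Sequence, Tuple

Pattern = Tuple[int, ...]  # hypothetical encoding: discrete state features plus action


def pnn_score(query: Pattern, patterns: Sequence[Pattern], sigma: float = 0.5) -> float:
    """Parzen-window estimate: average Gaussian kernel over stored patterns.

    The kernel is applied to the Hamming distance, which is an assumption
    chosen here because the features are discrete.
    """
    total = 0.0
    for p in patterns:
        d = sum(1 for a, b in zip(query, p) if a != b)  # Hamming distance
        total += math.exp(-(d * d) / (2.0 * sigma * sigma))
    return total / len(patterns)


def is_ood(query: Pattern, patterns: Sequence[Pattern],
           threshold: float = 0.1, sigma: float = 0.5) -> bool:
    """Flag the query as OOD when its estimated support falls below the threshold."""
    return pnn_score(query, patterns, sigma) < threshold


# Hypothetical training patterns: (host_state, alert_level, action)
train = [(0, 1, 2), (0, 1, 2), (0, 0, 1), (1, 1, 2)]

seen = is_ood((0, 1, 2), train)    # pattern that appears in training
unseen = is_ood((3, 3, 0), train)  # pattern that differs in every feature
```

In a deployed monitor the threshold and `sigma` would have to be calibrated on held-out in-distribution episodes; the values here are placeholders.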

Designing Robust Cyber-Defense Agents with Evolving Behavior Trees

Oct 21, 2024

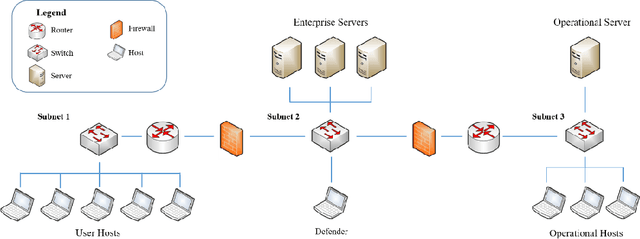

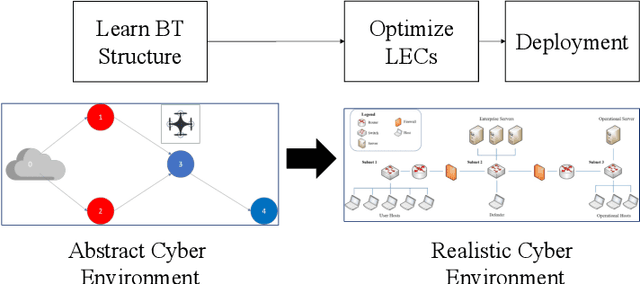

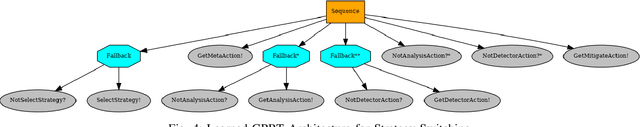

Abstract: Modern network defense can benefit from the use of autonomous systems, offloading tedious and time-consuming work to agents with standard and learning-enabled components. These agents, operating on critical network infrastructure, need to be robust and trustworthy to ensure defense against adaptive cyber-attackers and, simultaneously, provide explanations for their actions and network activity. However, learning-enabled components typically use models, such as deep neural networks, whose high-level decision-making is not transparent, which leads to assurance challenges. Additionally, cyber-defense agents must reactively execute complex, long-term defense tasks that involve coordinating multiple interdependent subtasks. Behavior trees are known to be successful in modelling interpretable, reactive, and modular agent policies with learning-enabled components. In this paper, we develop an approach to design autonomous cyber-defense agents using behavior trees with learning-enabled components, which we refer to as Evolving Behavior Trees (EBTs). We learn the structure of an EBT with a novel abstract cyber environment and optimize learning-enabled components for deployment. The learning-enabled components are optimized for adapting to various cyber-attacks and deploying security mechanisms. The learned EBT structure is evaluated in a simulated cyber environment, where it effectively mitigates threats and enhances network visibility. For deployment, we develop a software architecture for evaluating EBT-based agents in computer network defense scenarios. Our results demonstrate that the EBT-based agent is robust to adaptive cyber-attacks and provides high-level explanations for interpreting its decisions and actions.
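As a rough illustration of how a behavior tree can host a learning-enabled component, here is a minimal generic sketch; it is not the EBT architecture from the paper. It uses the standard sequence and selector semantics of behavior trees, and a leaf that defers to a policy. The `toy_policy` lookup stands in for a trained RL policy, and the blackboard keys and action names (`compromised`, `restore`, `monitor`) are hypothetical.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


class Node:
    def tick(self, bb: Dict) -> Status:
        raise NotImplementedError


class Condition(Node):
    """Leaf that succeeds when a predicate over the blackboard holds."""
    def __init__(self, pred: Callable[[Dict], bool]):
        self.pred = pred

    def tick(self, bb: Dict) -> Status:
        return Status.SUCCESS if self.pred(bb) else Status.FAILURE


class PolicyAction(Node):
    """Leaf that asks a policy for an action and records it on the blackboard."""
    def __init__(self, policy: Callable[[Dict], str]):
        self.policy = policy

    def tick(self, bb: Dict) -> Status:
        bb["last_action"] = self.policy(bb)
        return Status.SUCCESS


class Sequence(Node):
    """Ticks children in order; stops at the first child that does not succeed."""
    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self, bb: Dict) -> Status:
        for child in self.children:
            if child.tick(bb) != Status.SUCCESS:
                return Status.FAILURE
        return Status.SUCCESS


class Selector(Node):
    """Ticks children in order; stops at the first child that succeeds."""
    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self, bb: Dict) -> Status:
        for child in self.children:
            if child.tick(bb) == Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def toy_policy(bb: Dict) -> str:
    # Stand-in for a learned policy (assumption): always choose "restore".
    return "restore"


tree = Selector([
    Sequence([Condition(lambda bb: bb.get("compromised", False)),
              PolicyAction(toy_policy)]),
    PolicyAction(lambda bb: "monitor"),  # fallback branch
])

attacked = {"compromised": True}
quiet = {"compromised": False}
status_attacked = tree.tick(attacked)
status_quiet = tree.tick(quiet)
```

The branch that fired is visible from the tree structure itself, which is the kind of high-level explanation the abstract attributes to behavior trees.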
