Anirudh Dash

Exploring the Limitations of Structured Orthogonal Dictionary Learning

Jan 25, 2025

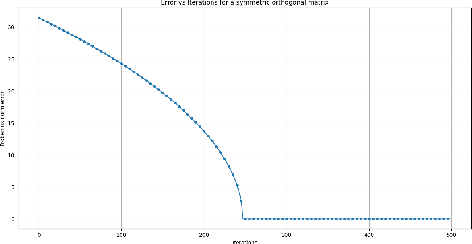

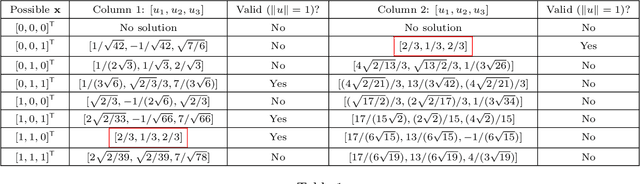

Abstract: This work is motivated by recent applications of structured dictionary learning, in particular when the dictionary is assumed to be the product of a few Householder atoms. We investigate the following two problems: 1) How do we approximate an orthogonal matrix $\mathbf{V}$ with a product of a specified number of Householder matrices, and 2) How many samples are required to learn a structured (Householder) dictionary from data? For 1), we discuss an algorithm that decomposes $\mathbf{V}$ as a product of a specified number of Householder matrices. We see that the algorithm outputs the decomposition when it exists, and we give bounds on its approximation error when such a decomposition does not exist. For 2), given data $\mathbf{Y}=\mathbf{HX}$, we show that, assuming a binary coefficient matrix $\mathbf{X}$, the structured (Householder) dictionary learning problem can be solved with just $2$ samples (columns) in $\mathbf{Y}$.
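For orientation, the sketch below factors an orthogonal matrix into Householder reflections using the textbook column-by-column construction, which needs at most $n$ reflections for an $n \times n$ matrix. It is not the algorithm from the abstract, which targets a specified (possibly smaller) number of factors; the function names `householder` and `decompose_orthogonal` are illustrative assumptions.

```python
import numpy as np

def householder(u):
    """Return the reflection H = I - 2 u u^T for a nonzero vector u."""
    u = u / np.linalg.norm(u)
    return np.eye(len(u)) - 2.0 * np.outer(u, u)

def decompose_orthogonal(V, tol=1e-12):
    """Textbook factorization of an orthogonal V into at most n reflections.

    Step k reflects column k of the running matrix onto the basis vector e_k.
    Hypothetical helper for illustration, not the paper's algorithm.
    """
    n = V.shape[0]
    A = V.copy()
    reflectors = []
    for k in range(n):
        e_k = np.zeros(n)
        e_k[k] = 1.0
        d = A[:, k] - e_k
        if np.linalg.norm(d) > tol:   # column already equals e_k: no reflection needed
            H = householder(d)
            A = H @ A                 # earlier columns e_0..e_{k-1} are left unchanged
            reflectors.append(H)
    return reflectors

# Random orthogonal test matrix from a QR factorization.
rng = np.random.default_rng(0)
V, _ = np.linalg.qr(rng.standard_normal((5, 5)))

Hs = decompose_orthogonal(V)

# Each H is its own inverse, so V = H_1 H_2 ... H_k.
product = np.eye(5)
for H in Hs:
    product = product @ H
```

Multiplying the returned reflections in order reproduces `V` up to floating-point error, which is the exact-decomposition case; approximating `V` with fewer factors is the harder problem the abstract addresses.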

Fast Structured Orthogonal Dictionary Learning using Householder Reflections

Sep 13, 2024

Abstract: In this paper, we propose and investigate algorithms for the structured orthogonal dictionary learning problem. First, we investigate the case when the dictionary is a Householder matrix. We give sample complexity results and show theoretically guaranteed approximate recovery (in the $\ell_{\infty}$ sense) with optimal computational complexity. We then attempt to generalize these techniques when the dictionary is a product of a few Householder matrices. We numerically validate these techniques in the sample-limited setting to show performance similar to or better than existing techniques while having much improved computational complexity.
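One general reason structured dictionaries of this kind are cheap to work with is that a product of $m$ Householder reflections can be applied to an $n \times p$ matrix in $O(mnp)$ operations without forming any $n \times n$ matrix. The sketch below illustrates that standard fact only; it is not one of the learning algorithms from the abstract, and `apply_householder_product` is a hypothetical name.

```python
import numpy as np

def apply_householder_product(us, Y):
    """Compute (H_1 H_2 ... H_m) Y with H_i = I - 2 u_i u_i^T, never forming H_i.

    Each reflection is a rank-one update costing O(np), so the total is O(mnp).
    """
    Z = np.array(Y, dtype=float)
    for u in reversed(us):            # the rightmost factor H_m acts first
        u = u / np.linalg.norm(u)
        Z = Z - 2.0 * np.outer(u, u @ Z)
    return Z

rng = np.random.default_rng(1)
n, p, m = 6, 4, 3
us = [rng.standard_normal(n) for _ in range(m)]
Y = rng.standard_normal((n, p))

# Dense reference: build D = H_1 H_2 ... H_m explicitly for comparison.
D = np.eye(n)
for u in us:
    u = u / np.linalg.norm(u)
    D = D @ (np.eye(n) - 2.0 * np.outer(u, u))

fast = apply_householder_product(us, Y)
dense = D @ Y
```

Both routes give the same result; the rank-one form simply avoids the $O(n^2 p)$ dense product when $m$ is small.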

Column Bound for Orthogonal Matrix Factorization

May 21, 2024

Abstract: This article explores the intersection of the Coupon Collector's Problem and the Orthogonal Matrix Factorization (OMF) problem. Specifically, we derive bounds on the minimum number of columns $p$ (in $\mathbf{X}$) required for the OMF problem to be tractable, using insights from the Coupon Collector's Problem. We establish a theorem relating the sparsity of the matrix $\mathbf{X}$ to the number of columns $p$ required to recover the matrices $\mathbf{V}$ and $\mathbf{X}$ in the OMF problem. We show that the minimum number of columns $p$ required is given by $p = \Omega \left(\max \left\{ \frac{n}{1 - (1 - \theta)^n}, \frac{1}{\theta} \log n \right\} \right)$, where $\theta$ is the parameter of the i.i.d. Bernoulli distribution from which the sparsity model of the matrix $\mathbf{X}$ is derived.
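To get a feel for which term dominates, the expression inside the $\Omega(\cdot)$ can be evaluated directly. The sketch below ignores the hidden constant and assumes the natural logarithm, neither of which the abstract specifies; `column_bound_scale` is a hypothetical helper name.

```python
import math

def column_bound_scale(n, theta):
    """Evaluate max{ n / (1 - (1 - theta)^n), (1 / theta) * log n }.

    This is only the scaling inside the Omega(.) bound: constants are ignored
    and the natural logarithm is an assumption.
    """
    if not 0.0 < theta <= 1.0:
        raise ValueError("theta must lie in (0, 1]")
    first = n / (1.0 - (1.0 - theta) ** n)
    second = math.log(n) / theta
    return max(first, second)

dense_case = column_bound_scale(100, 0.5)     # first term dominates
sparse_case = column_bound_scale(100, 0.001)  # second term dominates
```

For $n = 100$, a dense model ($\theta = 0.5$) is governed by the first term, which is close to $n$, while a very sparse model ($\theta = 0.001$) is governed by the $\frac{1}{\theta}\log n$ term.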

Efficient Matrix Factorization Via Householder Reflections

May 13, 2024

Abstract: Motivated by orthogonal dictionary learning problems, we propose a novel method for matrix factorization, where the data matrix $\mathbf{Y}$ is a product of a Householder matrix $\mathbf{H}$ and a binary matrix $\mathbf{X}$. First, we show that the exact recovery of the factors $\mathbf{H}$ and $\mathbf{X}$ from $\mathbf{Y}$ is guaranteed with $\Omega(1)$ columns in $\mathbf{Y}$. Next, we show approximate recovery (in the $\ell_{\infty}$ sense) can be done in polynomial time ($O(np)$) with $\Omega(\log n)$ columns in $\mathbf{Y}$. We hope the techniques in this work help in developing alternate algorithms for orthogonal dictionary learning.
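The data model in this abstract can be simulated in a few lines. The sketch below only generates $\mathbf{Y} = \mathbf{HX}$ and checks two structural facts that follow from the definition of a Householder matrix; it does not implement the recovery method. The sizes, the seed, and the Bernoulli parameter `theta` used to draw the binary matrix are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, theta = 8, 5, 0.5          # illustrative sizes and sparsity level (assumed)

# Householder matrix H = I - 2 u u^T built from a random unit vector u.
u = rng.standard_normal(n)
u /= np.linalg.norm(u)
H = np.eye(n) - 2.0 * np.outer(u, u)

# Binary coefficient matrix with i.i.d. Bernoulli(theta) entries (an assumed model).
X = (rng.random((n, p)) < theta).astype(float)
Y = H @ X

# H is symmetric and its own inverse, so a known H gives X back exactly.
X_back = np.rint(H @ Y)

# Y - X = -2 u (u^T X): every column of Y is the matching column of X shifted along u.
shift_rank = np.linalg.matrix_rank(Y - X)
```

The rank-one structure of `Y - X` is what makes the problem much more constrained than general orthogonal dictionary learning: the unknown dictionary is described by a single vector `u`.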
