Exploring the Limitations of Structured Orthogonal Dictionary Learning

Jan 25, 2025

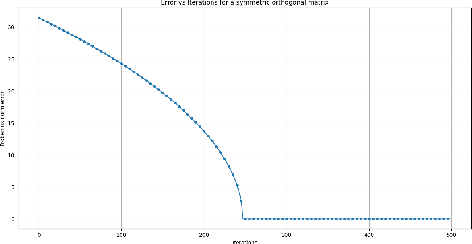

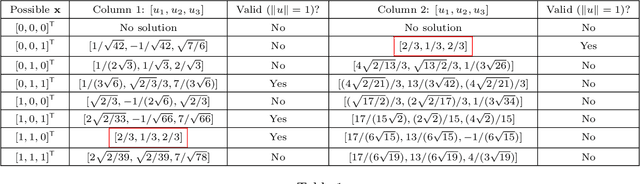

This work is motivated by recent applications of structured dictionary learning, in particular those in which the dictionary is assumed to be the product of a few Householder atoms. We investigate two problems: 1) how to approximate an orthogonal matrix $\mathbf{V}$ with a product of a specified number of Householder matrices, and 2) how many samples are required to learn a structured (Householder) dictionary from data. For 1), we discuss an algorithm that decomposes $\mathbf{V}$ as a product of a specified number of Householder matrices. The algorithm outputs the decomposition when one exists, and we give bounds on its approximation error when no such decomposition exists. For 2), given data $\mathbf{Y}=\mathbf{HX}$ with a structured (Householder) dictionary $\mathbf{H}$, we show that, under the assumption of a binary coefficient matrix $\mathbf{X}$, the structured (Householder) dictionary learning problem can be solved with just $2$ samples (columns) in $\mathbf{Y}$.
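
To make the setting concrete, the following is a minimal NumPy sketch of the data model only, not the decomposition or learning algorithm from the paper. It assumes the standard definition of a Householder matrix, $\mathbf{I} - 2\mathbf{u}\mathbf{u}^\top$ for a unit vector $\mathbf{u}$. The helper names (`householder`, `householder_product`) and the sizes `n`, `m`, `p` are illustrative choices, not taken from the source.

```python
import numpy as np


def householder(u):
    """Return the Householder reflector I - 2 u u^T for the direction u.

    The vector is normalised here, so any nonzero u is accepted.
    """
    u = np.asarray(u, dtype=float)
    u = u / np.linalg.norm(u)
    return np.eye(u.size) - 2.0 * np.outer(u, u)


def householder_product(vectors):
    """Multiply the reflectors built from `vectors`, in the given order."""
    n = len(vectors[0])
    H = np.eye(n)
    for u in vectors:
        H = H @ householder(u)
    return H


# Illustrative sizes (assumptions): dimension n, m Householder atoms, p samples.
rng = np.random.default_rng(0)
n, m, p = 6, 3, 4

# Structured dictionary: a product of m Householder atoms.
vectors = [rng.standard_normal(n) for _ in range(m)]
H = householder_product(vectors)

# Binary coefficient matrix X and observed data Y = H X.
X = rng.integers(0, 2, size=(n, p)).astype(float)
Y = H @ X

# A product of reflectors is orthogonal, so H^T H = I and column norms are preserved.
orthogonality_error = np.linalg.norm(H.T @ H - np.eye(n))
norm_gap = np.max(np.abs(np.linalg.norm(Y, axis=0) - np.linalg.norm(X, axis=0)))
print(orthogonality_error, norm_gap)
```

Both printed quantities should be at floating-point round-off level. Because each reflector has determinant $-1$, the product of `m` of them has determinant $(-1)^m$, which is one simple necessary condition on which orthogonal matrices can be written exactly with a given number of atoms.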
