Andrew Geiss

Exploring Randomly Wired Neural Networks for Climate Model Emulation

Dec 09, 2022

Abstract: Exploring the climate impacts of various anthropogenic emissions scenarios is key to making informed decisions for climate change mitigation and adaptation. State-of-the-art Earth system models can provide detailed insight into these impacts, but incur a large computational cost for each scenario. This computational burden has driven recent interest in developing cheap machine learning models for the task of climate model emulation. In this manuscript, we explore the efficacy of randomly wired neural networks for this task. We describe how they can be constructed and compare them to their standard feedforward counterparts using the ClimateBench dataset. Specifically, we replace the serially connected dense layers in multilayer perceptrons, convolutional neural networks, and convolutional long short-term memory networks with randomly wired dense layers and assess the impact on model performance for models with 1 million and 10 million parameters. We find average performance improvements of 4.2% across model complexities and prediction tasks, with substantial performance improvements of up to 16.4% in some cases. Furthermore, we find no significant difference in prediction speed between networks with standard feedforward dense layers and those with randomly wired layers. These findings indicate that randomly wired neural networks may be suitable direct replacements for traditional dense layers in many standard models.
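As a rough illustration of what "randomly wired dense layers" can mean, the sketch below wires a handful of dense layers together with a random directed acyclic graph instead of a serial chain. The abstract does not give the construction details, so everything here is an assumption made for illustration: the function names `random_dag` and `random_wired_forward`, the edge probability, summing predecessor outputs, and averaging the sink nodes are hypothetical choices, not the paper's method.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def random_dag(num_nodes, edge_prob, rng):
    """Random adjacency matrix with edges i -> j only for i < j (hence acyclic)."""
    adj = np.triu(rng.random((num_nodes, num_nodes)) < edge_prob, k=1)
    # Assumption: every node after the first needs at least one predecessor.
    for j in range(1, num_nodes):
        if not adj[:j, j].any():
            adj[rng.integers(0, j), j] = True
    return adj


def random_wired_forward(x, adj, weights, biases):
    """Evaluate dense layers in index order, which is a valid topological order."""
    num_nodes = adj.shape[0]
    outputs = [None] * num_nodes
    # Node 0 is the only node that reads the raw input.
    outputs[0] = relu(x @ weights[0] + biases[0])
    for j in range(1, num_nodes):
        preds = np.flatnonzero(adj[:j, j])
        # Assumption: predecessor outputs are combined by summation.
        aggregated = sum(outputs[i] for i in preds)
        outputs[j] = relu(aggregated @ weights[j] + biases[j])
    # Assumption: nodes without successors are averaged to form the output.
    sinks = [j for j in range(num_nodes) if not adj[j].any()]
    return np.mean([outputs[j] for j in sinks], axis=0)


rng = np.random.default_rng(0)
in_dim, width, num_nodes = 12, 16, 6
adj = random_dag(num_nodes, edge_prob=0.3, rng=rng)
weights = [rng.normal(scale=0.1, size=(in_dim if j == 0 else width, width))
           for j in range(num_nodes)]
biases = [np.zeros(width) for _ in range(num_nodes)]
y = random_wired_forward(rng.normal(size=(4, in_dim)), adj, weights, biases)
```

Because the adjacency matrix is strictly upper triangular, evaluating nodes in index order never reads an output that has not been computed yet. A serial multilayer perceptron is the special case in which each node has exactly one incoming edge from its immediate predecessor.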

Invertible CNN-Based Super Resolution with Downsampling Awareness

Nov 11, 2020

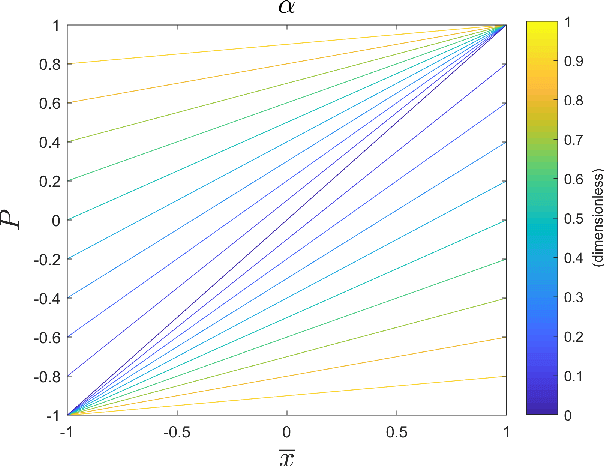

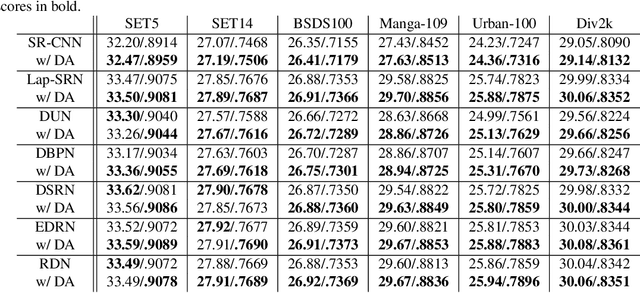

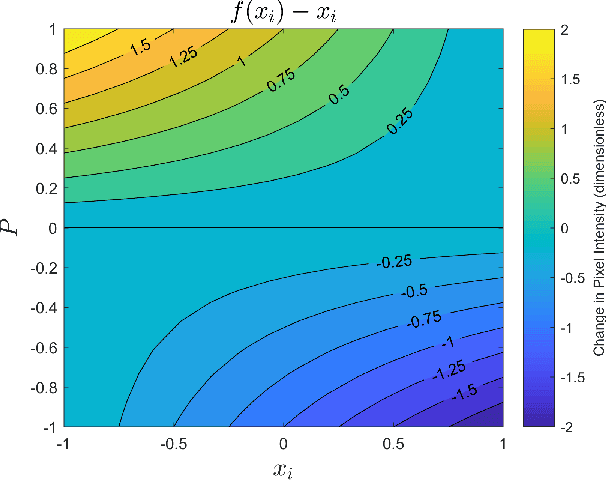

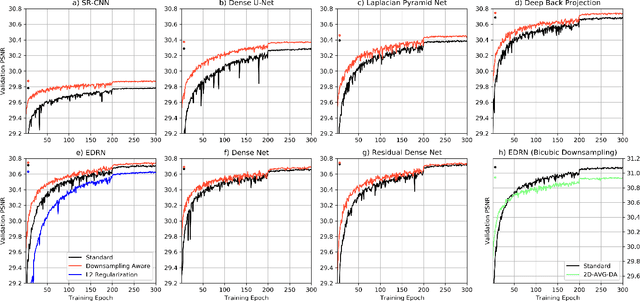

Abstract: Single image super resolution involves artificially increasing the resolution of an image. Recently, convolutional neural networks have been demonstrated as very powerful tools for this problem. These networks are typically trained by artificially degrading high-resolution images and training the neural network to reproduce the original. Because these neural networks are learning an inverse function for an image downsampling scheme, their high-resolution outputs should ideally reproduce the corresponding low-resolution input when the same downsampling scheme is applied. Historically, however, this constraint has not been explicitly and strictly imposed during training. Here, a method for "downsampling aware" super resolution networks is proposed. A differentiable operator is applied as the final output layer of the neural network that forces the downsampled output to match the low-resolution input data under 2D-average downsampling. It is demonstrated that appending this operator to a selection of state-of-the-art deep-learning-based super resolution schemes improves training time and overall performance on most of the common image super resolution benchmark datasets. In addition to this performance improvement for images, this method has potentially broad and significant impacts in the physical sciences. This scheme can be applied to data produced by medical scans, precipitation radars, gridded numerical simulations, satellite imagers, and many other sources. In such applications, the proposed method's guarantee of strict adherence to physical conservation laws is of critical importance.
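The consistency constraint described in the abstract can be illustrated with a very simple correction step. The sketch below is not the operator proposed in the paper, whose exact form the abstract does not state; it is a plain additive variant chosen for clarity, and the function names `avg_downsample` and `enforce_downsampling_consistency` are hypothetical. It shifts every block of the high-resolution output by a constant so that its 2D block average equals the matching low-resolution pixel.

```python
import numpy as np


def avg_downsample(img, factor):
    """2D-average downsampling of an (H, W) array by an integer factor."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def enforce_downsampling_consistency(high_res, low_res, factor):
    """Additive correction (an assumption, not the paper's operator).

    Each factor-by-factor block of `high_res` is shifted by the difference
    between the target low-resolution pixel and the block's current mean.
    """
    residual = low_res - avg_downsample(high_res, factor)
    # np.kron with a block of ones repeats each residual over its block.
    return high_res + np.kron(residual, np.ones((factor, factor)))


rng = np.random.default_rng(0)
low_res = rng.random((4, 4))
raw_output = rng.random((8, 8))  # stand-in for a network's unconstrained output
corrected = enforce_downsampling_consistency(raw_output, low_res, factor=2)
```

The correction uses only addition, averaging, and repetition, so it is differentiable with respect to the network output and could in principle sit at the end of a network. One limitation of this additive form is that corrected pixel values can leave the valid intensity range, which is a reason a more careful operator may be preferred in practice.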
