Andrew C. Morrison

espiownage: Tracking Transients in Steelpan Drum Strikes Using Surveillance Technology

Oct 23, 2021

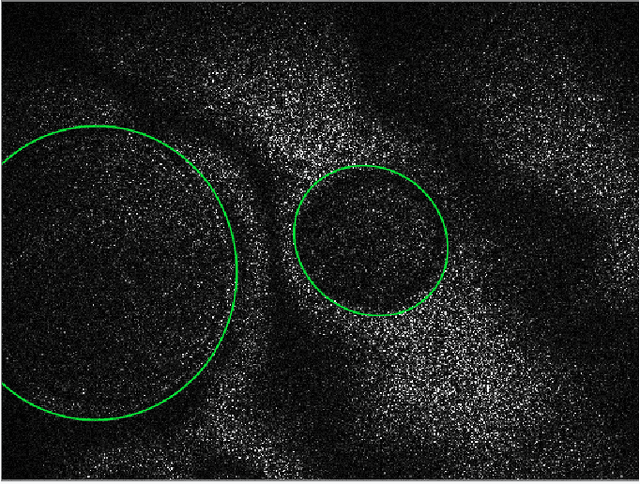

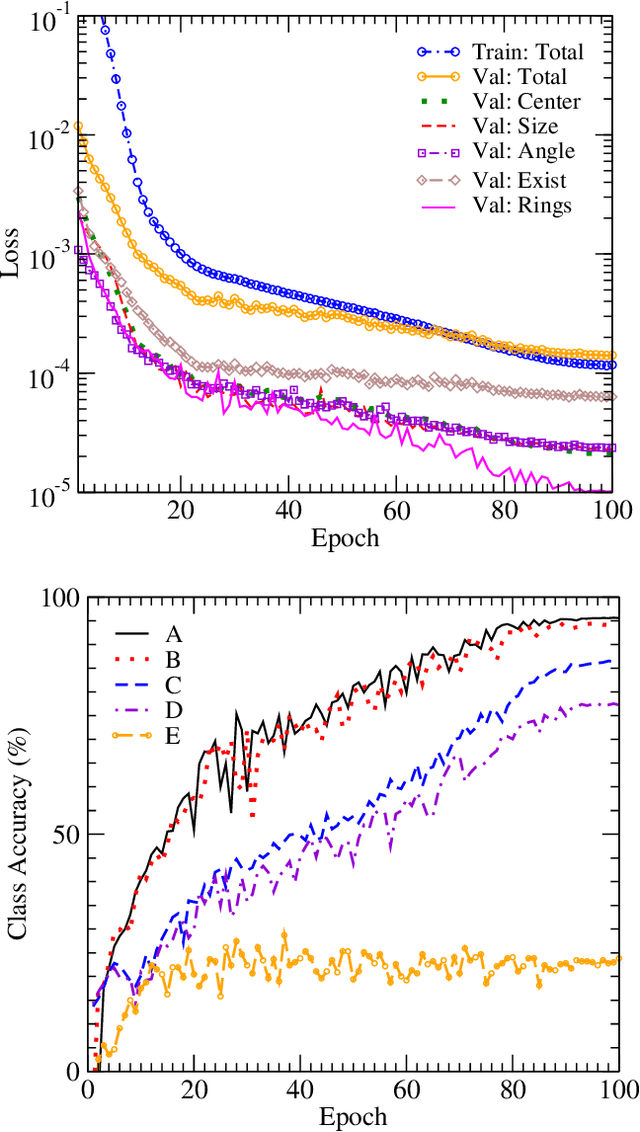

Abstract: We present an improvement in the ability to meaningfully track features in high-speed videos of Caribbean steelpan drums illuminated by Electronic Speckle Pattern Interferometry (ESPI). This is achieved through the use of up-to-date computer vision libraries for object detection and image segmentation, as well as a significant effort toward cleaning the dataset previously used to train systems for this application. Besides improving on previous metric scores by 10% or more, this project is noteworthy for introducing a segmentation-regression map for the entire drum surface, which yields interference fringe counts comparable to those obtained via object detection, and for an accelerated workflow that coordinates the data-cleaning and model-training feedback loop for rapid iteration, allowing the project to be conducted over only 18 days.

ConvNets for Counting: Object Detection of Transient Phenomena in Steelpan Drums

Feb 01, 2021

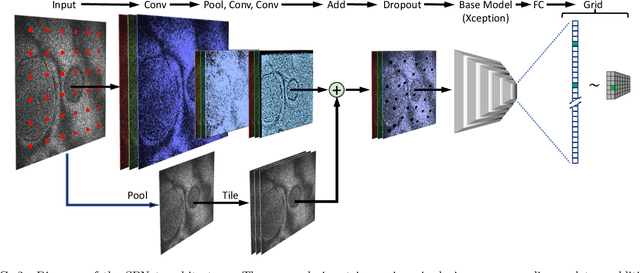

Abstract: We train an object detector built from convolutional neural networks to count interference fringes in elliptical antinode regions visible in frames of high-speed video recordings of transient oscillations in Caribbean steelpan drums illuminated by electronic speckle pattern interferometry (ESPI). The annotations provided by our model, "SPNet", are intended to contribute to the understanding of time-dependent behavior in such drums by tracking the development of sympathetic vibration modes. The system is trained on a dataset of crowdsourced human-annotated images obtained from the Zooniverse Steelpan Vibrations Project. Because the number of human-annotated images is relatively small, we also train on a large corpus of synthetic images whose visual properties have been matched to those of the real images by using a Generative Adversarial Network to perform style transfer. By applying the model to predict annotations for thousands of unlabeled video frames, we can track features and measure oscillations consistent with audio recordings of the same drum strikes. One surprising result is that the machine-annotated video frames reveal transitions between the first and second harmonics of drum notes that significantly precede the corresponding transitions in the audio recordings. As this paper primarily concerns the development of the model, deeper physical insights await its further application.