Andres Weintraub

Two Scalable Approaches for Burned-Area Mapping Using U-Net and Landsat Imagery

Nov 29, 2023

Abstract: Monitoring wildfires is an essential step in minimizing their impact on the planet and in understanding their many negative environmental, economic, and social consequences. Recent advances in remote sensing technology, combined with the increasing application of artificial intelligence methods, have improved real-time, high-resolution fire monitoring. This study explores two approaches based on the U-Net model for automating and optimizing the burned-area mapping process. Denoted 128 and AllSizes (AS), they are trained on datasets whose class balance differs because the input images are cropped to different sizes. They are then applied to Landsat imagery and time-series data from two fire-prone regions in Chile. The results obtained after improving model performance through hyperparameter optimization demonstrate the effectiveness of both approaches. Tests based on 195 representative images of the study area show that increasing dataset balance using the AS model yields better performance. More specifically, AS achieved a Dice Coefficient (DC) of 0.93, an Omission Error (OE) of 0.086, and a Commission Error (CE) of 0.045, while the 128 model achieved a DC of 0.86, an OE of 0.12, and a CE of 0.12. These findings should provide a basis for further development of scalable automatic burned-area mapping tools.
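The three scores reported in the abstract can be illustrated with a short sketch. The abstract does not give the formulas, so the code below assumes the definitions commonly used in burned-area mapping: DC = 2TP / (2TP + FP + FN), OE = FN / (TP + FN), and CE = FP / (TP + FP), where a pixel value of 1 means "burned". The function name `burned_area_metrics` and the tiny masks are hypothetical and are not taken from the paper.

```python
def burned_area_metrics(pred, truth):
    """Return (dice, omission_error, commission_error) for two binary masks.

    pred and truth are equally sized 2-D lists of 0/1 values (1 = burned).
    The formulas are the conventional ones and are an assumption here.
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1      # burned pixel correctly mapped
            elif p and not t:
                fp += 1      # unburned pixel mapped as burned (commission)
            elif t and not p:
                fn += 1      # burned pixel missed by the model (omission)
    # Guard against empty masks to avoid division by zero.
    dice = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0
    omission = fn / (tp + fn) if (tp + fn) else 0.0
    commission = fp / (tp + fp) if (tp + fp) else 0.0
    return dice, omission, commission


# Toy example: 4 truly burned pixels, 3 detected, 1 missed, 1 false alarm.
truth = [[1, 1, 0, 0],
         [1, 1, 0, 0],
         [0, 0, 0, 0]]
pred = [[1, 1, 1, 0],
        [1, 0, 0, 0],
        [0, 0, 0, 0]]

dice, oe, ce = burned_area_metrics(pred, truth)
print(f"DC={dice:.2f} OE={oe:.2f} CE={ce:.2f}")  # DC=0.75 OE=0.25 CE=0.25
```

Under these definitions, a higher DC and lower OE and CE indicate a better map, which is the direction of the AS versus 128 comparison in the abstract.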

Adjusting Rate of Spread Factors through Derivative-Free Optimization: A New Methodology to Improve the Performance of Forest Fire Simulators

Sep 11, 2019

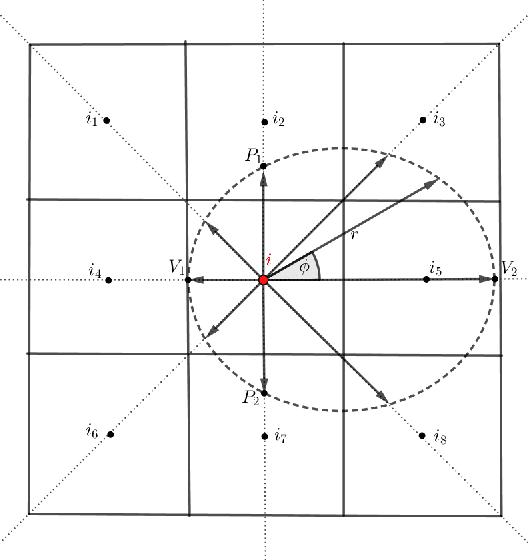

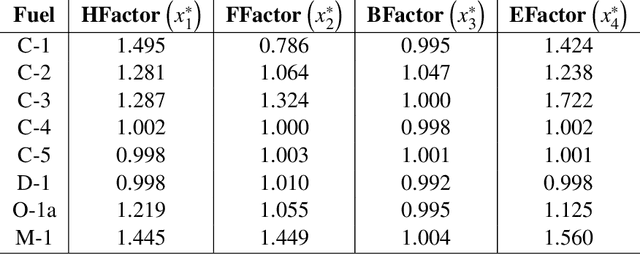

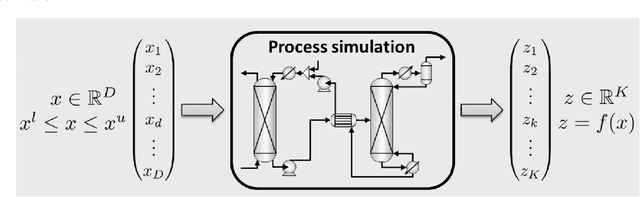

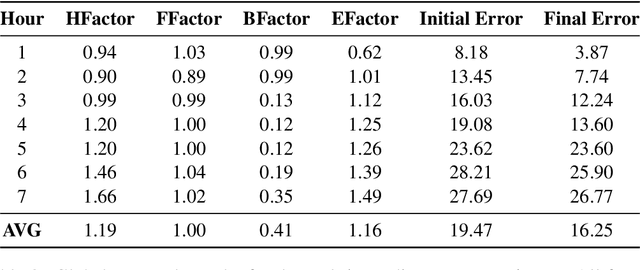

Abstract: In practical applications, wildfire simulators often fail to predict the evolution of the fire scar correctly. This is usually due to multiple factors, including inaccuracies in the input data (such as land cover classification and moisture), improperly represented local winds, cumulative errors in the fire growth simulation model, and a high level of discontinuity or heterogeneity within the landscape, among many others. Therefore, in practice, it is necessary to adjust the propagation of the fire to obtain better results, either to support suppression activities or to improve the performance of the simulator by adopting new default parameters for future events that better represent the observed fire spread. In this article, we address this problem through a new methodology that uses Derivative-Free Optimization (DFO) algorithms to adjust the Rate of Spread (ROS) factors in a fire growth simulation model called Cell2Fire. To achieve this, we solve an error minimization problem that captures the difference between the simulated and observed fire. Each iteration of the DFO framework evaluates the simulator output, allowing us to find the best possible factors for each fuel present on the landscape. Numerical results for different objective functions are shown and discussed, including a performance comparison of alternative DFO algorithms.
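The idea of tuning ROS factors with a derivative-free method can be sketched on a toy problem. Everything in the code below is an assumption made for illustration: the one-dimensional transect, the base ROS values, the synthetic "observed" front positions, and the function names are hypothetical, and the toy `front_position` function merely stands in for a real simulator such as Cell2Fire. Compass (pattern) search is used because it is a simple DFO method; the abstract does not say which algorithms the article compares.

```python
FUELS = ["grass", "shrub"]
BASE_ROS = {"grass": 20.0, "shrub": 10.0}   # m/min, hypothetical defaults
CELL_SIZE = 100.0                            # metres per cell, hypothetical
TRANSECT = ["grass"] * 5 + ["shrub"] * 5 + ["grass"] * 5


def front_position(factors, minutes):
    """Toy simulator: distance (m) the fire front travels along TRANSECT."""
    ros = {fuel: BASE_ROS[fuel] * f for fuel, f in zip(FUELS, factors)}
    remaining, distance = minutes, 0.0
    for fuel in TRANSECT:
        time_to_cross = CELL_SIZE / ros[fuel]
        if remaining >= time_to_cross:
            remaining -= time_to_cross
            distance += CELL_SIZE
        else:
            distance += ros[fuel] * remaining   # front stops inside this cell
            break
    return distance


# Synthetic observations generated with "true" factors (1.5, 0.6).
OBSERVED = [(t, front_position([1.5, 0.6], t)) for t in (10, 30, 60, 90)]


def error(factors):
    """Sum of squared differences between simulated and observed fronts."""
    return sum((front_position(factors, t) - obs) ** 2 for t, obs in OBSERVED)


def compass_search(objective, start, step=0.5, tol=1e-3, max_iter=1000):
    """Derivative-free compass search: poll +/- step along each coordinate."""
    best, best_val = list(start), objective(start)
    for _ in range(max_iter):
        if step < tol:
            break
        polls = []
        for i in range(len(best)):
            for delta in (step, -step):
                cand = list(best)
                cand[i] += delta
                if cand[i] > 0:                 # ROS factors must stay positive
                    polls.append((objective(cand), cand))
        val, cand = min(polls, key=lambda p: p[0])
        if val < best_val:
            best, best_val = cand, val          # accept the best poll point
        else:
            step /= 2                           # no improvement: refine the mesh
    return best, best_val


initial_error = error([1.0, 1.0])
fitted, final_error = compass_search(error, [1.0, 1.0])
print("fitted factors:", dict(zip(FUELS, fitted)))
print("error before:", initial_error, "after:", final_error)
```

Each call to `error` runs the simulator, which is why the number of objective evaluations, rather than gradient information, is the main cost in this kind of calibration.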
