Andres Valenzuela

Artificial Pupil Dilation for Data Augmentation in Iris Semantic Segmentation

Dec 24, 2022

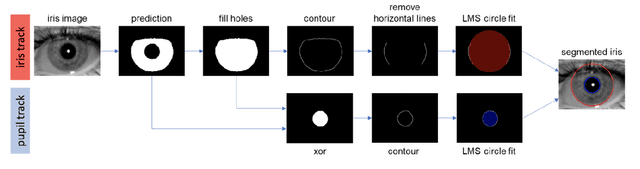

Abstract: Biometrics is the science of identifying an individual based on their intrinsic anatomical or behavioural characteristics, such as fingerprints, face, iris, gait, and voice. Iris recognition is one of the most successful methods because it exploits the rich texture of the human iris, which is unique even for twins and does not degrade with age. Modern approaches to iris recognition use deep learning to segment the valid portion of the iris from the rest of the eye, so that it can then be encoded, stored and compared. This paper aims to improve the accuracy of iris semantic segmentation systems by introducing a novel data augmentation technique. Our method can transform an iris image with a given dilation level into any desired dilation level, thus increasing the variability and number of training examples obtained from a small dataset. The proposed method is fast and does not require training. The results indicate that our data augmentation method can improve segmentation accuracy by up to 15% for images with high pupil dilation, which makes the iris recognition pipeline more reliable, even under extreme dilation.

* 6 pages, 7 figures, 2 tables

Learning to Predict Fitness for Duty using Near Infrared Periocular Iris Images

Sep 04, 2022

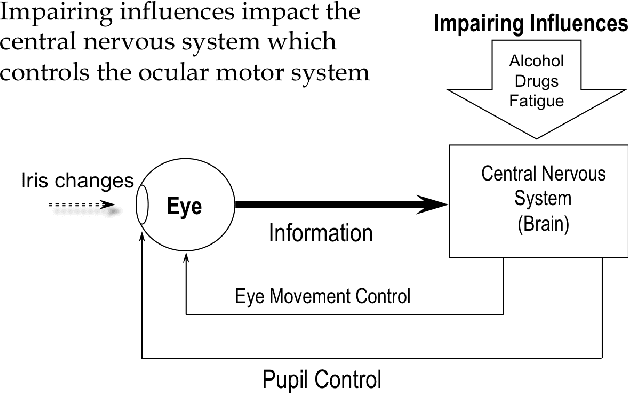

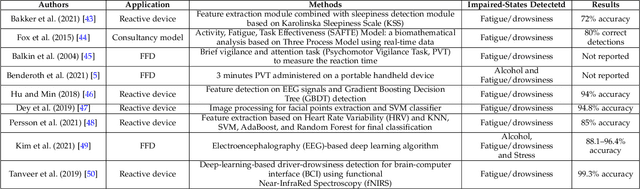

Abstract: This research proposes a new database and method to detect reduced alertness caused by alcohol consumption, drug consumption and sleep deprivation from Near-Infra-Red (NIR) periocular eye images. The study focuses on determining the effect of these external factors on the Central Nervous System (CNS). The goal is to analyse how they affect iris and pupil movement behaviours and whether these changes can be classified with a standard iris NIR capture device. This paper proposes a modified MobileNetV2 to classify iris NIR images taken from subjects under the influence of alcohol, drugs or sleepiness. The results show that the MobileNetV2-based classifier can robustly detect the Unfit alertness condition from iris samples captured after alcohol and drug consumption, with a detection accuracy of 91.3% and 99.1%, respectively. The sleepiness condition is the most challenging, with 72.4%. For two-class grouped images belonging to the Fit/Unfit classes, the model obtained an accuracy of 94.0% and 84.0%, respectively, using fewer parameters than a standard deep learning network. This work is a step forward in biometric applications for developing an automatic system to classify "Fitness for Duty" and prevent accidents due to alcohol/drug consumption and sleepiness.

Towards an Efficient Semantic Segmentation Method of ID Cards for Verification Systems

Nov 24, 2021

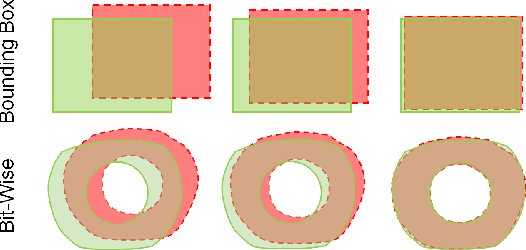

Abstract: Removing the background in ID Card images is a real challenge for remote verification systems because many of the re-digitalised images present cluttered backgrounds, poor illumination conditions, distortion and occlusions. The background in ID Card images confuses the classifiers and the text extraction. Due to the lack of available images for research, this field remains an open problem in computer vision. This work proposes a method for removing the background using semantic segmentation of ID Cards. The method was evaluated on images captured in the wild during real operation: a manually labelled dataset of 45,007 images covering five types of ID Cards from three countries (Chile, Argentina and Mexico), including typical presentation attack scenarios. This method can help to improve the subsequent stages of a regular identity verification or document tampering detection system. Two Deep Learning approaches were explored, based on MobileUNet and DenseNet10. The best results were obtained using MobileUNet, with 6.5 million parameters. For Chilean ID Cards, the mean Intersection over Union (IoU) was 0.9926 on a private test dataset of 4,988 images. The best results for the fused multi-country dataset of ID Card images from Chile, Argentina and Mexico reached an IoU of 0.9911. The proposed methods are lightweight enough to be used in real-time operation on mobile devices.
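For readers unfamiliar with the metric reported above, the following is a minimal sketch of how a mean Intersection over Union can be computed from a predicted and a ground-truth label mask. It is not the authors' evaluation code: the function name `mean_iou`, the nested-list mask representation, and the choice to skip classes absent from both masks are assumptions made for illustration.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over the classes that appear in at least one of the two masks.

    pred and target are 2D lists of integer class labels with the same shape
    (a hypothetical representation chosen to keep the sketch dependency-free).
    """
    ious = []
    for c in range(num_classes):
        inter = 0
        union = 0
        for p_row, t_row in zip(pred, target):
            for p, t in zip(p_row, t_row):
                in_pred = p == c
                in_target = t == c
                inter += in_pred and in_target   # pixel labelled c in both masks
                union += in_pred or in_target    # pixel labelled c in either mask
        if union:                                # skip classes absent from both masks
            ious.append(inter / union)
    if not ious:
        raise ValueError("no class from range(num_classes) is present in the masks")
    return sum(ious) / len(ious)


# Toy example: 0 = background, 1 = ID card.
pred = [[0, 1],
        [1, 1]]
target = [[0, 1],
          [0, 1]]
score = mean_iou(pred, target, num_classes=2)
```

In the toy example the background class has an IoU of 1/2 and the card class an IoU of 2/3, so `score` is their average.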

Semantic Segmentation of Periocular Near-Infra-Red Eye Images Under Alcohol Effects

Jun 30, 2021

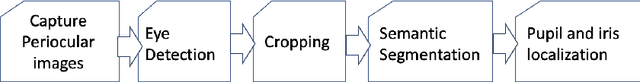

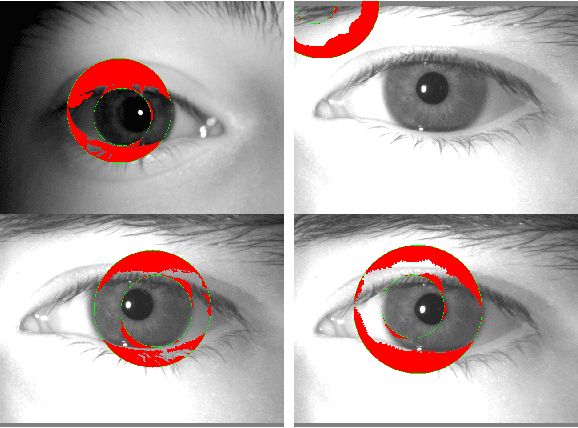

Abstract: This paper proposes a new framework to detect, segment, and localize the eyes in a periocular Near-Infra-Red iris image captured under the effects of alcohol consumption. The purpose of the system is to measure fitness for duty. Fitness-for-duty systems determine whether a person is physically or psychologically able to perform their tasks. Our framework is based on an object detector trained from scratch to detect both eyes in a single image. Then, two efficient networks were used for semantic segmentation: a Criss-Cross attention network and DenseNet10, with only 122,514 and 210,732 parameters, respectively. These networks can find the pupil, iris, and sclera. Finally, the binary output eye mask is used to estimate the pupil and iris diameters with high precision. Five state-of-the-art algorithms were used for this purpose, and a mixed proposal reached the best results. A second contribution is an alcohol behavior curve that detects the presence of alcohol from a stream of images captured from an iris instance. In addition, a manually labeled database with more than 20k images was created. Our best method, DenseNet10 with only 210,732 parameters, obtains a mean Intersection-over-Union of 94.54% and an average error of only 1 pixel.
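As an illustration of how a diameter can be read off a binary mask, here is a minimal sketch that uses the equivalent-circle diameter, d = 2·sqrt(A/π), where A is the number of foreground pixels. This is a swapped-in, generic estimator and an assumption on our part: the abstract does not say which five algorithms were compared, and the function name `equivalent_diameter` is hypothetical.

```python
import math


def equivalent_diameter(mask):
    """Diameter (in pixels) of the circle whose area equals the mask area.

    mask is a 2D list of 0/1 values, e.g. a binary pupil or iris mask.
    Assumption: the region is roughly circular, so area is a fair proxy.
    """
    area = sum(1 for row in mask for value in row if value)
    return 2.0 * math.sqrt(area / math.pi)


# Toy pupil mask with 12 foreground pixels.
pupil_mask = [
    [0, 1, 1, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [0, 1, 1, 0],
]
pupil_diameter = equivalent_diameter(pupil_mask)
```

An area-based estimate is insensitive to small boundary noise but will be biased when the region is partly occluded by eyelids or eyelashes, which is one reason more elaborate fitting methods exist.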

Selfie Periocular Verification using an Efficient Super-Resolution Approach

Feb 16, 2021

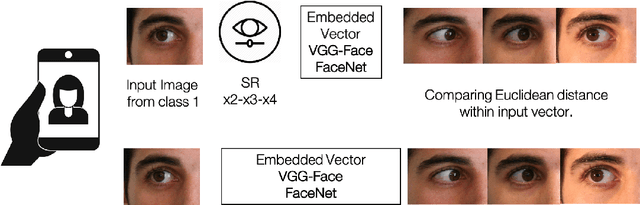

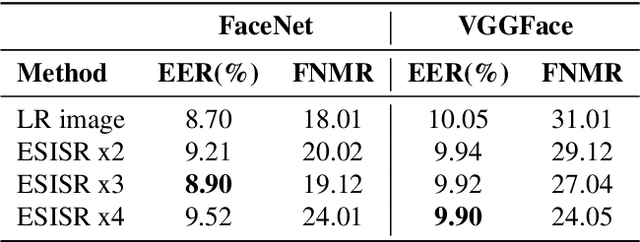

Abstract: Selfie-based biometrics has great potential for a wide range of applications, from marketing to higher-security environments like online banking. This is now especially relevant since periocular verification, for example, is contactless and therefore safe to use in pandemics such as COVID-19. However, selfie-based biometrics faces some challenges since there is limited control over the data acquisition conditions. Therefore, super-resolution has to be used to increase the quality of the captured images. Most state-of-the-art super-resolution methods use deep networks with large filters, so they need to train and store a correspondingly large number of parameters, which makes them difficult to use on the mobile devices commonly used for selfie-based biometrics. To achieve an efficient super-resolution method, we propose an Efficient Single Image Super-Resolution (ESISR) algorithm, which takes into account a trade-off between the efficiency of the deep neural network and the size of its filters. To that end, the method implements a novel loss function based on the Sharpness metric. This metric turns out to be more suitable for increasing the quality of the eye images. Our method drastically reduces the number of parameters when compared with Deep CNNs with Skip Connection and Network (DCSCN): from 2,170,142 to 28,654 parameters (about 76 times fewer) when the image size is increased by a factor of x3. Furthermore, the proposed method keeps the sharp quality of the images, which is highly relevant for biometric recognition purposes. The results on remote verification systems with raw images reached an Equal Error Rate (EER) of 8.7% for FaceNet and 10.05% for VGGFace. When embedding vectors from periocular images were used, the best results reached an EER of 8.9% (x3) for FaceNet and 9.90% (x4) for VGGFace.
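The Equal Error Rate quoted above is the operating point at which the false acceptance rate (FAR) and false rejection rate (FRR) coincide. The following is a minimal sketch of one common way to approximate it from two lists of similarity scores; it is not the authors' evaluation code. The function name `equal_error_rate`, the convention that higher scores mean "more similar", and the toy scores are all assumptions for illustration.

```python
def equal_error_rate(genuine, impostor):
    """Approximate the EER by scanning every observed score as a threshold.

    genuine:  similarity scores for same-identity comparisons
    impostor: similarity scores for different-identity comparisons
    A comparison is accepted when its score is >= the threshold.
    Returns (eer, threshold) at the point where |FAR - FRR| is smallest.
    """
    best = None
    for threshold in sorted(set(genuine) | set(impostor)):
        far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors accepted
        frr = sum(s < threshold for s in genuine) / len(genuine)     # genuines rejected
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0, threshold)
    return best[1], best[2]


# Toy scores (made up for the example, not taken from the paper).
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
```

With these toy scores, one of four impostors is accepted and one of four genuine comparisons is rejected at a threshold of 0.6, so FAR and FRR are both 25%. On real data the two rates rarely cross exactly at an observed score, which is why the sketch averages FAR and FRR at the closest point.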
