Andrei Pătraşcu

Fusing Dictionary Learning and Support Vector Machines for Unsupervised Anomaly Detection

Apr 05, 2024

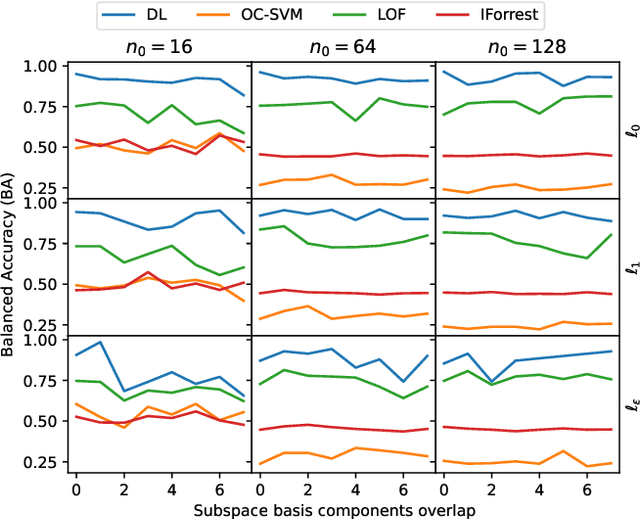

Abstract: In this paper we study how sparse representation techniques can improve one-class support vector machines (OC-SVM) for unsupervised anomaly detection. Since Dictionary Learning (DL) has recently become a common analysis technique that reveals hidden sparse patterns in data, our approach uses this insight to give unsupervised detection more control over pattern finding and dimensions. We introduce a new anomaly detection model that unifies the OC-SVM and DL residual functions into a single composite objective, which is then solved through K-SVD-type iterative algorithms. A closed form of the alternating K-SVD iteration is explicitly derived for the new composite model, and practical implementable schemes are discussed. The standard DL model is adapted for the Dictionary Pair Learning (DPL) context, where the usual sparsity constraints are naturally eliminated. Finally, we extend both objectives to the more general setting that allows the use of kernel functions. The empirical convergence properties of the resulting algorithms are provided, and an in-depth analysis of their parametrization is performed, while also demonstrating their numerical performance in comparison with existing methods.

Learning Explicitly Conditioned Sparsifying Transforms

Mar 05, 2024

Abstract: Over the last decades, sparsifying transforms have become widely known tools for finding structured sparse representations of signals in certain transform domains. Despite the popularity of classical transforms such as the DCT and wavelets, learning optimal transforms that guarantee good sparse representations of data has recently been analyzed in a series of papers. Typically, the condition number and the representation ability are complementary key features of learned square transforms that may not be explicitly controlled in a given optimization model. Unlike existing approaches in the literature, we consider a new sparsifying transform model that enforces explicit control over the data representation quality and the condition number of the learned transforms. We confirm through numerical experiments that our model presents better numerical behavior than the state of the art.

Unsupervised Abnormal Traffic Detection through Topological Flow Analysis

May 14, 2022

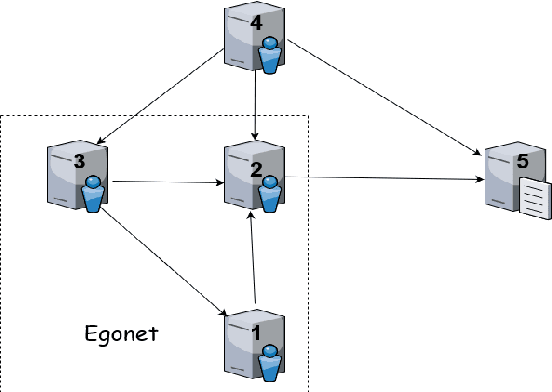

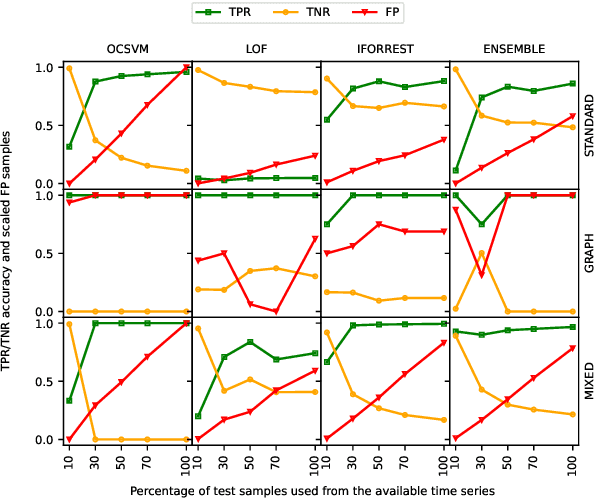

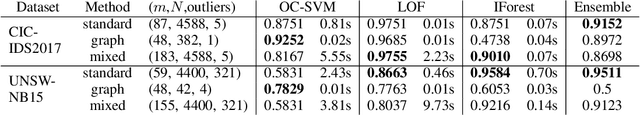

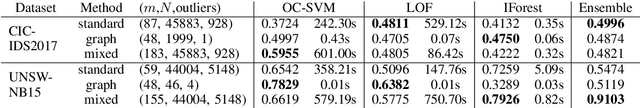

Abstract: Cyberthreats are a permanent concern in our modern technological world. In recent years, sophisticated traffic analysis techniques and anomaly detection (AD) algorithms have been employed to face increasingly subversive adversarial attacks. A malicious intrusion, defined as an invasive action intending to illegally exploit private resources, manifests through unusual data traffic and/or abnormal connectivity patterns. Despite the plethora of statistical or signature-based detectors currently provided in the literature, the topological connectivity component of a malicious flow is less exploited. Furthermore, a great proportion of the existing statistical intrusion detectors are based on supervised learning, which relies on labeled data. By viewing network flows as weighted directed interactions between pairs of nodes, in this paper we present a simple method that facilitates the use of connectivity graph features in unsupervised anomaly detection algorithms. We test our methodology on real network traffic datasets and observe several improvements over standard AD.
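
As a rough illustration of the idea in this abstract, the sketch below treats each flow as a weighted directed edge and attaches simple connectivity features of its endpoints to it. The feature set (weighted and unweighted out-degree of the source, in-degree of the destination) and the function name `flow_graph_features` are assumptions made for illustration only; the abstract does not specify which graph features the paper uses.

```python
from collections import defaultdict

def flow_graph_features(flows):
    """Build one feature row per flow from a list of (src, dst, weight) tuples.

    Hypothetical feature layout (not taken from the paper):
    [flow weight, out-degree of src, total outgoing weight of src,
     in-degree of dst, total incoming weight of dst]
    """
    out_weight = defaultdict(float)
    in_weight = defaultdict(float)
    out_neighbors = defaultdict(set)
    in_neighbors = defaultdict(set)

    # First pass: accumulate per-node connectivity statistics.
    for src, dst, w in flows:
        out_weight[src] += w
        in_weight[dst] += w
        out_neighbors[src].add(dst)
        in_neighbors[dst].add(src)

    # Second pass: attach the endpoint statistics to every flow.
    rows = []
    for src, dst, w in flows:
        rows.append([
            w,
            len(out_neighbors[src]),
            out_weight[src],
            len(in_neighbors[dst]),
            in_weight[dst],
        ])
    return rows

# Toy flows: node "a" talks to "b" twice and to "c" once; "d" talks to "b" once.
flows = [("a", "b", 10.0), ("a", "c", 5.0), ("d", "b", 1.0), ("a", "b", 2.0)]
features = flow_graph_features(flows)
```

Rows of this kind could then be passed, together with the usual per-flow statistics, to any unsupervised detector.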

Dictionary Learning with Uniform Sparse Representations for Anomaly Detection

Jan 11, 2022

Abstract: Many applications, such as audio and image processing, show that sparse representations are a powerful and efficient signal modeling technique. Finding an optimal dictionary that simultaneously generates the sparsest representations of data and the smallest approximation error is a hard problem approached by dictionary learning (DL). We study how DL performs in detecting abnormal samples in a dataset of signals. In this paper we use a particular DL formulation that seeks uniform sparse representations to detect the underlying subspace of the majority of samples in a dataset, using a K-SVD-type algorithm. Numerical simulations show that one can efficiently use the resulting subspace to discriminate the anomalies from the regular data points.
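
The subspace-based scoring described in this abstract can be pictured with a much simpler stand-in. The sketch below does not implement the paper's uniform sparse representation model or its K-SVD-type algorithm; it assumes a plain truncated SVD to estimate the dominant subspace and scores each signal by its residual norm after projection. The function name `subspace_residual_scores` and the synthetic data are hypothetical.

```python
import numpy as np

def subspace_residual_scores(Y, k):
    """Score each column of Y (shape m x N) by its distance to a k-dim subspace.

    The subspace is estimated with a truncated SVD, used here only as a
    simple substitute for a learned dictionary.
    """
    U, _, _ = np.linalg.svd(Y, full_matrices=False)
    B = U[:, :k]                      # orthonormal basis of the dominant subspace
    residual = Y - B @ (B.T @ Y)      # part of each signal outside the subspace
    return np.linalg.norm(residual, axis=0)

# Synthetic data: 200 signals near a 2-dimensional subspace, plus 5 unstructured ones.
rng = np.random.default_rng(0)
m, N, k = 10, 200, 2
basis = np.linalg.qr(rng.standard_normal((m, k)))[0]
inliers = basis @ rng.standard_normal((k, N)) + 0.01 * rng.standard_normal((m, N))
outliers = rng.standard_normal((m, 5))
Y = np.hstack([inliers, outliers])

scores = subspace_residual_scores(Y, k)
# Larger scores indicate signals that the dominant subspace represents poorly.
```

In this toy setting the last five columns are the ones expected to receive the largest scores, since they are not generated from the shared subspace.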
