Andreas Grafberger

FedLesScan: Mitigating Stragglers in Serverless Federated Learning

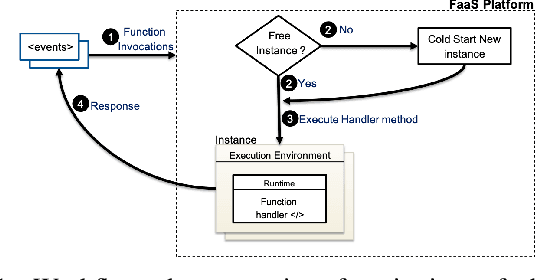

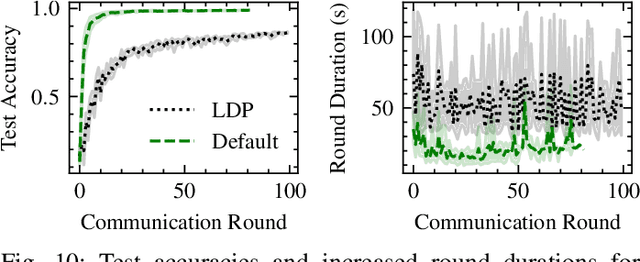

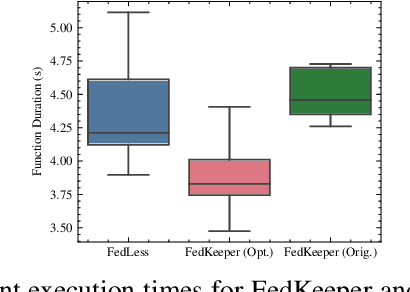

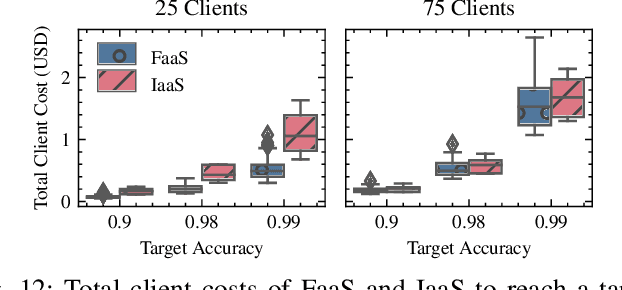

Nov 10, 2022Abstract:Federated Learning (FL) is a machine learning paradigm that enables the training of a shared global model across distributed clients while keeping the training data local. While most prior work on designing systems for FL has focused on using stateful always running components, recent work has shown that components in an FL system can greatly benefit from the usage of serverless computing and Function-as-a-Service technologies. To this end, distributed training of models with severless FL systems can be more resource-efficient and cheaper than conventional FL systems. However, serverless FL systems still suffer from the presence of stragglers, i.e., slow clients due to their resource and statistical heterogeneity. While several strategies have been proposed for mitigating stragglers in FL, most methodologies do not account for the particular characteristics of serverless environments, i.e., cold-starts, performance variations, and the ephemeral stateless nature of the function instances. Towards this, we propose FedLesScan, a novel clustering-based semi-asynchronous training strategy, specifically tailored for serverless FL. FedLesScan dynamically adapts to the behaviour of clients and minimizes the effect of stragglers on the overall system. We implement our strategy by extending an open-source serverless FL system called FedLess. Moreover, we comprehensively evaluate our strategy using the 2nd generation Google Cloud Functions with four datasets and varying percentages of stragglers. Results from our experiments show that compared to other approaches FedLesScan reduces training time and cost by an average of 8% and 20% respectively while utilizing clients better with an average increase in the effective update ratio of 17.75%.

FedLess: Secure and Scalable Federated Learning Using Serverless Computing

Nov 05, 2021

Abstract:The traditional cloud-centric approach for Deep Learning (DL) requires training data to be collected and processed at a central server which is often challenging in privacy-sensitive domains like healthcare. Towards this, a new learning paradigm called Federated Learning (FL) has been proposed that brings the potential of DL to these domains while addressing privacy and data ownership issues. FL enables remote clients to learn a shared ML model while keeping the data local. However, conventional FL systems face several challenges such as scalability, complex infrastructure management, and wasted compute and incurred costs due to idle clients. These challenges of FL systems closely align with the core problems that serverless computing and Function-as-a-Service (FaaS) platforms aim to solve. These include rapid scalability, no infrastructure management, automatic scaling to zero for idle clients, and a pay-per-use billing model. To this end, we present a novel system and framework for serverless FL, called FedLess. Our system supports multiple commercial and self-hosted FaaS providers and can be deployed in the cloud, on-premise in institutional data centers, and on edge devices. To the best of our knowledge, we are the first to enable FL across a large fabric of heterogeneous FaaS providers while providing important features like security and Differential Privacy. We demonstrate with comprehensive experiments that the successful training of DNNs for different tasks across up to 200 client functions and more is easily possible using our system. Furthermore, we demonstrate the practical viability of our methodology by comparing it against a traditional FL system and show that it can be cheaper and more resource-efficient.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge