Anders Miltner

Program Synthesis Through Reinforcement Learning Guided Tree Search

Jun 08, 2018

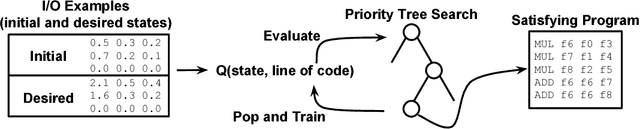

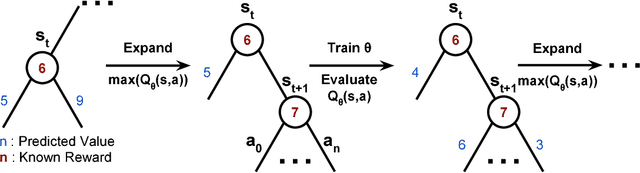

Abstract:Program Synthesis is the task of generating a program from a provided specification. Traditionally, this has been treated as a search problem by the programming languages (PL) community and more recently as a supervised learning problem by the machine learning community. Here, we propose a third approach, representing the task of synthesizing a given program as a Markov decision process solvable via reinforcement learning(RL). From observations about the states of partial programs, we attempt to find a program that is optimal over a provided reward metric on pairs of programs and states. We instantiate this approach on a subset of the RISC-V assembly language operating on floating point numbers, and as an optimization inspired by search-based techniques from the PL community, we combine RL with a priority search tree. We evaluate this instantiation and demonstrate the effectiveness of our combined method compared to a variety of baselines, including a pure RL ablation and a state of the art Markov chain Monte Carlo search method on this task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge