Anastasios K. Papazafeiropoulos

Near-Field Terahertz Communications: Model-Based and Model-Free Channel Estimation

Feb 09, 2023

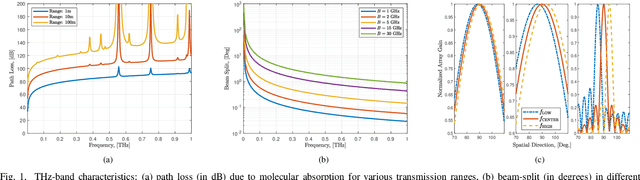

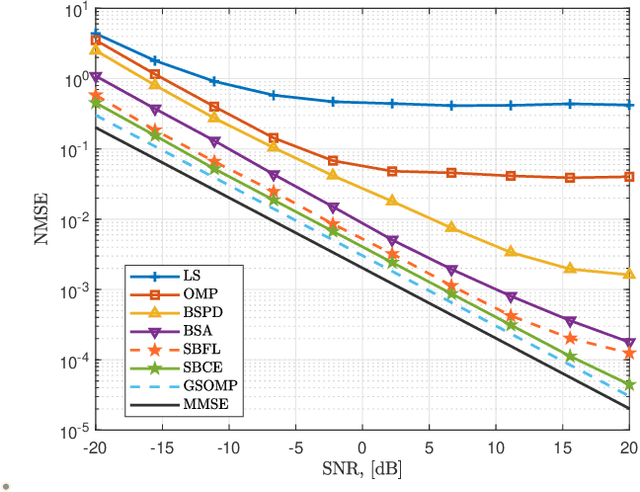

Abstract: The terahertz (THz) band is expected to be one of the key enabling technologies of sixth-generation (6G) wireless networks because of its abundant available bandwidth and very narrow beamwidth. Because of the high operating frequencies, electrically large array apertures are employed, and the signal wavefront becomes spherical in the near-field. Therefore, a near-field signal model should be considered for channel acquisition in THz systems. Unlike prior works, which mostly ignore the impact of near-field beam-split (NB) and consider either narrowband scenarios or far-field models, this paper introduces both a model-based and a model-free technique for wideband THz channel estimation in the presence of NB. The model-based approach is based on the orthogonal matching pursuit (OMP) algorithm, for which we design an NB-aware dictionary. The key idea is to exploit the angular and range deviations due to NB. We then employ the OMP algorithm, which accounts for these deviations and thereby mitigates the effect of NB. We further introduce a federated learning (FL)-based approach as a model-free solution for channel estimation in a multi-user scenario to achieve reduced complexity and training overhead. Through numerical simulations, we demonstrate the effectiveness of the proposed channel estimation techniques for wideband THz systems in comparison with existing state-of-the-art techniques.
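The OMP step mentioned in the abstract can be sketched generically as follows. This is a minimal illustration, not the paper's implementation: the NB-aware dictionary is replaced by a random unit-norm dictionary `A`, and the function name `omp`, the problem sizes, and the path gains are all assumptions chosen for the example.

```python
import numpy as np

def omp(A, y, n_paths):
    """Greedy OMP: select n_paths columns of A that best explain y."""
    residual = y.copy()
    support = []
    coeffs = np.zeros(0, dtype=complex)
    for _ in range(n_paths):
        # Correlate the residual with every atom (columns assumed unit-norm).
        correlations = np.abs(A.conj().T @ residual)
        if support:
            correlations[support] = 0.0  # never pick the same atom twice
        support.append(int(np.argmax(correlations)))
        # Least-squares fit on the selected atoms, then refresh the residual.
        coeffs, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coeffs
    x = np.zeros(A.shape[1], dtype=complex)
    x[support] = coeffs
    return x, support

# Toy problem (assumed sizes): 64 measurements, 128 atoms, 3 active paths.
rng = np.random.default_rng(0)
M, N, K = 64, 128, 3
A = rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))
A /= np.linalg.norm(A, axis=0)          # normalize each atom
true_support = [5, 40, 97]              # hypothetical path indices
x_true = np.zeros(N, dtype=complex)
x_true[true_support] = [1.0 + 0.5j, -0.8j, 0.6]
y = A @ x_true                          # noiseless measurements
x_hat, support = omp(A, y, K)
```

In an NB-aware setting, the columns of `A` would instead be steering vectors indexed by angle and range at each subcarrier; the greedy selection and least-squares refit would stay the same.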

Terahertz-Band Channel and Beam Split Estimation via Array Perturbation Model

Aug 13, 2022

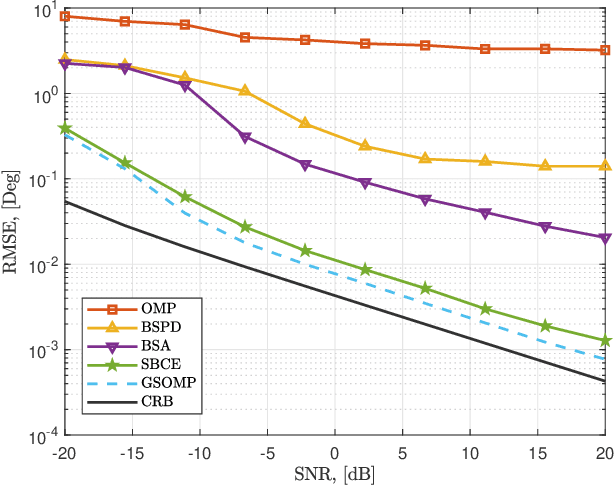

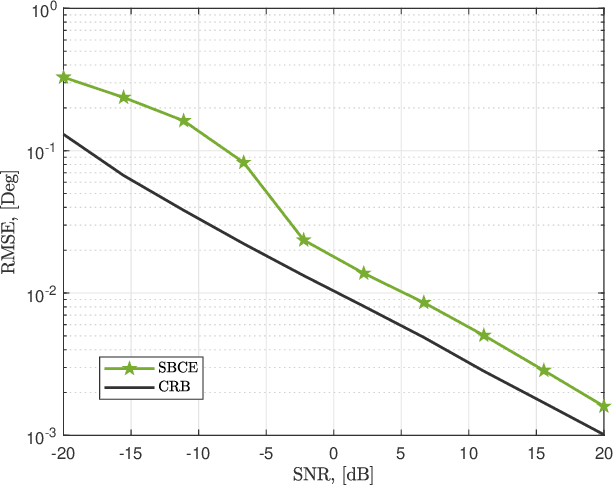

Abstract: Because it offers ultra-wide bandwidth and pencil beamforming, the terahertz (THz) band has been envisioned as one of the key enabling technologies for sixth-generation networks. However, the acquisition of the THz channel entails several unique challenges, such as severe path loss and beam-split. Prior works usually employ ultra-massive arrays and additional hardware components comprising time-delayers to compensate for these losses. In order to provide a cost-effective solution, this paper introduces a sparse-Bayesian-learning (SBL) technique for joint channel and beam-split estimation. Specifically, we first model the beam-split as an array perturbation, inspired by array signal processing. Next, a low-complexity approach is developed by exploiting the line-of-sight-dominant feature of the THz channel to reduce the computational complexity involved in the proposed SBL technique for channel estimation (SBCE). Additionally, based on federated learning, we implement a model-free counterpart to the proposed model-based SBCE solution. Furthermore, we examine the near-field considerations of the THz channel and introduce the range-dependent near-field beam-split. The theoretical performance bounds, i.e., Cramér-Rao lower bounds, are derived for near- and far-field parameters, e.g., user directions, ranges and beam-split, and several numerical experiments are conducted. Numerical simulations demonstrate that SBCE outperforms the existing approaches and exhibits lower hardware cost.
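Far-field beam-split can be illustrated numerically with a short sketch. It is a textbook-style illustration rather than the paper's array perturbation model, and it assumes a uniform linear array with half-wavelength spacing at the carrier, a frequency-independent analog beamformer, and hypothetical values for the carrier (300 GHz), bandwidth (30 GHz), and array size (128). Under these assumptions, a beam steered to $\sin\theta_0$ at the carrier $f_c$ peaks at $\sin\theta = (f_c/f_m)\sin\theta_0$ on subcarrier $f_m$.

```python
import numpy as np

def steering(n_ant, f, fc, sin_theta):
    """Far-field ULA response at frequency f, with spacing c / (2 * fc)."""
    n = np.arange(n_ant)
    return np.exp(1j * np.pi * (f / fc) * n * sin_theta) / np.sqrt(n_ant)

fc, bw, n_ant = 300e9, 30e9, 128           # assumed carrier, bandwidth, antennas
sin0 = 0.5                                 # intended direction: sin(30 degrees)
w = steering(n_ant, fc, fc, sin0)          # beamformer designed at the carrier
grid = np.linspace(-1.0, 1.0, 4001)        # candidate sin(theta) values

def beam_peak(f):
    """Return the sin(theta) where the fixed beamformer w has maximum gain at f."""
    gains = np.abs(np.array([np.vdot(w, steering(n_ant, f, fc, s)) for s in grid]))
    return grid[int(np.argmax(gains))]

peak_center = beam_peak(fc)                # beam at the carrier frequency
peak_low = beam_peak(fc - bw / 2)          # beam at the lowest subcarrier
predicted_low = sin0 * fc / (fc - bw / 2)  # closed-form shift under the assumptions
```

Comparing `peak_low` with `peak_center` shows the beam drifting away from the intended direction at the band edge, which is the effect a beam-split-aware estimator has to account for.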

Implicit Channel Learning for Machine Learning Applications in 6G Wireless Networks

Jun 24, 2022

Abstract: With the deployment of fifth-generation (5G) wireless systems gathering momentum across the world, possible technologies for 6G are under active research and discussion. In particular, machine learning (ML) is expected to enhance and aid emerging 6G applications such as virtual and augmented reality, vehicular autonomy, and computer vision. As a result, large segments of wireless data traffic will comprise image, video and speech data. ML algorithms process these data for classification, recognition and estimation through learning models located on cloud servers, which requires wireless transmission of data from edge devices to the cloud server. Channel estimation, handled separately from the recognition step, is critical for accurate learning performance. Toward combining the learning for both the channel and the ML data, we introduce implicit channel learning to perform the ML tasks without estimating the wireless channel. Here, the ML models are trained with channel-corrupted datasets in place of nominal data. Without channel estimation, the proposed approach exhibits approximately 60% improvement in image and speech classification tasks for diverse scenarios such as millimeter wave and IEEE 802.11p vehicular channels.

Uplink Performance Analysis of Cell-Free mMIMO Systems under Channel Aging

Apr 21, 2021

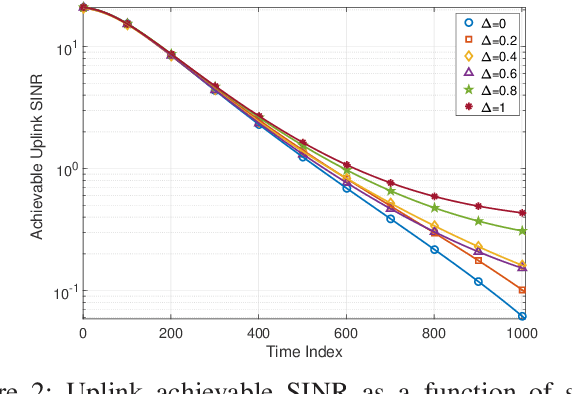

Abstract: In this paper, we investigate the impact of channel aging on the uplink performance of a cell-free (CF) massive multiple-input multiple-output (mMIMO) system with a minimum mean squared error (MMSE) receiver. To this end, we present a new model for the temporal evolution of the channel, which allows the channel to age at different rates at different access points (APs). Under this setting, we derive the deterministic equivalent of the per-user achievable signal-to-interference-plus-noise ratio (SINR). In addition to validating the theoretical expressions, our simulation results reveal that, at low user mobilities, the SINR of CF-mMIMO is nearly 5 dB higher than its cellular counterpart with the same number of antennas, and about 8 dB higher than that of an equivalent small-cell network with the same number of APs. On the other hand, at very high user velocities, and when the channels between the UEs and the different APs age at the same rate, the relative impact of aging is higher for CF-mMIMO compared to cellular mMIMO. However, when the channel ages at the different APs with different rates, the effect of aging on CF-mMIMO is marginally mitigated, especially for larger frame durations.

Federated Learning for Physical Layer Design

Feb 23, 2021

Abstract: Model-free techniques, such as machine learning (ML), have recently attracted much interest for physical layer design, e.g., symbol detection, channel estimation and beamforming. Most of these ML techniques employ centralized learning (CL) schemes and assume the availability of datasets at a parameter server (PS), demanding the transmission of data from the edge devices, such as mobile phones, to the PS. Exploiting the data generated at the edge, federated learning (FL) has been proposed recently as a distributed learning scheme, in which each device computes the model parameters and sends them to the PS for model aggregation, while the datasets are kept intact at the edge. Thus, FL is more communication-efficient and privacy-preserving than CL, and it is applicable to wireless communication scenarios wherein the data are generated at the edge devices. This article discusses the recent advances in FL-based training for physical layer design problems, and identifies the related design challenges along with possible solutions to improve the performance in terms of communication overhead and model, data and hardware complexity.
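The FL scheme described above, in which devices train locally and the PS aggregates only model parameters, can be sketched with federated averaging. This is a minimal illustration under stated assumptions: a linear least-squares model stands in for a physical-layer learning task, and the names `local_update` and `fed_avg`, the learning rate, the round counts, and the synthetic datasets are all hypothetical.

```python
import numpy as np

def local_update(w, X, y, lr=0.1, epochs=5):
    """Run a few gradient steps on one device's private data."""
    w = w.copy()
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)   # least-squares gradient
        w -= lr * grad
    return w

def fed_avg(datasets, dim, rounds=50):
    """Server loop: broadcast the model, collect local models, average them."""
    w_global = np.zeros(dim)
    sizes = np.array([len(y) for _, y in datasets], dtype=float)
    for _ in range(rounds):
        # Only model parameters leave the devices; raw data stays local.
        local_models = [local_update(w_global, X, y) for X, y in datasets]
        # Weight each device by its dataset size.
        w_global = np.average(local_models, axis=0, weights=sizes)
    return w_global

# Three devices with different amounts of synthetic data (assumed setup).
rng = np.random.default_rng(0)
w_true = np.array([1.0, -2.0, 0.5])
datasets = []
for n in (40, 60, 100):
    X = rng.standard_normal((n, 3))
    y = X @ w_true + 0.01 * rng.standard_normal(n)
    datasets.append((X, y))

w_hat = fed_avg(datasets, dim=3)
```

The communication cost per round here is one model vector per device in each direction, independent of the local dataset size, which is the source of the communication savings relative to uploading the datasets for centralized learning.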
