Amy LaViers

Toward an Expressive Bipedal Robot: Variable Gait Synthesis and Validation in a Planar Model

Sep 13, 2018

Abstract: Humans are efficient, yet expressive in their motion. Human walking behaviors can be used to walk across a great variety of surfaces without falling and to communicate internal state to other humans through variable gait styles. This provides inspiration for creating similarly expressive bipedal robots. To this end, a framework is presented for stylistic gait generation in a compass-like under-actuated planar biped model. The gait design is done using model-based trajectory optimization with variable constraints. For a finite range of optimization parameters, a large set of 360 gaits can be generated for this model. In particular, step length and cost function are varied to produce distinct cyclic walking gaits. From these resulting gaits, 6 gaits are identified and labeled, using embodied movement analysis, with stylistic verbs that correlate with human activity, e.g., "lope" and "saunter". These labels have been validated by conducting user studies in Amazon Mechanical Turk and thus demonstrate that visually distinguishable, meaningful gaits are generated using this framework. This lays groundwork for creating a bipedal humanoid with variable socially competent movement profiles.

Expressivity in Natural and Artificial Systems

Jul 05, 2018

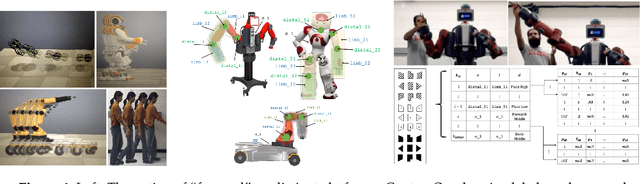

Abstract: Roboticists are trying to replicate animal behavior in artificial systems. Yet, quantitative bounds on the capacity of a moving platform (natural or artificial) to express information in the environment are not known. This paper presents a measure for the capacity of motion complexity -- the expressivity -- of articulated platforms (both natural and artificial) and shows that this measure is stagnant and unexpectedly limited in extant robotic systems. This analysis indicates trends in increasing capacity in both internal and external complexity for natural systems while artificial, robotic systems have increased significantly in the capacity of computational (internal) states but remained more or less constant in mechanical (external) state capacity. This work presents a way to analyze trends in animal behavior and shows that robots are not capable of the same multi-faceted behavior in rich, dynamic environments as natural systems.

Choreographic and Somatic Approaches for the Development of Expressive Robotic Systems

Dec 21, 2017

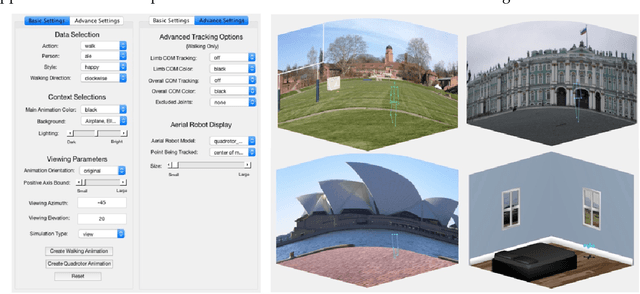

Abstract: As robotic systems are moved out of factory work cells into human-facing environments, questions of choreography become central to their design, placement, and application. With a human viewer or counterpart present, a system will automatically be interpreted within context, style of movement, and form factor by human beings as animate elements of their environment. The interpretation by this human counterpart is critical to the success of the system's integration: knobs on the system need to make sense to a human counterpart; an artificial agent should have a way of notifying a human counterpart of a change in system state, possibly through motion profiles; and the motion of a human counterpart may have important contextual clues for task completion. Thus, professional choreographers, dance practitioners, and movement analysts are critical to research in robotics. They have design methods for movement that align with human audience perception, can identify simplified features of movement for human-robot interaction goals, and have detailed knowledge of the capacity of human movement. This article provides approaches employed by one research lab, specific impacts on technical and artistic projects within, and principles that may guide future such work. The background section reports on choreography, somatic perspectives, improvisation, the Laban/Bartenieff Movement System, and robotics. From this context, methods including embodied exercises, writing prompts, and community building activities have been developed to facilitate interdisciplinary research. The results of this work are presented as an overview of a smattering of projects in areas like high-level motion planning, software development for rapid prototyping of movement, artistic output, and user studies that help understand how people interpret movement. Finally, guiding principles for other groups to adopt are posited.
