Amrita Sawhney

Playing with Food: Learning Food Item Representations through Interactive Exploration

Jan 06, 2021

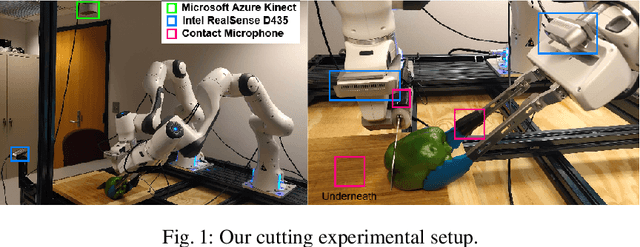

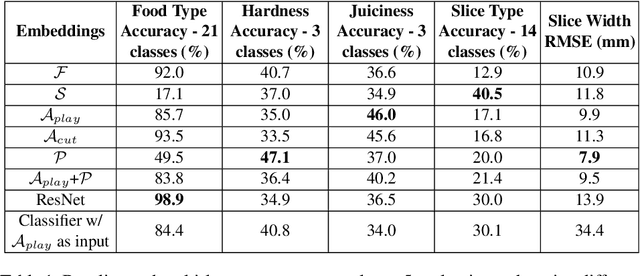

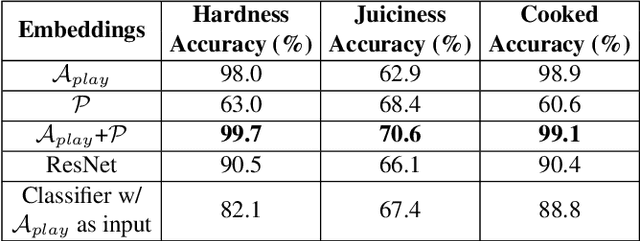

Abstract: A key challenge in robotic food manipulation is modeling the material properties of diverse and deformable food items. We propose a multimodal sensory approach in which a robot interacts and plays with food in order to distinguish these properties across food items. First, we use a robotic arm and an array of sensors, synchronized using ROS, to collect a diverse dataset of 21 unique food items with varying slices and properties. We then learn visual embedding networks that use a combination of proprioceptive, audio, and visual data to encode similarities among food items using a triplet loss formulation. Our evaluations show that embeddings learned through interaction can improve performance on a wide range of material and shape classification tasks. We envision that these learned embeddings can serve as a basis for planning and selecting optimal parameters for more material-aware robotic food manipulation skills. Furthermore, we hope to stimulate further innovation in food robotics by sharing this food playing dataset with the research community.
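To make the triplet loss formulation mentioned in the abstract concrete, here is a minimal sketch of the standard margin-based triplet loss on toy vectors. The abstract does not give the exact formulation, so the Euclidean distance, the margin of 1.0, the function names, and the 2-D embeddings are all illustrative assumptions, not the authors' implementation.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard margin-based triplet loss (assumed form, not from the paper).

    The loss is zero once the anchor is closer to the positive than to the
    negative by at least `margin`; otherwise it grows linearly.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Hypothetical 2-D embeddings: the anchor and positive stand in for
# similar food items, the negative for a dissimilar one.
anchor = [0.0, 0.0]
positive = [0.0, 1.0]   # distance 1 from the anchor
negative = [3.0, 4.0]   # distance 5 from the anchor

# Well-separated triplet: 1 - 5 + 1 < 0, so the loss is clipped to zero.
loss_easy = triplet_loss(anchor, positive, negative)

# Swapping the roles violates the margin: 5 - 1 + 1 = 5.
loss_hard = triplet_loss(anchor, negative, positive)
```

In a setup like the one described, the vectors would be outputs of the embedding network, and minimizing this loss over many triplets pulls similar items together and pushes dissimilar ones apart. How similarity between items is determined from the proprioceptive, audio, and visual data is not specified in the abstract.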
