Alperen Görmez

Class Based Thresholding in Early Exit Semantic Segmentation Networks

Oct 27, 2022

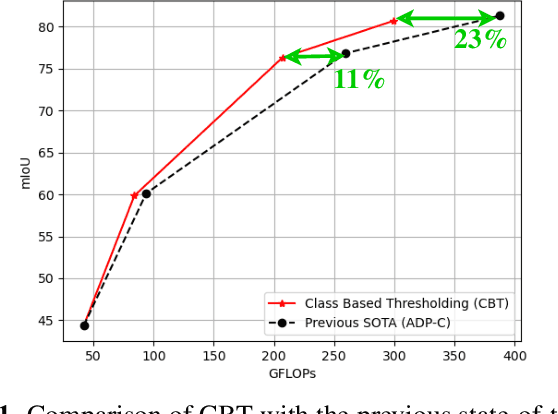

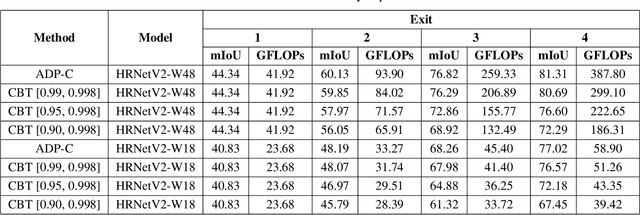

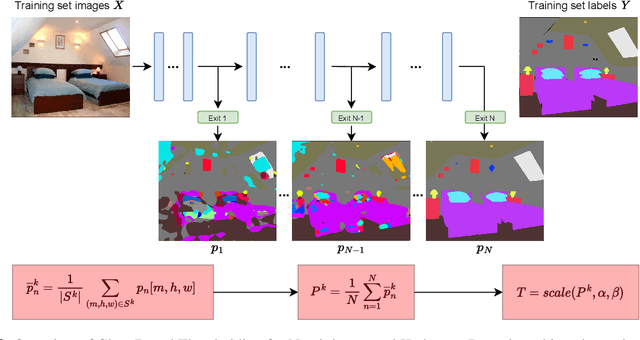

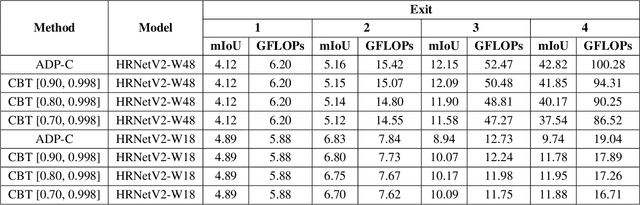

Abstract: We propose Class Based Thresholding (CBT) to reduce the computational cost of early exit semantic segmentation models while preserving the mean intersection over union (mIoU) performance. A key idea of CBT is to exploit the naturally occurring neural collapse phenomenon. Specifically, by calculating the mean prediction probabilities of each class in the training set, CBT assigns a different masking threshold value to each class, so that computation can be terminated sooner for pixels belonging to easy-to-predict classes. We show the effectiveness of CBT on the Cityscapes and ADE20K datasets. CBT can reduce the computational cost by $23\%$ compared to the previous state-of-the-art early exit models.
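The per-class thresholding idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the function names, the linear mapping from mean confidence to threshold, and the `t_min`/`t_max` bounds are all assumptions made for illustration. The abstract only states that thresholds are derived from per-class mean prediction probabilities on the training set.

```python
import numpy as np

def class_mean_confidence(probs, labels, num_classes):
    """Mean predicted probability of the true class, computed per class.

    probs:  (N, C) softmax outputs for N training pixels
    labels: (N,) non-negative integer ground-truth class per pixel
    """
    true_class_prob = probs[np.arange(len(labels)), labels]
    sums = np.bincount(labels, weights=true_class_prob, minlength=num_classes)
    counts = np.bincount(labels, minlength=num_classes)
    return sums / np.maximum(counts, 1)  # avoid division by zero for absent classes

def class_thresholds(mean_conf, t_min=0.8, t_max=0.998):
    """Hypothetical mapping (an assumption, not from the paper):
    classes with higher mean confidence receive lower thresholds."""
    lo, hi = mean_conf.min(), mean_conf.max()
    if hi > lo:
        scale = (mean_conf - lo) / (hi - lo)
    else:
        scale = np.zeros_like(mean_conf)
    return t_max - scale * (t_max - t_min)

def exit_mask(probs, thresholds):
    """True where a pixel's top probability meets the threshold of its predicted class."""
    pred = probs.argmax(axis=1)
    return probs.max(axis=1) >= thresholds[pred]

# Toy data: class 0 is predicted confidently, class 1 is not.
probs = np.array([[0.90, 0.10], [0.95, 0.05], [0.40, 0.60], [0.30, 0.70]])
labels = np.array([0, 0, 1, 1])
mean_conf = class_mean_confidence(probs, labels, num_classes=2)
thresholds = class_thresholds(mean_conf)
mask = exit_mask(probs, thresholds)
```

In this toy setup, pixels predicted as the easy class clear their lower threshold and could stop computing at the current exit, while pixels predicted as the harder class would continue to later exits.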

Pruning Early Exit Networks

Jul 08, 2022

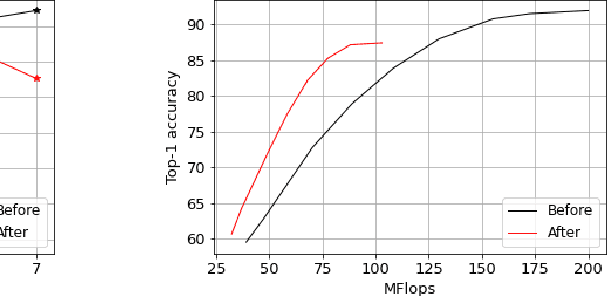

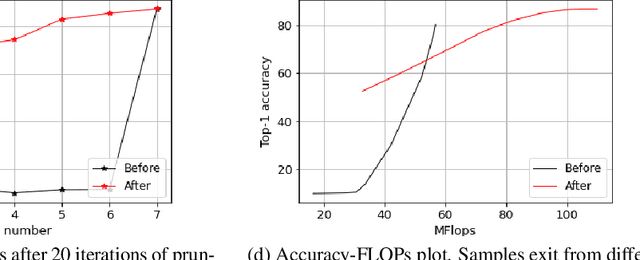

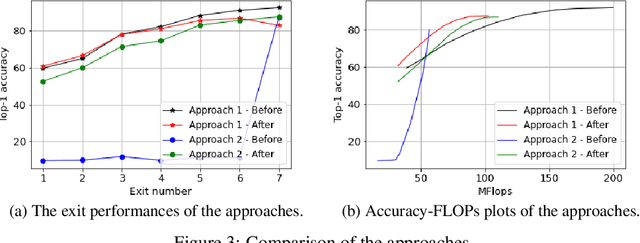

Abstract: Deep learning models that perform well often have high computational costs. In this paper, we combine two approaches that try to reduce the computational cost while keeping the model performance high: pruning and early exit networks. We evaluate two approaches to pruning early exit networks: (1) pruning the entire network at once, and (2) pruning the base network and the additional linear classifiers in an ordered fashion. Experimental results show that pruning the entire network at once is a better strategy in general. However, at high accuracy rates, the two approaches have similar performance, which implies that the processes of pruning and early exit can be separated without loss of optimality.
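The difference between the two strategies can be sketched with unstructured magnitude pruning on plain NumPy arrays. This is only an illustration under stated assumptions: the abstract does not name the pruning criterion, so magnitude pruning is swapped in here, any retraining between steps is omitted, and the helper names and the `base`/`exits` weight dictionaries (assumed to have disjoint keys) are hypothetical.

```python
import numpy as np

def magnitude_mask(weights, sparsity):
    """Keep-masks that drop the smallest |w| across all given arrays.

    Ties at the cutoff are also dropped, so slightly more than the
    requested fraction may be pruned.
    """
    flat = np.concatenate([np.abs(w).ravel() for w in weights.values()])
    k = int(sparsity * flat.size)
    if k == 0:
        return {n: np.ones_like(w, dtype=bool) for n, w in weights.items()}
    cutoff = np.partition(flat, k - 1)[k - 1]  # k-th smallest magnitude
    return {n: np.abs(w) > cutoff for n, w in weights.items()}

def prune_at_once(base, exits, sparsity):
    """Strategy (1): a single cutoff over the base network and exit classifiers."""
    all_w = {**base, **exits}
    mask = magnitude_mask(all_w, sparsity)
    return {n: w * mask[n] for n, w in all_w.items()}

def prune_ordered(base, exits, sparsity):
    """Strategy (2): prune the base network first, then the exit classifiers."""
    pruned = {}
    for group in (base, exits):
        mask = magnitude_mask(group, sparsity)
        pruned.update({n: w * mask[n] for n, w in group.items()})
    return pruned

# Toy weights: the base layer has much smaller magnitudes than the exit classifier.
base = {"conv": np.array([0.1, 0.2, 0.3, 0.4])}
exits = {"exit": np.array([1.0, 2.0, 3.0, 4.0])}
at_once = prune_at_once(base, exits, sparsity=0.5)
ordered = prune_ordered(base, exits, sparsity=0.5)
```

With these toy weights, the joint cutoff removes the entire base layer and leaves the exit classifier untouched, while the ordered variant removes half of each. This shows only how the sparsity budget is distributed differently; it says nothing about which strategy yields better accuracy.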
