Aliyu Umar

Detecting Dark Patterns in User Interfaces Using Logistic Regression and Bag-of-Words Representation

Dec 09, 2024

Abstract: Dark patterns in user interfaces represent deceptive design practices intended to manipulate users' behavior, often leading to unintended consequences such as coerced purchases, involuntary data disclosures, or user frustration. Detecting and mitigating these dark patterns is crucial for promoting transparency, trust, and ethical design practices in digital environments. This paper proposes a novel approach for detecting dark patterns in user interfaces using logistic regression and bag-of-words representation. Our methodology involves collecting a diverse dataset of user interface text samples, preprocessing the data, extracting text features using the bag-of-words representation, training a logistic regression model, and evaluating its performance using various metrics such as accuracy, precision, recall, F1-score, and the area under the ROC curve (AUC). Experimental results demonstrate the effectiveness of the proposed approach in accurately identifying instances of dark patterns, with high predictive performance and robustness to variations in dataset composition and model parameters. The insights gained from this study contribute to the growing body of knowledge on dark pattern detection and classification, offering practical implications for designers, developers, and policymakers in promoting ethical design practices and protecting user rights in digital environments.
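The pipeline this abstract describes (bag-of-words features, a logistic regression classifier, and precision/recall/F1 scoring) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the six interface strings and their labels are invented for the example, the tokenizer and the per-sample gradient descent loop are simplifications, and every function name here is hypothetical.

```python
import math
from collections import Counter

def tokenize(text):
    # Lowercase and keep alphabetic runs only (a simplifying assumption).
    cleaned = "".join(c.lower() if c.isalpha() else " " for c in text)
    return cleaned.split()

def build_vocab(texts):
    # Map each distinct token to a column index.
    vocab = {}
    for text in texts:
        for tok in tokenize(text):
            vocab.setdefault(tok, len(vocab))
    return vocab

def vectorize(text, vocab):
    # Bag-of-words: one count per vocabulary token; unknown tokens are ignored.
    vec = [0.0] * len(vocab)
    for tok, n in Counter(tokenize(text)).items():
        if tok in vocab:
            vec[vocab[tok]] = float(n)
    return vec

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(X, y, lr=0.5, epochs=300):
    # Per-sample gradient descent on the logistic (cross-entropy) loss.
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            w = [wj - lr * err * xj for wj, xj in zip(w, xi)]
            b -= lr * err
    return w, b

def predict(text, vocab, w, b):
    x = vectorize(text, vocab)
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0

def precision_recall_f1(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Invented toy data: 1 = dark pattern, 0 = benign interface text.
texts = [
    "Hurry only 2 left in stock",
    "No thanks I do not want to save money",
    "Offer ends in 5 minutes buy now",
    "Your order has been shipped",
    "View your account settings",
    "Contact customer support",
]
labels = [1, 1, 1, 0, 0, 0]

vocab = build_vocab(texts)
w, b = train([vectorize(t, vocab) for t in texts], labels)
preds = [predict(t, vocab, w, b) for t in texts]
precision, recall, f1 = precision_recall_f1(labels, preds)
```

Scoring on the training strings, as done here, only confirms that the model fits the toy data; a real evaluation would use a held-out test split and would also report accuracy and AUC, as the abstract lists.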

DualKanbaFormer: Kolmogorov-Arnold Networks and State Space Model Transformer for Multimodal Aspect-based Sentiment Analysis

Aug 30, 2024

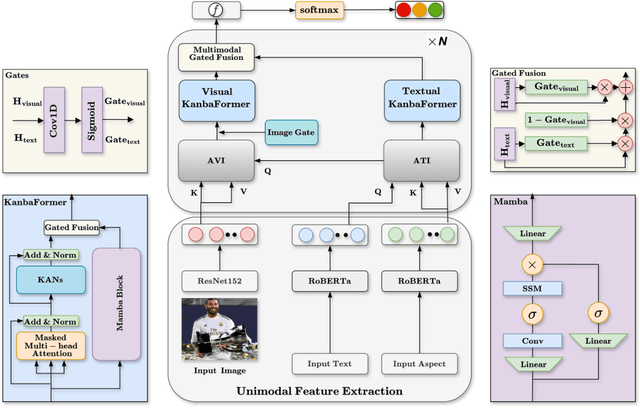

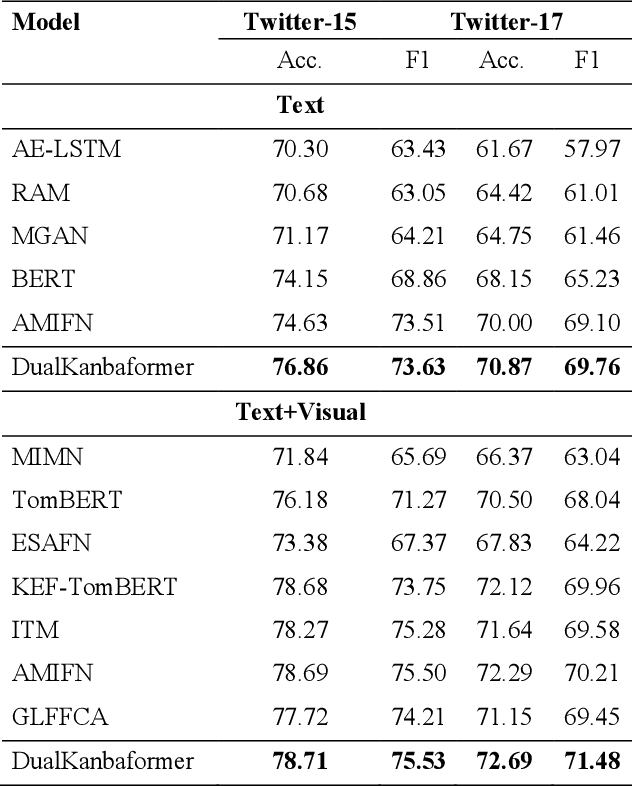

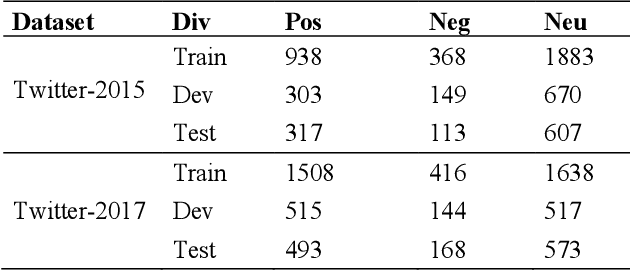

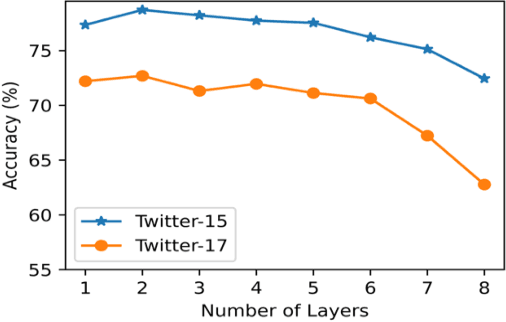

Abstract: Multimodal aspect-based sentiment analysis (MABSA) enhances sentiment detection by combining text with other data types like images. However, despite setting significant benchmarks, attention mechanisms exhibit limitations in efficiently modelling long-range dependencies between aspect and opinion targets within the text. They also face challenges in capturing global-context dependencies for visual representations. To this end, we propose Kolmogorov-Arnold Networks (KANs) and Selective State Space model (Mamba) transformer (DualKanbaFormer), a novel architecture to address the above issues. We leverage the power of Mamba to capture global context dependencies, Multi-head Attention (MHA) to capture local context dependencies, and KANs to capture non-linear modelling patterns for both textual representations (textual KanbaFormer) and visual representations (visual KanbaFormer). Furthermore, we fuse the textual KanbaFormer and visual KanbaFormer with a gated fusion layer to capture the inter-modality dynamics. According to extensive experimental results, our model outperforms some state-of-the-art (SOTA) studies on two public datasets.
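The abstract does not say how the gated fusion layer is parameterized. As a rough illustration of the general idea only, the sketch below uses a common formulation in which a sigmoid gate, computed from the concatenated text and image vectors, decides per dimension how much of each modality to keep. The linear gate, the function name `gated_fusion`, and the parameters `W` and `b` are assumptions made for this example and are not taken from the paper.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def gated_fusion(text_vec, image_vec, W, b):
    """Fuse two equal-length vectors with a learned per-dimension gate.

    gate  = sigmoid(W @ [text; image] + b)      (assumed linear gate)
    fused = gate * text + (1 - gate) * image
    W has one row per output dimension and 2 * d columns.
    """
    concat = text_vec + image_vec  # list concatenation: [text; image]
    fused = []
    for i, (t, v) in enumerate(zip(text_vec, image_vec)):
        z = sum(wij * cj for wij, cj in zip(W[i], concat)) + b[i]
        g = sigmoid(z)
        fused.append(g * t + (1.0 - g) * v)
    return fused

# Hypothetical 2-dimensional features for one aspect.
text_feat = [1.0, 2.0]
image_feat = [3.0, 4.0]

# With zero weights and biases the gate is 0.5, so fusion is a plain average.
zero_W = [[0.0] * 4 for _ in range(2)]
balanced = gated_fusion(text_feat, image_feat, zero_W, [0.0, 0.0])

# A large positive bias saturates the gate and keeps the text features.
text_only = gated_fusion(text_feat, image_feat, zero_W, [50.0, 50.0])
```

In a trained model `W` and `b` would be learned jointly with the rest of the network, so the gate can lean on the image for some aspects and on the text for others.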

MambaForGCN: Enhancing Long-Range Dependency with State Space Model and Kolmogorov-Arnold Networks for Aspect-Based Sentiment Analysis

Jul 14, 2024

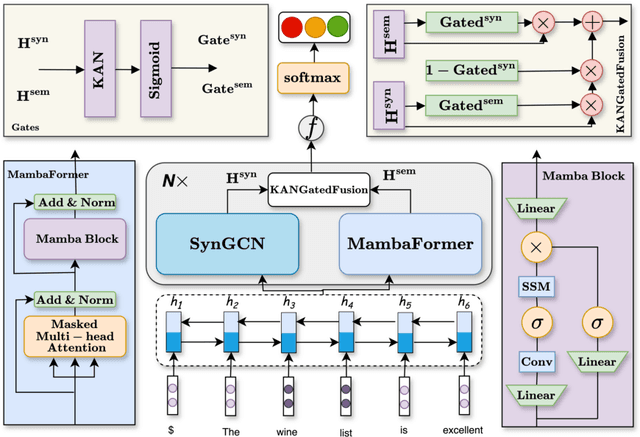

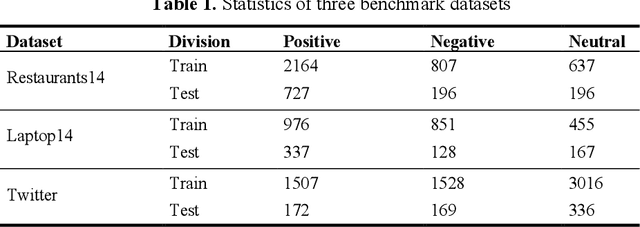

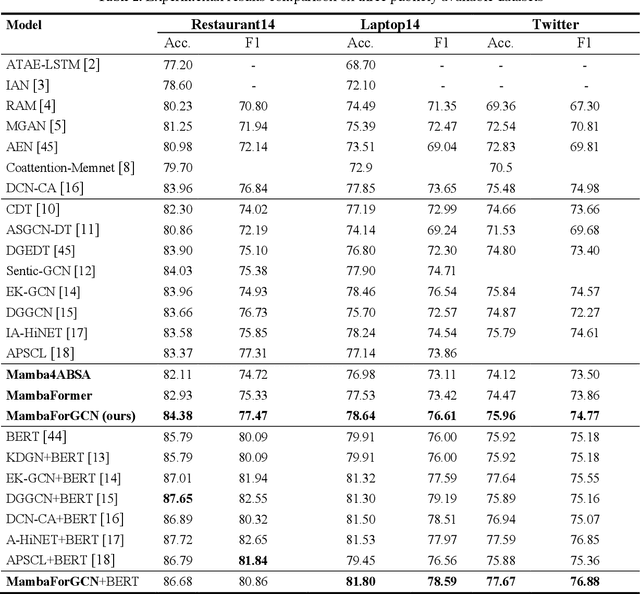

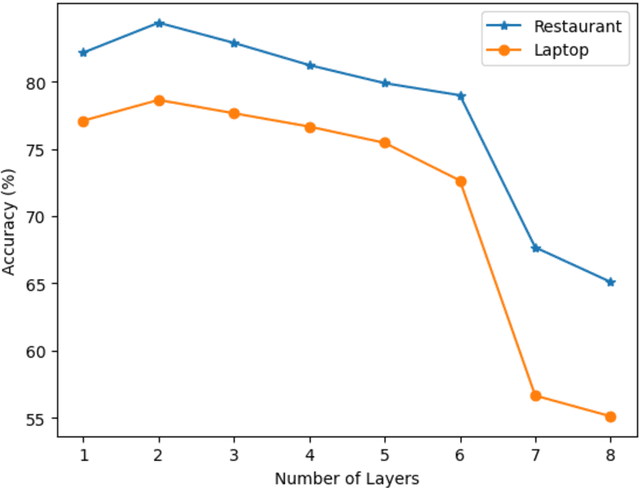

Abstract: Aspect-based sentiment analysis (ABSA) identifies and evaluates sentiments toward specific aspects of entities within text, providing detailed insights beyond overall sentiment. However, attention mechanisms and neural network models struggle with syntactic constraints, and the quadratic complexity of attention mechanisms hinders their adoption for capturing long-range dependencies between aspect and opinion words in ABSA. This complexity can lead to the misinterpretation of irrelevant contextual words, restricting their effectiveness to short-range dependencies. Some studies have investigated merging semantic and syntactic approaches but face challenges in effectively integrating these methods. To address the above problems, we present MambaForGCN, a novel approach to enhance short- and long-range dependencies between aspect and opinion words in ABSA. This innovative approach incorporates syntax-based Graph Convolutional Network (SynGCN) and MambaFormer (Mamba-Transformer) modules to encode input with dependency relations and semantic information. The Multihead Attention (MHA) and Mamba blocks in the MambaFormer module serve as channels to enhance the model with short- and long-range dependencies between aspect and opinion words. We also introduce the Kolmogorov-Arnold Networks (KANs) gated fusion, an adaptively integrated feature representation system combining SynGCN and MambaFormer representations. Experimental results on three benchmark datasets demonstrate MambaForGCN's effectiveness, outperforming state-of-the-art (SOTA) baseline models.
