Ali Taghavirashidizadeh

Analysis of liver cancer detection based on image processing

Jul 16, 2022

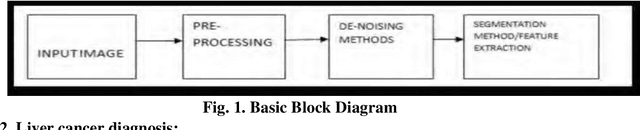

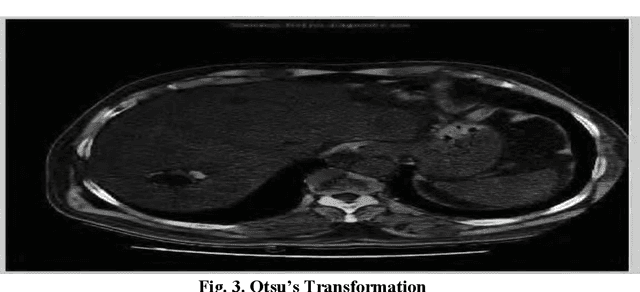

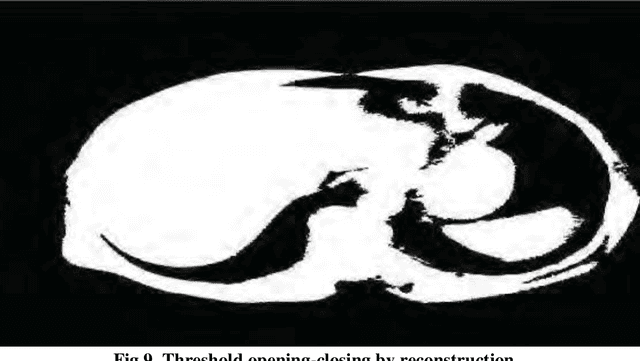

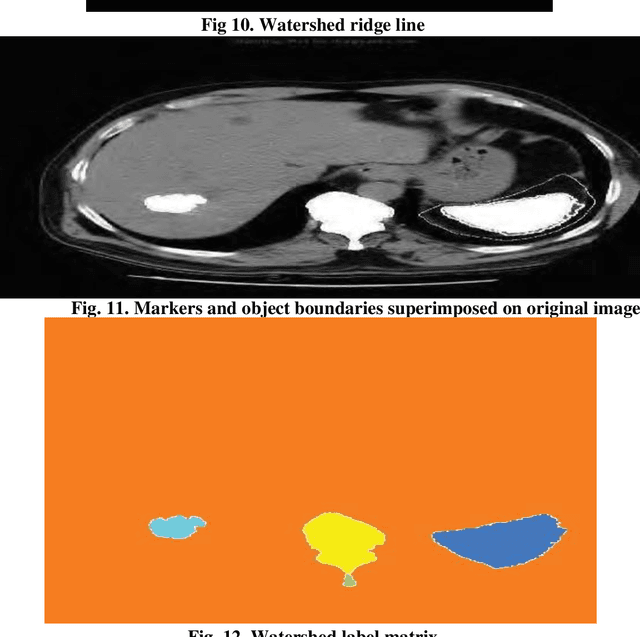

Abstract: Medical imaging is the most important tool in medicine for detecting complications inside the body. With the development of image processing technology and the shift toward higher-resolution images in digital medical imaging, efficient and accurate segmentation systems are needed. Such systems are essential for real-world images, which for a variety of reasons suffer from intensity inhomogeneity, noise, and poor contrast. Digital image segmentation is used in medicine for diagnostic and therapeutic analysis and is very helpful to physicians. In this study, we focus on liver cancer images, with the aim of detecting liver lesions or tumors more accurately, because accurate and timely detection of a tumor is critical to the patient's survival. The aim of this paper is to simplify the difficult problems involved in studying MR images. The liver is the second most common organ involved in metastatic disease, and liver cancer is one of the leading causes of death worldwide. A person cannot survive without a healthy liver. Liver cancer is a life-threatening disease whose detection is very challenging for both medical and engineering specialists. Medical image processing is used as a non-invasive method to detect tumors. The chances of survival for a patient with a liver tumor depend heavily on early detection of the tumor and its classification as cancerous or noncancerous. Image processing techniques for automatic tumor detection include pre-processing and enhancement, image segmentation, classification, and volume calculation. Many techniques have been developed for liver tumor detection, and various detection algorithms and methodologies have been used for tumor diagnosis. This paper presents a novel methodology for the detection and diagnosis of liver tumors.
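The pipeline stages named in this abstract (pre-processing and enhancement, segmentation, volume calculation) can be illustrated with a minimal Python sketch. It is not the paper's methodology: it assumes NumPy is available, that the lesion appears brighter than the surrounding tissue on each slice, and that a single global threshold is adequate. The function names, the 0.5 threshold, and the voxel spacing are hypothetical, and the classification step (cancerous vs. noncancerous) is omitted.

```python
from __future__ import annotations

import numpy as np


def stretch_contrast(volume: np.ndarray) -> np.ndarray:
    """Enhancement: linearly rescale intensities to the range [0, 1]."""
    lo, hi = float(volume.min()), float(volume.max())
    if hi == lo:
        return np.zeros(volume.shape, dtype=float)
    return (volume.astype(float) - lo) / (hi - lo)


def mean_filter(slice2d: np.ndarray) -> np.ndarray:
    """Pre-processing: 3x3 mean filter (edge-padded) to suppress noise on one slice."""
    padded = np.pad(slice2d, 1, mode="edge")
    h, w = slice2d.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0


def segment_lesion(volume: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Segmentation: hypothetical rule that the lesion is brighter than its surroundings."""
    enhanced = stretch_contrast(volume)
    smoothed = np.stack([mean_filter(s) for s in enhanced])  # filter each slice separately
    return smoothed > threshold


def lesion_volume_ml(mask: np.ndarray, spacing_mm: tuple[float, float, float]) -> float:
    """Volume calculation: voxel count times voxel volume, converted from mm^3 to mL."""
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    return float(mask.sum()) * voxel_mm3 / 1000.0


# Synthetic volume: 3 slices of 10x10 background, each with a bright 4x4 "lesion".
vol = np.zeros((3, 10, 10))
vol[:, 3:7, 3:7] = 100.0
mask = segment_lesion(vol)
print(int(mask.sum()), lesion_volume_ml(mask, (0.5, 0.5, 2.0)))
```

The smoothing step trades boundary precision for noise robustness: on the synthetic 4 x 4 square, the 3 x 3 mean filter pushes the four corner pixels of each slice below the 0.5 threshold, so they drop out of the mask.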

Analysis of the attack and its solution in wireless sensor networks

Jul 16, 2022

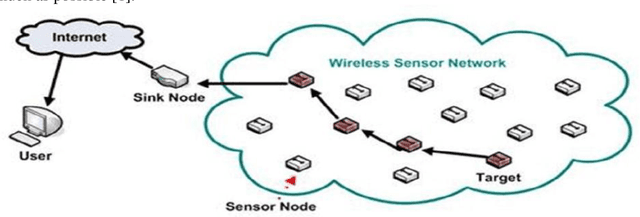

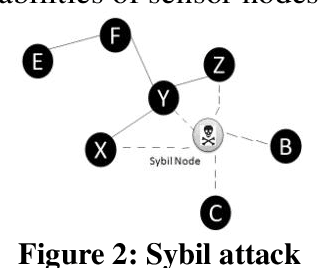

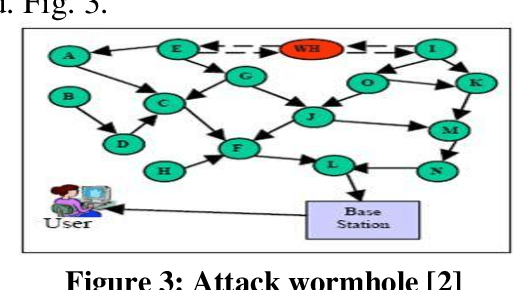

Abstract: Several years ago, wireless sensor networks were used only by the military. These networks have many uses but are subject to limitations, the most important of which is energy. This energy constraint requires that both the number and the length of the messages exchanged between sensors be kept low. Sensor networks do not have a stable topology: because they are deployed in inaccessible environments, the topology changes continually as nodes disappear or are added. Topology maintenance is performed in three phases: pre-deployment, deployment, and post-deployment. The sensor nodes are scattered across the field, and their data are transmitted to the sink over multihop connections. All nodes in the network, as well as the sink, use the sensor network's communication protocol stack, which consists of five layers and three management planes. Because sensor networks are inaccessible and difficult to protect physically, they require dedicated security mechanisms. Conventional security mechanisms are inefficient here because of the inherent limitations of sensor nodes. Within their energy and resource constraints, sensor nodes must still meet security requirements such as data confidentiality, data integrity, authentication, and time synchronization. Many organizations currently use wireless sensor networks for purposes such as air and pollution monitoring, traffic control, and healthcare. Security is the main concern in wireless sensor networks. In this article, I focus on the types of security attacks and their detection. The article outlines the security needs of wireless sensor networks and the attacks against them, and it also describes the relevant security criteria.
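The integrity and authentication requirements, together with the need to keep messages short, can be illustrated with a minimal Python sketch built on the standard-library `hmac` module. This is an assumption-laden illustration rather than a scheme from the article: each node is assumed to share a pre-distributed key with the sink, the packet layout (2-byte node ID, 4-byte counter, payload, 8-byte truncated HMAC-SHA256 tag) and the names `seal` and `SinkVerifier` are hypothetical, and confidentiality (encryption) is not covered. A monotonically increasing counter lets the sink detect replayed packets.

```python
from __future__ import annotations

import hashlib
import hmac
import struct

HEADER = struct.Struct(">HI")  # hypothetical layout: 2-byte node ID, 4-byte message counter
TAG_LEN = 8  # truncated HMAC-SHA256 tag length in bytes (assumption)


def seal(key: bytes, node_id: int, counter: int, payload: bytes) -> bytes:
    """Sensor side: append a truncated MAC over header + payload."""
    header = HEADER.pack(node_id, counter)
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()[:TAG_LEN]
    return header + payload + tag


class SinkVerifier:
    """Sink side: rejects forged, tampered, or replayed packets."""

    def __init__(self, keys: dict[int, bytes]) -> None:
        self.keys = keys  # pre-shared key per node ID (assumed key pre-distribution)
        self.last_counter: dict[int, int] = {}

    def accept(self, packet: bytes) -> bytes | None:
        if len(packet) < HEADER.size + TAG_LEN:
            return None  # too short to be well-formed
        header = packet[:HEADER.size]
        body, tag = packet[HEADER.size:-TAG_LEN], packet[-TAG_LEN:]
        node_id, counter = HEADER.unpack(header)
        key = self.keys.get(node_id)
        if key is None:
            return None  # unknown sender ID
        expected = hmac.new(key, header + body, hashlib.sha256).digest()[:TAG_LEN]
        if not hmac.compare_digest(expected, tag):
            return None  # MAC mismatch: packet was altered or forged
        if counter <= self.last_counter.get(node_id, -1):
            return None  # counter not fresh: treated as a replay
        self.last_counter[node_id] = counter
        return body


key = bytes(16)  # placeholder all-zero key, for the demo only
sink = SinkVerifier({7: key})
packet = seal(key, 7, 1, b"temp=21")
print(sink.accept(packet), sink.accept(packet))  # the second call is a replay
```

Truncating the tag shortens every packet, which matters under the energy constraint described in the abstract, at the cost of a smaller margin against forgery; a real deployment would also need a key-distribution scheme.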

DQRE-SCnet: A novel hybrid approach for selecting users in Federated Learning with Deep-Q-Reinforcement Learning based on Spectral Clustering

Nov 07, 2021

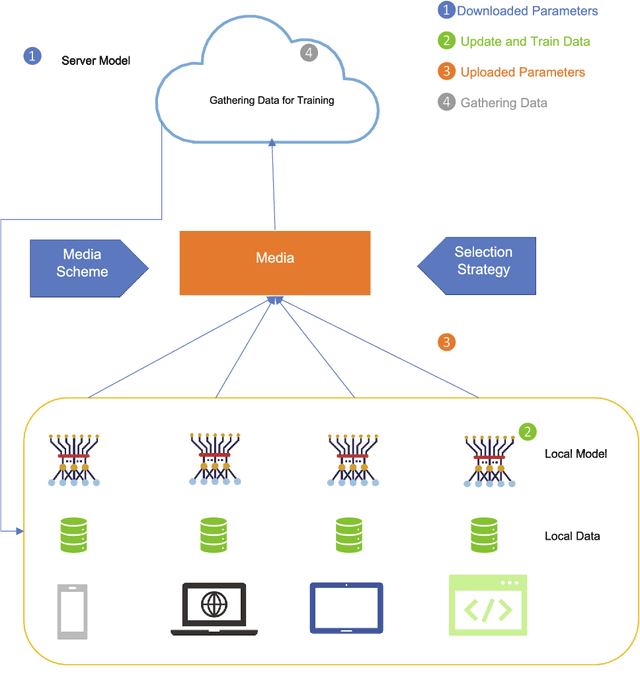

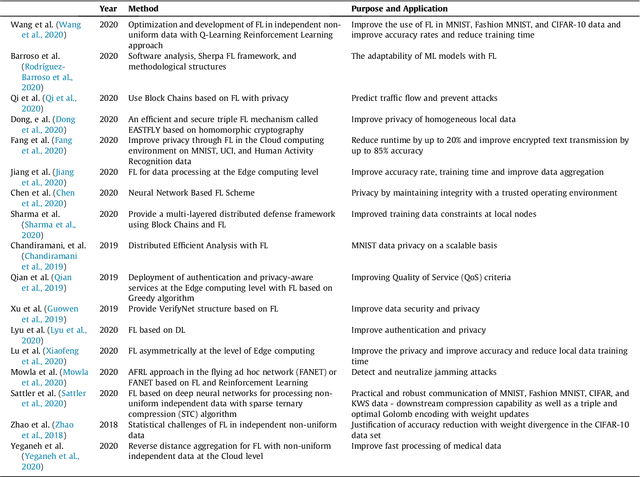

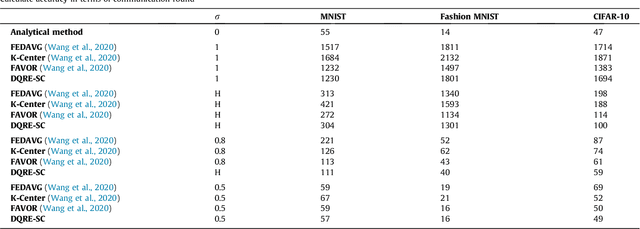

Abstract: Machine learning models trained on sensitive real-world data promise advances in areas ranging from medical screening and disease outbreaks to agriculture, industry, defense science, and more. In many applications, learning participants benefit from collecting their own private datasets, training detailed machine learning models on the real data, and sharing the benefits of these models over successive communication rounds. Because of privacy and security concerns, however, most people avoid sharing sensitive data for training. Federated Learning allows multiple parties to jointly train a machine learning model without any user revealing their local data to a central server. This privacy-preserving collaborative learning comes at the cost of significant communication during training. Most large-scale machine learning applications require decentralized learning on datasets generated across many devices and locations. Such datasets pose a major obstacle to decentralized learning, because their diverse contexts lead to significant differences in data distribution across devices and locations. Researchers have proposed several ways to achieve data privacy in Federated Learning systems. However, challenges remain with heterogeneous local data. The approach of this research is to select the nodes (users) that participate in Federated Learning so that the data are balanced toward an independent (IID-like) distribution, improving accuracy, reducing training time, and speeding up convergence. To this end, this research presents a combined Deep-Q-Reinforcement Learning Ensemble based on Spectral Clustering, called DQRE-SCnet, to choose a subset of devices in each communication round. The results show that it is possible to decrease the number of communication rounds needed in Federated Learning.
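The spectral-clustering half of this approach can be sketched in Python with NumPy. This is a hedged illustration, not DQRE-SCnet itself: clients are assumed to be described by label-histogram vectors, the Gaussian bandwidth `sigma` and the helper names `spectral_clusters` and `select_clients` are hypothetical, and the Deep-Q-Reinforcement Learning policy is replaced by a plain stand-in that picks one random client from each cluster per communication round. Grouping clients with similar data distributions and sampling across groups is one way to give each round a more balanced mix of data.

```python
from __future__ import annotations

import random

import numpy as np


def spectral_clusters(features: np.ndarray, k: int, sigma: float = 0.2, iters: int = 20) -> np.ndarray:
    """Group clients (rows of `features`) into k clusters via normalized spectral clustering."""
    # Gaussian (RBF) affinity between every pair of clients, with no self-loops.
    sq_dists = ((features[:, None, :] - features[None, :, :]) ** 2).sum(axis=-1)
    w = np.exp(-sq_dists / (2.0 * sigma**2))
    np.fill_diagonal(w, 0.0)

    # Symmetric normalized Laplacian: L = I - D^{-1/2} W D^{-1/2}.
    d_inv_sqrt = 1.0 / np.sqrt(w.sum(axis=1) + 1e-12)
    lap = np.eye(len(w)) - d_inv_sqrt[:, None] * w * d_inv_sqrt[None, :]

    # eigh returns eigenvalues in ascending order; keep the eigenvectors of the k smallest.
    _, vecs = np.linalg.eigh(lap)
    emb = vecs[:, :k]
    emb = emb / (np.linalg.norm(emb, axis=1, keepdims=True) + 1e-12)

    # Small k-means on the spectral embedding with deterministic farthest-point seeding.
    centers = [emb[0]]
    while len(centers) < k:
        dist = np.min([((emb - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(emb[int(np.argmax(dist))])
    centers = np.array(centers)
    labels = np.zeros(len(emb), dtype=int)
    for _ in range(iters):
        labels = np.argmin(((emb[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1), axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = emb[labels == j].mean(axis=0)
    return labels


def select_clients(labels: np.ndarray, rng: random.Random) -> list[int]:
    """Hypothetical stand-in for the learned policy: one random client per cluster."""
    chosen = []
    for cluster in sorted(set(labels.tolist())):
        members = [i for i, lab in enumerate(labels.tolist()) if lab == cluster]
        chosen.append(rng.choice(members))
    return chosen


# Hypothetical label histograms: clients 0-2 hold mostly class 0, clients 3-5 mostly class 1.
hist = np.array([[0.9, 0.1], [0.85, 0.15], [0.95, 0.05],
                 [0.1, 0.9], [0.15, 0.85], [0.05, 0.95]])
labels = spectral_clusters(hist, k=2)
print(labels, select_clients(labels, random.Random(0)))
```

In a fuller implementation, the per-cluster random choice would give way to a learned selection policy; the sketch only shows how spectral clustering can organize clients so that each round samples across distinct data distributions.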
