Ali Alshaarawy

PRUNIX: Non-Ideality Aware Convolutional Neural Network Pruning for Memristive Accelerators

Feb 03, 2022

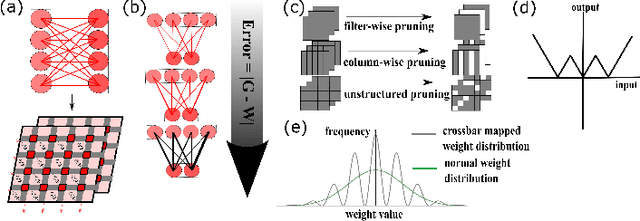

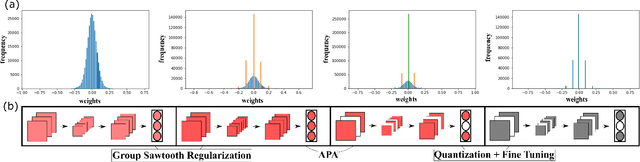

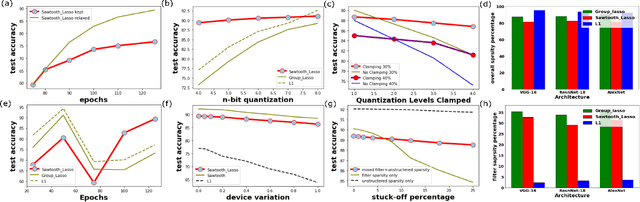

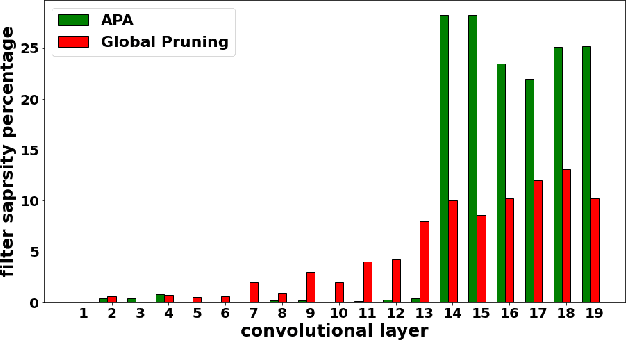

Abstract: In this work, we propose PRUNIX, a framework for training and pruning convolutional neural networks (CNNs) for deployment on memristor-crossbar-based accelerators. PRUNIX takes into account the non-ideal effects of memristor crossbars, including weight quantization, state drift, aging, and stuck-at faults. PRUNIX utilises a novel Group Sawtooth Regularization, intended to improve both non-ideality tolerance and sparsity, and a novel Adaptive Pruning Algorithm (APA), intended to minimise accuracy loss by considering how sensitive each layer of a CNN is to pruning. We compare our regularization and pruning methods with other standard methods on multiple CNN architectures and observe a 13% improvement in test accuracy when quantization and other non-ideal effects are accounted for, at an overall sparsity of 85%, which is similar to that of other methods.
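To make the non-ideal effects named in the abstract concrete, the following is a minimal, generic sketch of how weight quantization, stuck-at faults, and a target sparsity might be simulated on a flat list of weights. It is not the PRUNIX implementation: Group Sawtooth Regularization and the Adaptive Pruning Algorithm are not reproduced here, and the function names (`quantize`, `inject_stuck_at_faults`, `magnitude_prune`), the uniform quantization grid, the fault model, and plain magnitude pruning are all illustrative assumptions.

```python
import random


def quantize(weights, levels, w_min, w_max):
    """Snap each weight to the nearest of `levels` evenly spaced values in [w_min, w_max].

    Assumption: a uniform grid stands in for the discrete conductance states
    of a memristor cell.
    """
    step = (w_max - w_min) / (levels - 1)
    out = []
    for w in weights:
        clipped = min(max(w, w_min), w_max)
        index = round((clipped - w_min) / step)
        out.append(w_min + index * step)
    return out


def inject_stuck_at_faults(weights, fault_rate, w_min, w_max, rng):
    """With probability `fault_rate`, replace a weight by w_min or w_max.

    Assumption: a faulty cell is stuck at one of the two extreme states,
    chosen with equal probability.
    """
    out = []
    for w in weights:
        if rng.random() < fault_rate:
            out.append(rng.choice((w_min, w_max)))
        else:
            out.append(w)
    return out


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude.

    This is plain magnitude pruning, used here only as a stand-in baseline.
    """
    n_prune = round(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(20)]
    sparse = magnitude_prune(weights, 0.85)  # 85% overall sparsity, as in the abstract
    deployed = inject_stuck_at_faults(
        quantize(sparse, levels=16, w_min=-1.0, w_max=1.0),
        fault_rate=0.05, w_min=-1.0, w_max=1.0, rng=rng,
    )
    print(deployed)
```

In a sketch like this, the gap between a network's accuracy with the original weights and with the `deployed` weights is what a non-ideality-aware training scheme would try to shrink.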
