Alexis Ducarouge

To Share or Not To Share: A Comprehensive Appraisal of Weight-Sharing

Feb 11, 2020

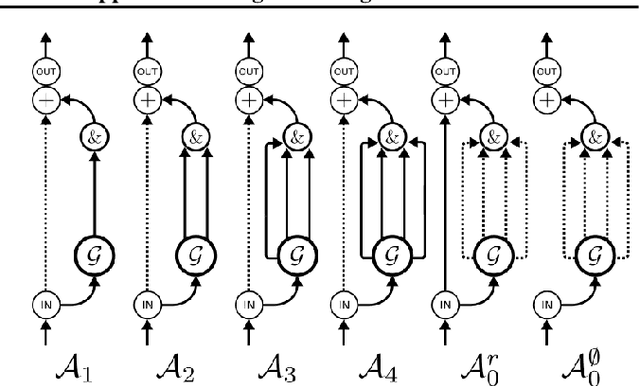

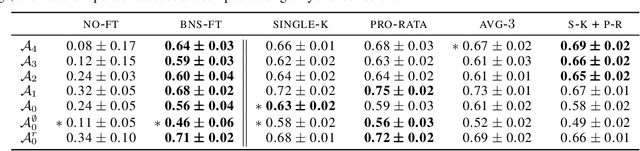

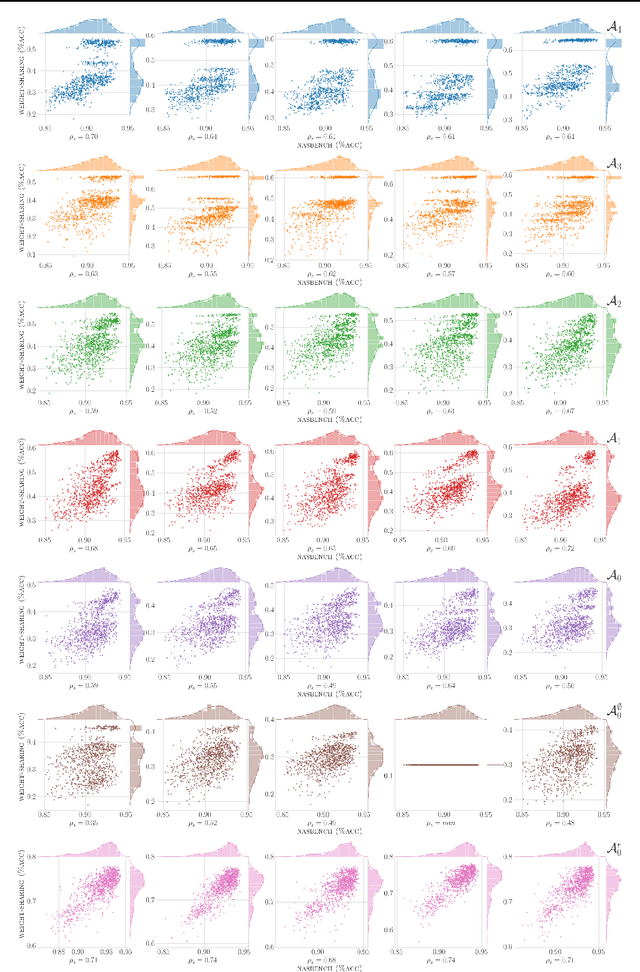

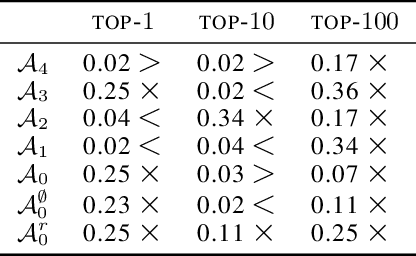

Abstract: Weight-sharing (WS) has recently emerged as a paradigm to accelerate the automated search for efficient neural architectures, a process dubbed Neural Architecture Search (NAS). Although very appealing, this framework is not without drawbacks, and several works have started to question its capabilities on small hand-crafted benchmarks. In this paper, we take advantage of the NASBench-101 dataset to challenge the efficiency of WS on a representative search space. By comparing a SOTA WS approach to a plain random search, we show that, despite decent correlations between evaluations using weight-sharing and standalone ones, WS is only rarely helpful to NAS. In particular, we highlight how strongly the benefits depend on the search space itself.
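To make the notion of "correlation between weight-sharing and standalone evaluations" concrete, here is a minimal sketch of one common way to measure it: a Kendall rank correlation between proxy scores and standalone accuracies. The abstract does not say which correlation measure the paper uses, so the choice of Kendall tau is an assumption, and the six accuracy values below are made up purely for illustration; they are not results from the paper or from NASBench-101.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall tau-a: (concordant - discordant) / number of pairs.

    Tied pairs count as neither concordant nor discordant.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        sign = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical accuracies for six architectures (illustrative values only).
ws_proxy = [0.61, 0.64, 0.58, 0.70, 0.66, 0.69]          # evaluated with shared weights
standalone = [0.921, 0.930, 0.915, 0.928, 0.941, 0.935]  # trained from scratch

tau = kendall_tau(ws_proxy, standalone)

# Architecture the proxy would select vs. the truly best one.
picked = max(range(len(ws_proxy)), key=ws_proxy.__getitem__)
best = max(range(len(standalone)), key=standalone.__getitem__)

print(f"Kendall tau: {tau:.3f}")
print(f"picked by proxy: arch {picked}, standalone accuracy {standalone[picked]}")
print(f"best standalone: arch {best}, standalone accuracy {standalone[best]}")
```

In this toy data the two rankings agree on most pairs, yet the architecture ranked first by the proxy is not the one with the best standalone accuracy. That is one way a decent correlation can coexist with little practical gain over random search.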
