Alexandre Falcão

UNICAMP

Efficient Multiscale Object-based Superpixel Framework

Apr 07, 2022

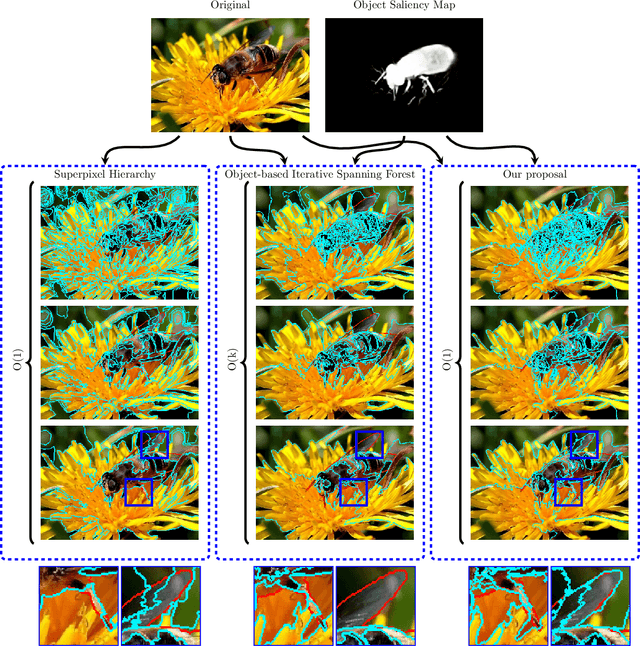

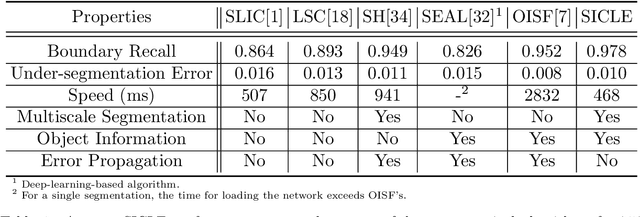

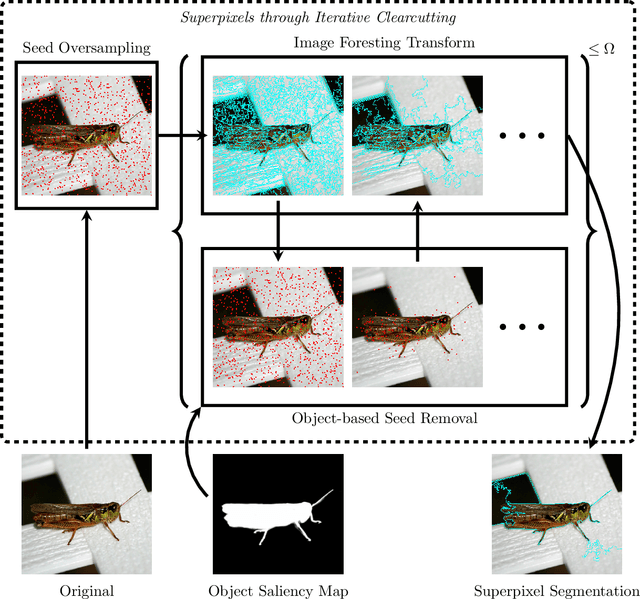

Abstract: Superpixel segmentation can be used as an intermediate step in many applications, often to improve object delineation and reduce computational workload. However, classical methods do not incorporate information about the desired object. Deep-learning-based approaches consider object information, but their delineation performance depends on data annotation. Additionally, the computational time of object-based methods is usually much higher than desired. In this work, we propose a novel superpixel framework, named Superpixels through Iterative CLEarcutting (SICLE), which exploits object information and can generate a multiscale segmentation on the fly. SICLE starts from seed oversampling and repeats optimal connectivity-based superpixel delineation and object-based seed removal until the desired number of superpixels is reached. It generalizes recent superpixel methods, surpassing them and other state-of-the-art approaches in efficiency and effectiveness according to multiple delineation metrics.
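
The loop described in the abstract (oversample seeds, delineate, remove seeds using object information, repeat) can be illustrated with a toy sketch. This is not the authors' implementation: nearest-seed assignment stands in for the optimal connectivity-based delineation, seed relevance is assumed to be the mean value of a given object saliency map inside each superpixel, and the names `sicle_like`, `n_initial`, `n_final`, and `keep_ratio` are hypothetical.

```python
import math

def sicle_like(saliency, n_initial, n_final, keep_ratio=0.5):
    """Toy oversample/delineate/remove loop; returns one label map per scale."""
    h, w = len(saliency), len(saliency[0])
    # Seed oversampling: a regular grid of roughly n_initial seeds (assumption).
    step = max(1, int(math.sqrt(h * w / n_initial)))
    seeds = [(y, x) for y in range(step // 2, h, step)
                    for x in range(step // 2, w, step)]
    scales = []
    while True:
        # Delineation stand-in: each pixel takes the index of its nearest seed.
        labels = [[min(range(len(seeds)),
                       key=lambda i: (seeds[i][0] - y) ** 2 + (seeds[i][1] - x) ** 2)
                   for x in range(w)] for y in range(h)]
        scales.append(labels)
        if len(seeds) <= n_final:
            return scales
        # Assumed object-based relevance: mean saliency inside each superpixel.
        total = [0.0] * len(seeds)
        count = [0] * len(seeds)
        for y in range(h):
            for x in range(w):
                total[labels[y][x]] += saliency[y][x]
                count[labels[y][x]] += 1
        relevance = [t / c if c else 0.0 for t, c in zip(total, count)]
        # Keep the most relevant seeds, never dropping below n_final.
        n_keep = max(n_final, int(len(seeds) * keep_ratio))
        order = sorted(range(len(seeds)), key=lambda i: relevance[i], reverse=True)
        seeds = [seeds[i] for i in sorted(order[:n_keep])]

# Usage: an 8x8 saliency map whose right half is the "object".
saliency = [[1.0 if x >= 4 else 0.0 for x in range(8)] for _ in range(8)]
scales = sicle_like(saliency, n_initial=16, n_final=4)
sizes = [len({label for row in labels for label in row}) for labels in scales]
```

Each pass yields one segmentation scale, so the intermediate label maps form a multiscale result as a by-product of reaching the final superpixel count.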

Rethinking Interactive Image Segmentation: Feature Space Annotation

Jan 12, 2021

Abstract: Despite the progress of interactive image segmentation methods, high-quality pixel-level annotation is still time-consuming and laborious, a bottleneck for several deep learning applications. We take a step back to propose interactive and simultaneous segment annotation from multiple images, guided by feature space projection and optimized by metric learning as the labeling progresses. This strategy is in stark contrast to existing interactive segmentation methodologies, which perform annotation in the image domain. We show that our approach can surpass the accuracy of state-of-the-art methods on foreground segmentation datasets: iCoSeg, DAVIS, and Rooftop. Moreover, it achieves 91.5% accuracy on a known semantic segmentation dataset, Cityscapes, while being 74.75 times faster than the original annotation procedure. The appendix presents additional qualitative results. Code and video demonstration will be released upon publication.
