Alexander Zellner

What Do End-Users Really Want? Investigation of Human-Centered XAI for Mobile Health Apps

Oct 07, 2022

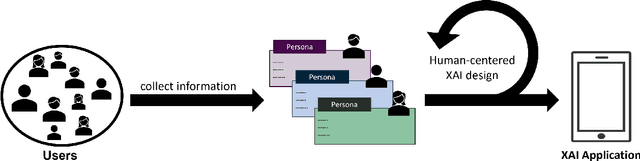

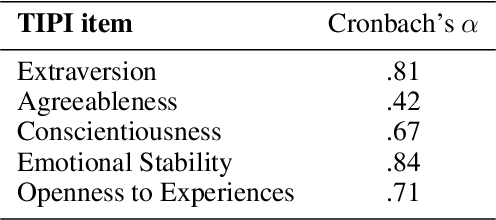

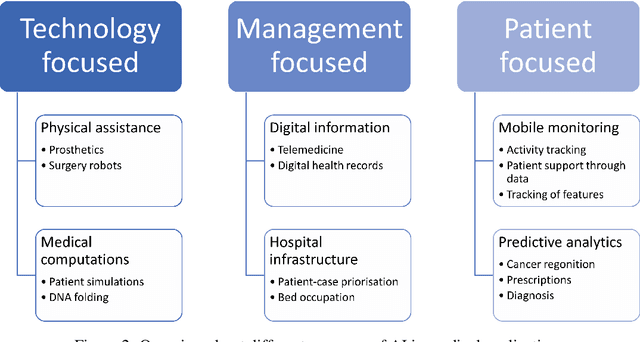

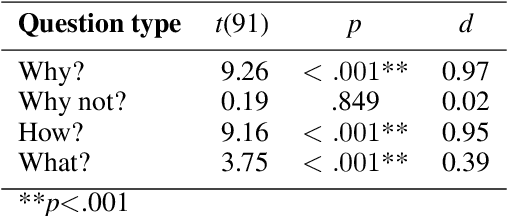

Abstract: In healthcare, AI systems support clinicians and patients in diagnosis, treatment, and monitoring, but the poor explainability of many systems remains a barrier to practical application. Overcoming this barrier is the goal of explainable AI (XAI). However, different users can perceive the same explanation differently, so an explanation may not solve the black-box problem for everyone. The domain of Human-Centered AI addresses this problem by adapting AI to its users. We present a user-centered persona concept to evaluate XAI and use it to investigate end-users' preferences for various explanation styles and contents in a mobile health stress monitoring application. The results of our online survey show that users' demographics and personality, as well as the type of explanation, impact explanation preferences, indicating that these are essential features for XAI design. We summarize the results in three prototypical user personas: power-, casual-, and privacy-oriented users. Our insights bring an interactive, human-centered XAI closer to practical application.
