Alexander Schäfer

The Gesture Authoring Space: Authoring Customised Hand Gestures for Grasping Virtual Objects in Immersive Virtual Environments

Jul 03, 2022

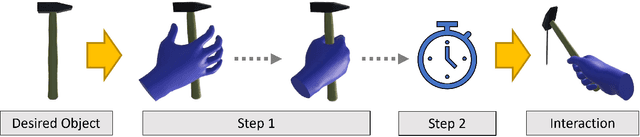

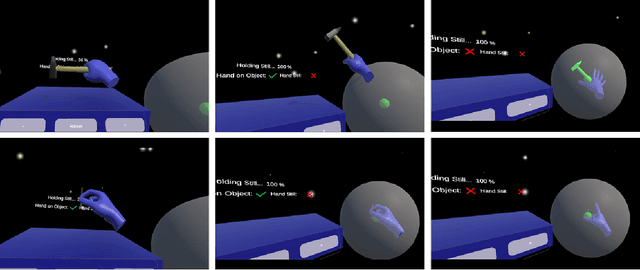

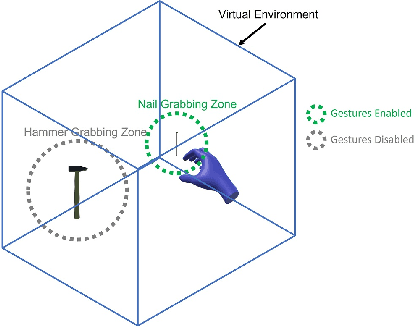

Abstract: Natural user interfaces are on the rise. Manufacturers of Augmented, Virtual, and Mixed Reality head-mounted displays are increasingly integrating new sensors into their consumer-grade products, allowing gesture recognition without additional hardware. This offers new possibilities for bare-handed interaction within virtual environments. This work proposes a hand gesture authoring tool for object-specific grab gestures, allowing virtual objects to be grabbed as in the real world. The presented solution uses template matching for gesture recognition and requires no technical knowledge to design and create custom-tailored hand gestures. In a user study, the proposed approach is compared with the pinch gesture and the controller for grasping virtual objects. The different grasping techniques are compared in terms of accuracy, task completion time, usability, and naturalness. The study showed that users perceive gestures created with the proposed approach as a more natural input modality than the others.
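The abstract names template matching as the recognition technique. As a rough illustration only, and not the tool's actual implementation, the sketch below assumes that a hand pose is a list of 3D joint positions (in metres) expressed in the wrist's local coordinate frame, that each authored gesture is stored as one such template, and that a pose matches the nearest template when the mean per-joint distance falls below a threshold. The names `pose_distance` and `match_gesture` and the 2 cm default threshold are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical representation: one (x, y, z) position per tracked joint,
# expressed relative to the wrist so the hand's world position does not matter.
Point = Tuple[float, float, float]


def pose_distance(a: List[Point], b: List[Point]) -> float:
    """Mean Euclidean distance between corresponding joints of two poses."""
    if len(a) != len(b):
        raise ValueError("poses must have the same number of joints")
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def match_gesture(
    pose: List[Point],
    templates: Dict[str, List[Point]],
    threshold: float = 0.02,  # assumed tolerance: 2 cm mean joint error
) -> Optional[str]:
    """Return the name of the closest template, or None if none is close enough."""
    best_name, best_dist = None, math.inf
    for name, template in templates.items():
        d = pose_distance(pose, template)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= threshold else None
```

In an authoring workflow of this kind, a user would record a template by holding the desired grip once (for example around a virtual mug), and at runtime every tracked frame would be passed to `match_gesture`; a grab would start only while the current pose matches the template associated with the targeted object.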

Learning Effect of Lay People in Gesture-Based Locomotion in Virtual Reality

Jun 16, 2022

Abstract: Locomotion in Virtual Reality (VR) is an important part of VR applications. Many researchers have contributed different techniques that enable locomotion in VR. Some of the most promising methods are gesture-based and do not require additional handheld hardware. Recent work has focused mostly on user preference and on the performance of the different locomotion techniques. This ignores the learning effect that users go through while exploring new methods. This work investigates whether, and how quickly, users can adapt to a hand gesture-based locomotion system in VR. Four different locomotion techniques are implemented and tested by participants. The goal of this paper is twofold: first, to encourage researchers to consider the learning effect in their studies; second, to provide insight into the learning effect of users in gesture-based systems.

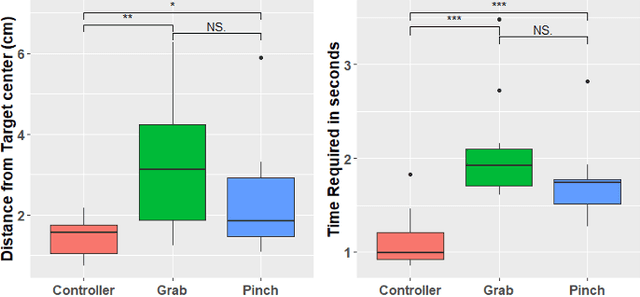

Comparing Controller With the Hand Gestures Pinch and Grab for Picking Up and Placing Virtual Objects

Feb 22, 2022

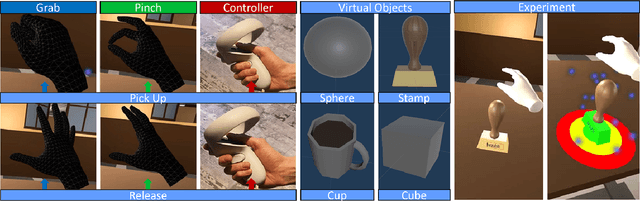

Abstract: Grabbing virtual objects is one of the essential tasks in Augmented, Virtual, and Mixed Reality applications. Modern applications usually use a simple pinch gesture for grabbing and moving objects. However, picking up objects by pinching has disadvantages: it can be an unnatural way to pick up objects, and it prevents the implementation of other gestures that would be performed with the thumb and index finger. Therefore, it is not the optimal choice for many applications. In this work, different implementations for grabbing and placing virtual objects are proposed, and their performance and accuracy are measured and compared.
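For context, a pinch grab is commonly detected by thresholding the distance between the thumb tip and the index fingertip. The sketch below is a generic illustration, not the paper's implementation; the `PinchDetector` class and the 2 cm press / 3 cm release thresholds are hypothetical. Two thresholds (hysteresis) keep the grab from flickering when the fingertips hover near a single cut-off. It also shows the drawback named in the abstract: any gesture that brings the thumb and index finger close together will trigger the grab.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # fingertip position in metres (assumed)


class PinchDetector:
    """Thumb-index pinch detection with separate press and release thresholds."""

    def __init__(self, press_dist: float = 0.02, release_dist: float = 0.03) -> None:
        if release_dist <= press_dist:
            raise ValueError("release_dist must exceed press_dist")
        self.press_dist = press_dist
        self.release_dist = release_dist
        self.pinching = False

    def update(self, thumb_tip: Point, index_tip: Point) -> bool:
        """Feed one tracked frame; return whether a pinch is currently held."""
        d = math.dist(thumb_tip, index_tip)
        if self.pinching:
            # Only release once the fingertips are clearly apart again.
            if d > self.release_dist:
                self.pinching = False
        elif d < self.press_dist:
            self.pinching = True
        return self.pinching
```

A fingertip distance of 2.5 cm therefore keeps an existing pinch held but does not start a new one.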

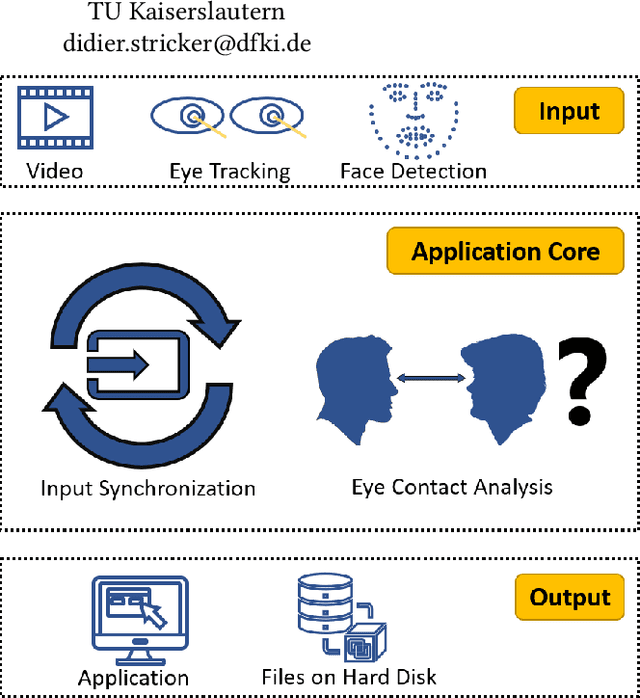

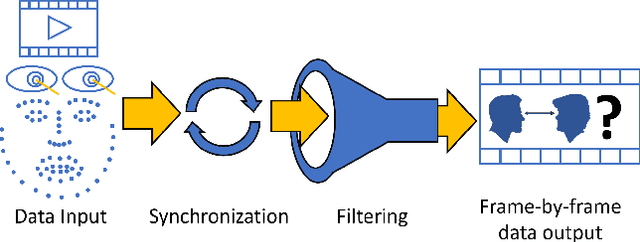

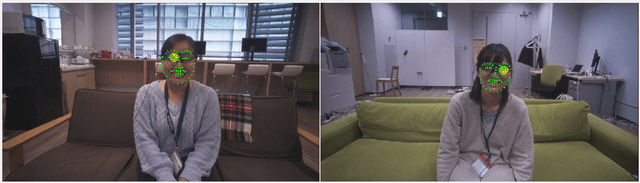

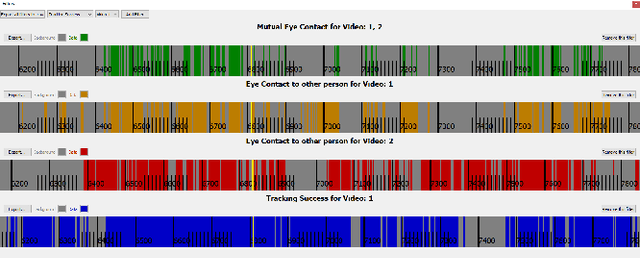

MutualEyeContact: A conversation analysis tool with focus on eye contact

Jul 09, 2021

Abstract: Eye contact between individuals is particularly important for understanding human behaviour. To further investigate the importance of eye contact in social interactions, portable eye-tracking technology seems to be a natural choice. However, the analysis of the available data can become quite complex. Scientists need data that is computed quickly and accurately. Additionally, the relevant data must be separated automatically to save time. In this work, we propose a tool called MutualEyeContact that excels at these tasks and can help scientists understand the importance of (mutual) eye contact in social interactions. We combine state-of-the-art eye tracking with machine-learning-based face recognition and provide a tool for the analysis and visualization of social interaction sessions. This work is a joint collaboration between computer scientists and cognitive scientists. It combines the fields of social and behavioural science with computer vision and deep learning.
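One plausible way to combine the two signals is sketched below under assumptions the abstract does not state: each participant wears an eye tracker whose gaze point is given in that tracker's scene-camera image, a face detector supplies a bounding box for the other person's face in the same image, and mutual eye contact is counted for frames in which both gaze points fall inside the other person's face box. The `Frame` layout and the function names are hypothetical. The final step groups consecutive frames into segments, a simple form of the automatic separation of relevant data that the abstract calls for.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Gaze = Tuple[float, float]               # (x, y) gaze point in pixels


@dataclass
class Frame:
    gaze_a: Optional[Gaze]      # A's gaze in A's scene camera (None = tracking lost)
    face_b_in_a: Optional[Box]  # B's face detected in A's scene camera
    gaze_b: Optional[Gaze]      # B's gaze in B's scene camera
    face_a_in_b: Optional[Box]  # A's face detected in B's scene camera


def looks_at(gaze: Optional[Gaze], box: Optional[Box]) -> bool:
    """True if the gaze point lies inside the face box; missing data counts as no contact."""
    if gaze is None or box is None:
        return False
    x, y = gaze
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def mutual_contact_segments(frames: List[Frame]) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) frame ranges of uninterrupted mutual eye contact."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, f in enumerate(frames):
        mutual = looks_at(f.gaze_a, f.face_b_in_a) and looks_at(f.gaze_b, f.face_a_in_b)
        if mutual and start is None:
            start = i
        elif not mutual and start is not None:
            segments.append((start, i - 1))
            start = None
    if start is not None:
        segments.append((start, len(frames) - 1))
    return segments
```

This assumes the two recordings are already synchronised frame by frame; in practice the two trackers' timestamps would need to be aligned first.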

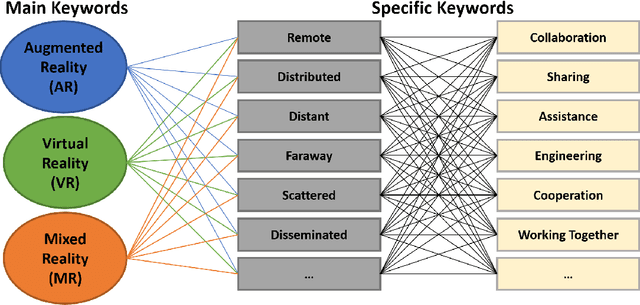

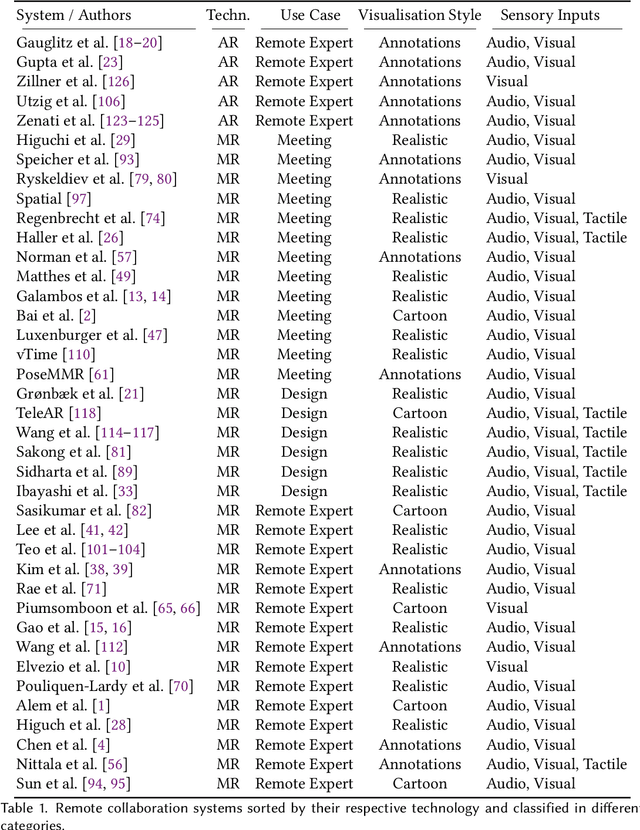

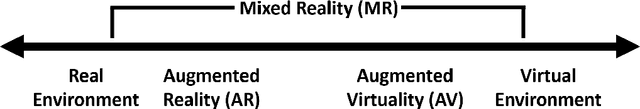

A Survey on Synchronous Augmented, Virtual and Mixed Reality Remote Collaboration Systems

Feb 11, 2021

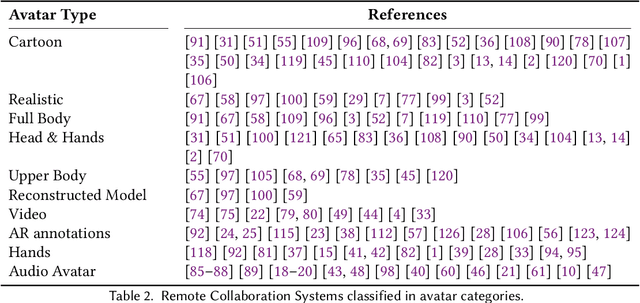

Abstract: Remote collaboration systems have become increasingly important in today's society, especially during times when physical distancing is advised. Industry, research and individuals face the challenging task of collaborating and networking over long distances. While video and teleconferencing are already widespread, collaboration systems in augmented, virtual, and mixed reality are still a niche technology. We provide an overview of recent developments in synchronous remote collaboration systems and create a taxonomy by dividing them into the three main components that form such systems: Environment, Avatars, and Interaction. A thorough overview of existing systems is given, categorising their main contributions to help researchers working in different fields by providing concise information about specific topics such as avatars, virtual environments, visualisation styles and interaction. The focus of this work is clearly on synchronous collaboration over a distance. A total of 82 unique systems for remote collaboration are discussed, including more than 100 publications and 25 commercial systems.

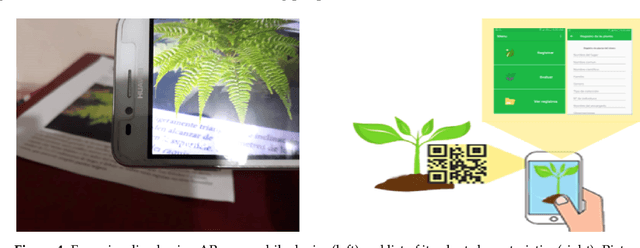

A survey on applications of augmented, mixed and virtual reality for nature and environment

Aug 28, 2020

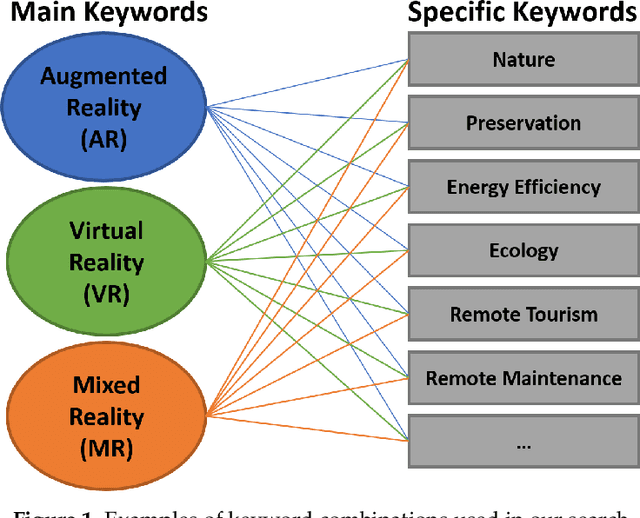

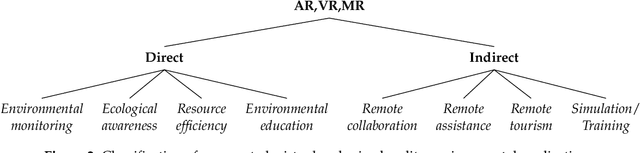

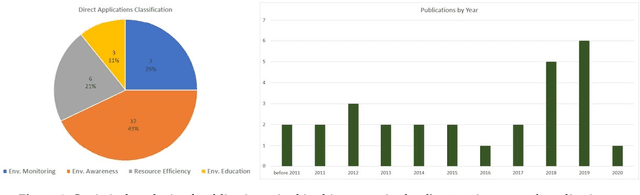

Abstract: Augmented reality (AR), virtual reality (VR) and mixed reality (MR) are technologies of great potential due to the engaging and enriching experiences they are capable of providing. Their use is rapidly increasing in diverse fields such as medicine, manufacturing and entertainment. However, the possibilities that AR, VR and MR offer in the area of environmental applications are not yet widely explored. In this paper, we present the outcome of a survey meant to discover and classify existing AR/VR/MR applications that can benefit the environment or increase awareness of environmental issues. We performed an exhaustive search over several online publication access platforms and past proceedings of major conferences in the fields of AR/VR/MR. Identified relevant papers were filtered based on novelty, technical soundness, impact and topic relevance, and classified into different categories. Referring to the selected papers, we discuss how the applications of each category contribute to environmental protection, preservation and sensitization. We further analyse these approaches as well as possible future directions in the scope of existing and upcoming AR/VR/MR enabling technologies.
