Alexander Fritzler

Higher School of Economics, Skolkovo Institute of Science and Technology, Yandex

Personalized Transformer-based Ranking for e-Commerce at Yandex

Oct 09, 2023

Abstract: Personalizing user experience with high-quality recommendations based on user activity is vital for e-commerce platforms. This is particularly important in scenarios where the user's intent is not explicit, such as on the homepage. Recently, personalized embedding-based systems have significantly improved the quality of recommendations and search in the e-commerce domain. However, most of these works focus on enhancing the retrieval stage. In this paper, we demonstrate that features produced by retrieval-focused deep learning models are suboptimal for the ranking stage in e-commerce recommendations. To address this issue, we propose a two-stage training process that fine-tunes two-tower models to achieve optimal ranking performance. We provide a detailed description of our transformer-based two-tower model architecture, which is specifically designed for personalization in e-commerce. Additionally, we introduce a novel technique for debiasing context in offline models and report significant improvements in ranking performance when using web-search queries for e-commerce recommendations. Our model has been successfully deployed at Yandex, serves millions of users daily, and has delivered strong performance in online A/B testing.

Quantization of Generative Adversarial Networks for Efficient Inference: a Methodological Study

Aug 31, 2021

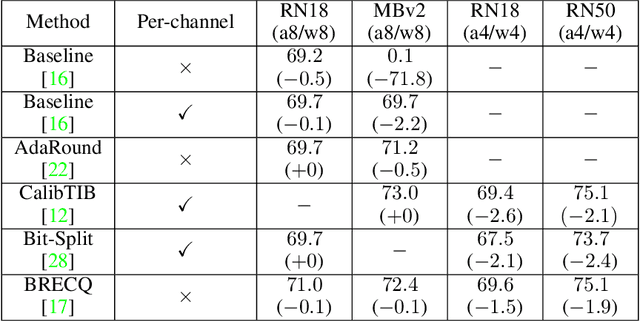

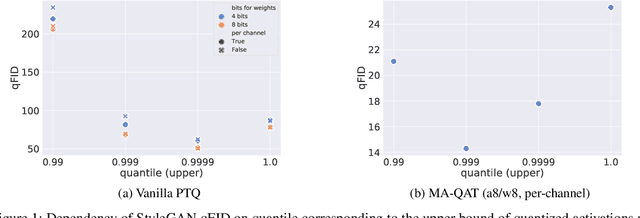

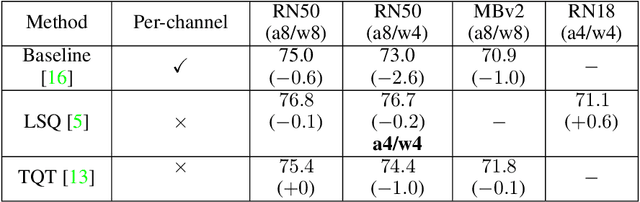

Abstract: Generative adversarial networks (GANs) have an enormous potential impact on digital content creation, e.g., photo-realistic digital avatars, semantic content editing, and quality enhancement of speech and images. However, the performance of modern GANs comes at the cost of massive amounts of computation during inference and high energy consumption, which complicates, or even makes impossible, their deployment on edge devices. The problem can be mitigated with quantization, a neural network compression technique that facilitates hardware-friendly inference by replacing floating-point computations with low-bit integer ones. While quantization is well established for discriminative models, the performance of modern quantization techniques when applied to GANs remains unclear. GANs generate content of a more complex structure than discriminative models, and thus quantization of GANs is significantly more challenging. To tackle this problem, we perform an extensive experimental study of state-of-the-art quantization techniques on three diverse GAN architectures, namely StyleGAN, Self-Attention GAN, and CycleGAN. As a result, we discovered practical recipes that allowed us to successfully quantize these models for inference with 4/8-bit weights and 8-bit activations while preserving the quality of the original full-precision models.
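
To make the idea of replacing floating-point values with low-bit integers concrete, here is a minimal sketch of generic uniform affine quantization. It is not the recipe from the paper: the function names `quantize` and `dequantize`, the min/max range calibration, and the toy weight values are all assumptions chosen for illustration.

```python
def quantize(values, num_bits=8):
    """Map a list of floats to unsigned integers in [0, 2**num_bits - 1].

    Illustrative uniform affine scheme (hypothetical helper, not the paper's method).
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    # Extend the range so that 0.0 is always exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)     # integer that represents 0.0
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate floats from the integer representation."""
    return [(x - zero_point) * scale for x in q]


# Toy "weights" (made-up values) quantized to 8 and to 4 bits.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q8, scale8, zp8 = quantize(weights, num_bits=8)
q4, scale4, zp4 = quantize(weights, num_bits=4)
restored8 = dequantize(q8, scale8, zp8)
restored4 = dequantize(q4, scale4, zp4)
```

The round-trip error of each value is bounded by the step size `scale`, so the 4-bit version is visibly coarser than the 8-bit one. Quantizing a real GAN would also involve choices this sketch does not make, such as how ranges are calibrated and whether the model is fine-tuned afterwards.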

Few-shot classification in Named Entity Recognition Task

Dec 14, 2018

Abstract: For many natural language processing (NLP) tasks, the amount of annotated data is limited. This creates a need to apply semi-supervised learning techniques, such as transfer learning or meta-learning. In this work, we tackle the Named Entity Recognition (NER) task using a Prototypical Network, a metric learning technique. It learns intermediate representations of words which cluster well into named entity classes. This property of the model allows classifying words with an extremely limited number of training examples, and can potentially be used as a zero-shot learning method. By coupling this technique with transfer learning, we achieve well-performing classifiers trained on only 20 instances of a target class.
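
The classification step of a prototypical network can be sketched as follows: each class is represented by the mean of its few labeled embeddings, and a new word is assigned to the class with the nearest prototype. This is a minimal illustration only. The helper names, the 2-D toy embeddings, the `PER`/`LOC` labels, and the use of squared Euclidean distance are assumptions; in the actual model the embeddings would come from a trained encoder.

```python
from collections import defaultdict


def build_prototypes(support):
    """Average the embeddings of each class.

    `support` is a list of (embedding, label) pairs; returns {label: prototype}.
    """
    grouped = defaultdict(list)
    for embedding, label in support:
        grouped[label].append(embedding)
    return {
        label: [sum(dim) / len(embs) for dim in zip(*embs)]
        for label, embs in grouped.items()
    }


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, prototypes):
    """Return the label whose prototype is closest to the query embedding."""
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


# Hypothetical support set: two labeled word embeddings per entity class.
support = [
    ([1.0, 0.0], "PER"),
    ([0.8, 0.2], "PER"),
    ([0.0, 1.0], "LOC"),
    ([0.2, 0.8], "LOC"),
]
prototypes = build_prototypes(support)
predicted = classify([0.9, 0.2], prototypes)
```

Because a new class only needs a prototype, adding it requires a handful of labeled examples and no change to the classifier itself, which is what makes the approach attractive in the few-shot setting.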
