Alex Kyllo

Fairness in Federated Learning for Spatial-Temporal Applications

Jan 20, 2022

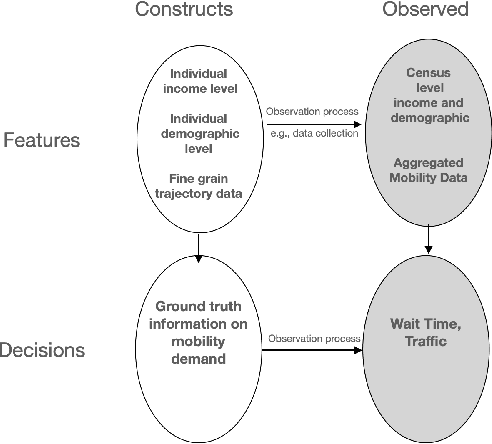

Abstract: Federated learning involves training statistical models over remote devices such as mobile phones while keeping data localized. Training in heterogeneous and potentially massive networks introduces opportunities for privacy-preserving data analysis and for diversifying these models so that they become more inclusive of the population. Federated learning can be viewed as a unique opportunity to bring fairness and parity to many existing models, because it enables model training on a diverse set of participants and on data that is generated regularly and dynamically. In this paper, we discuss the metrics and approaches currently available to measure and evaluate fairness in the context of spatial-temporal models. We propose ways to redefine these metrics and approaches to address the challenges of the federated learning setting.

Privacy-Aware Human Mobility Prediction via Adversarial Networks

Jan 19, 2022

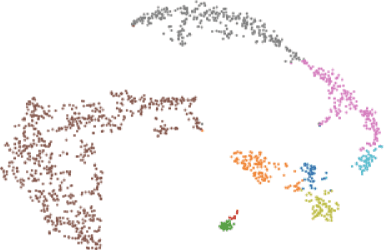

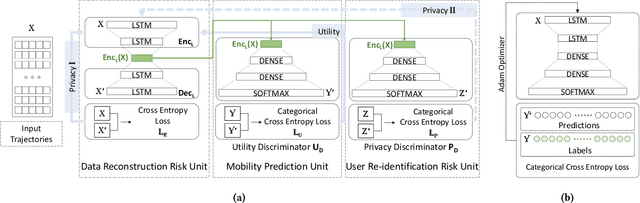

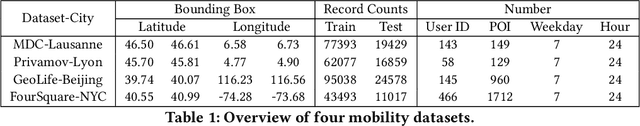

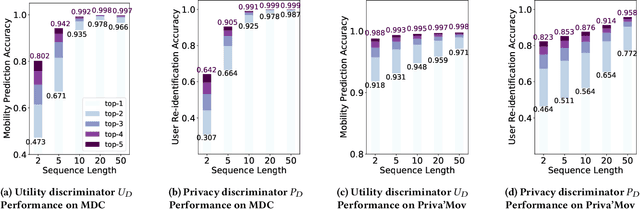

Abstract: As mobile devices and location-based services are increasingly developed for smart city scenarios and applications, many unexpected privacy leakages have arisen from the collection and sharing of geolocated data. While these geolocated data could provide a rich understanding of human mobility patterns and address various societal research questions, privacy concerns for users' sensitive information have limited their utilization. In this paper, we design and implement a novel LSTM-based adversarial mechanism with representation learning to attain a privacy-preserving feature representation of the original geolocated data (mobility data) that is suitable for sharing. We quantify the utility-privacy trade-off of mobility datasets in terms of trajectory reconstruction risk, user re-identification risk, and mobility predictability. Our proposed architecture reports a Pareto frontier analysis that enables the user to assess this trade-off as a function of the Lagrangian loss weight parameters. Extensive comparison results on four representative mobility datasets demonstrate the superiority of our proposed architecture and the efficiency of the proposed privacy-preserving feature extractor. Our results show that by exploring Pareto-optimal settings, we can simultaneously increase both privacy (45%) and utility (32%).
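
The Pareto frontier analysis mentioned in the abstract can be illustrated with a minimal sketch. It assumes each loss-weight setting has already been evaluated to a (privacy, utility) pair where higher is better on both axes; the function name `pareto_front` and the scores below are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (privacy, utility), both to be maximized


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the points that no other point dominates.

    A point q dominates p when q is at least as good as p on both
    axes and strictly better on at least one.
    """
    front = []
    for i, (p_priv, p_util) in enumerate(points):
        dominated = any(
            q_priv >= p_priv and q_util >= p_util
            and (q_priv > p_priv or q_util > p_util)
            for j, (q_priv, q_util) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append((p_priv, p_util))
    return sorted(front)


# Hypothetical scores, one per loss-weight setting (illustrative only).
scores = [(0.2, 0.9), (0.5, 0.7), (0.4, 0.6), (0.8, 0.3), (0.5, 0.5)]
front = pareto_front(scores)
print(front)
```

Settings on the returned front are the ones where privacy cannot be improved without giving up utility, which is the trade-off a user would inspect when choosing loss weights.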
