Alessandro Navone

Autonomous Robotic Pruning in Orchards and Vineyards: a Review

May 12, 2025

Abstract: Manual pruning is labor-intensive and represents up to 25% of annual labor costs in fruit production, notably in apple orchards and vineyards, where operational challenges and cost constraints limit the adoption of large-scale machinery. In response, a growing body of research is investigating compact, flexible robotic platforms capable of precise pruning in varied terrains, particularly where traditional mechanization falls short. This paper reviews recent advances in autonomous robotic pruning for orchards and vineyards, addressing a critical need in precision agriculture. Our review examines literature published between 2014 and 2024, focusing on innovative contributions across key system components. Special attention is given to recent developments in machine vision, perception, plant skeletonization, and control strategies, areas that have been strongly influenced by advances in artificial intelligence and machine learning. The analysis situates these technological trends within broader agricultural challenges, including rising labor costs, a decline in the number of young farmers, and the diverse pruning requirements of different fruit species such as apple, grapevine, and cherry trees. By comparing various robotic architectures and methodologies, this survey not only highlights the progress made toward autonomous pruning but also identifies critical open challenges and future research directions. The findings underscore the potential of robotic systems to bridge the gap between manual and mechanized operations, paving the way for more efficient, sustainable, and precise agricultural practices.

Adaptive Robot Localization with Ultra-wideband Novelty Detection

May 09, 2025

Abstract: Ultra-wideband (UWB) technology has shown remarkable potential as a low-cost general solution for robot localization. However, the precision of UWB positioning is limited by disturbances caused by the environment itself, due to reflectance, multi-path effects, and Non-Line-of-Sight (NLoS) conditions. This problem is exacerbated in cluttered indoor spaces, where service robotic platforms usually operate. Both model-based and learning-based methods are currently under investigation to precisely predict UWB error patterns. Despite their great capability in approximating strong non-linearities, learning-based methods often do not consider environmental factors and require data collection and re-training for unseen data distributions, which makes them impractical on a large scale. The goal of this research is to develop a robust and adaptive UWB localization method for confined indoor spaces. A novelty detection technique based on a semi-supervised autoencoder is used to recognize outlier conditions with respect to nominal UWB range data. The obtained novelty scores are then combined with an Extended Kalman filter, leveraging a dynamic estimation of covariance and bias error for each range measurement received from the UWB anchors. The result is a compact, flexible, and robust system that adapts the trustworthiness of UWB data spatially and temporally in the environment. Extensive experimentation with a real robot in a wide range of testing scenarios demonstrates the benefits of the proposed solution in cluttered indoor spaces presenting NLoS conditions, reaching an average improvement of almost 60% and more than 25 cm in absolute positioning error.
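The idea of letting a novelty score modulate how much an Extended Kalman filter trusts each UWB range can be illustrated with a minimal sketch. This is not the paper's implementation: the 2D position-only state, the function names, and the linear rules that map a novelty score to measurement variance (`gain`) and range bias (`bias_gain`) are all assumptions chosen for illustration.

```python
import math

def inflate_noise(base_var, novelty_score, gain=10.0):
    """Hypothetical rule: grow the range variance linearly with the novelty score."""
    return base_var * (1.0 + gain * max(novelty_score, 0.0))

def ekf_range_update(x, P, anchor, z, novelty_score, base_var=0.01, bias_gain=0.0):
    """One EKF update of a 2D position from a single anchor range.

    x: (px, py) position estimate, P: 2x2 covariance as nested lists,
    anchor: (ax, ay), z: measured range, novelty_score: assumed >= 0.
    """
    px, py = x
    dx, dy = px - anchor[0], py - anchor[1]
    d = math.hypot(dx, dy)
    if d < 1e-9:
        return x, P  # Jacobian undefined at the anchor; skip the update
    H = (dx / d, dy / d)                      # Jacobian of range w.r.t. position
    R = inflate_noise(base_var, novelty_score)
    bias = bias_gain * novelty_score          # assumed bias model (illustrative)
    PHt = (P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1])
    S = H[0] * PHt[0] + H[1] * PHt[1] + R     # innovation variance (scalar)
    K = (PHt[0] / S, PHt[1] / S)              # Kalman gain
    innovation = (z - bias) - d
    x_new = (px + K[0] * innovation, py + K[1] * innovation)
    # P_new = (I - K H) P
    P_new = [[P[i][j] - K[i] * (H[0] * P[0][j] + H[1] * P[1][j])
              for j in range(2)] for i in range(2)]
    return x_new, P_new

if __name__ == "__main__":
    P0 = [[1.0, 0.0], [0.0, 1.0]]
    trusted, _ = ekf_range_update((1.0, 0.0), P0, (0.0, 0.0), 2.0, novelty_score=0.0)
    doubted, _ = ekf_range_update((1.0, 0.0), P0, (0.0, 0.0), 2.0, novelty_score=1.0)
    print(trusted, doubted)
```

With the same measurement, the update with the higher novelty score moves the estimate less, which is the intended effect of down-weighting ranges flagged as anomalous.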

GPS-free Autonomous Navigation in Cluttered Tree Rows with Deep Semantic Segmentation

Apr 08, 2024

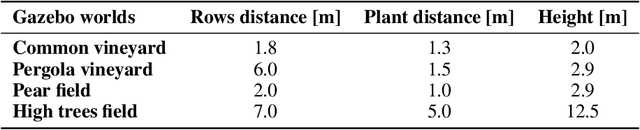

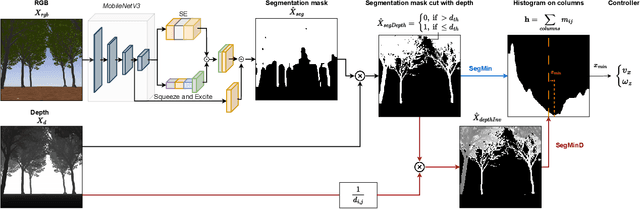

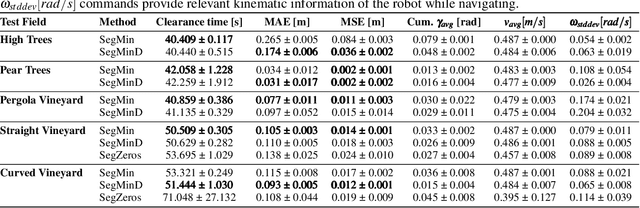

Abstract: Segmentation-based autonomous navigation has recently been presented as an appealing approach to guiding robotic platforms through crop rows without requiring perfect GPS localization. Nevertheless, current techniques are restricted to situations where the distinct separation between the plants and the sky allows for the identification of the row's center. Yet tall, dense vegetation, such as high tree rows and orchards, is the primary cause of GPS signal blockage. In this study, we increase the overall robustness and adaptability of the control algorithm by extending segmentation-based robotic guidance to those cases where canopies and branches occlude the sky and prevent the use of GPS and earlier approaches. An efficient Deep Neural Network architecture is used to address semantic segmentation, and it is trained with synthetic data only. Extensive testing in numerous vineyards and tree fields, both in simulation and in the real world, shows the solution's competitive benefits.

Semi-Supervised Novelty Detection for Precise Ultra-Wideband Error Signal Prediction

Apr 08, 2024

Abstract: Ultra-Wideband (UWB) technology is an emerging low-cost solution for localization in a generic environment. However, the UWB signal can be affected by signal reflections and non-line-of-sight (NLoS) conditions between anchors; hence, in a broader sense, the specific geometry of the environment and the disposition of obstructing elements in the map may drastically hinder the reliability of UWB for precise robot localization. This work aims to mitigate this problem by learning a map-specific characterization of the UWB signal quality with a fingerprint semi-supervised novelty detection methodology. An unsupervised autoencoder neural network is trained on nominal UWB map conditions, and it is then used to predict errors derived from the introduction of perturbing novelties in the environment. This work poses a step change in the understanding of UWB localization and its reliability in evolving environmental conditions. The performance of the proposed method is demonstrated by fine-grained experiments with a visual tracking ground truth.

Non-linear Model Predictive Control for Multi-task GPS-free Autonomous Navigation in Vineyards

Apr 08, 2024

Abstract: Autonomous navigation is the foundation of agricultural robots. This paper focuses on developing an advanced autonomous navigation system for a rover operating within row-based crops. A position-agnostic system is proposed to address the challenging situation in which standard localization methods, like GPS, fail due to unfavorable weather or obstructed signals. This is especially vital in densely vegetated regions, including areas covered by thick tree canopies or pergola vineyards. This work proposes a novel system that leverages a single RGB-D camera and a Non-linear Model Predictive Control strategy to navigate through entire rows, adapting to various crop spacings. The presented solution demonstrates versatility in handling diverse crop densities, environmental factors, and multiple navigation tasks to support agricultural activities with an extremely cost-effective implementation. Experimental validation in simulated and real vineyards underscores the system's robustness and competitiveness in both standard row traversal and target-object approach.

Lavender Autonomous Navigation with Semantic Segmentation at the Edge

Sep 13, 2023

Abstract: Success in agricultural activities relies heavily on precise navigation in row crop fields. Recently, segmentation-based navigation has emerged as a reliable technique when GPS-based localization is unavailable due to vegetation or unfavorable weather conditions, or when higher accuracy is needed. It is also useful when plants grow rapidly and require an online adaptation of the navigation algorithm. This work applies a segmentation-based visual agnostic navigation algorithm to lavender fields, considering both simulation and real-world scenarios. The effectiveness of this approach is validated through a wide set of experimental tests, which show the capability of the proposed solution to generalize over different scenarios and provide highly reliable results.

Autonomous Navigation in Rows of Trees and High Crops with Deep Semantic Segmentation

Apr 18, 2023

Abstract: Segmentation-based autonomous navigation has recently been proposed as a promising methodology to guide robotic platforms through crop rows without requiring precise GPS localization. However, existing methods are limited to scenarios where the centre of the row can be identified thanks to the sharp distinction between the plants and the sky, whereas GPS signal obstruction mainly occurs in the case of tall, dense vegetation, such as high tree rows and orchards. In this work, we extend segmentation-based robotic guidance to those scenarios where canopies and branches occlude the sky and hinder the usage of GPS and previous methods, increasing the overall robustness and adaptability of the control algorithm. Extensive experimentation on several realistic simulated tree fields and vineyards demonstrates the competitive advantages of the proposed solution.

Domain Generalization for Crop Segmentation with Knowledge Distillation

Apr 03, 2023

Abstract: In recent years, precision agriculture has gradually moved farming toward automation to support all the activities related to field management. Service robotics plays a predominant role in this evolution by deploying autonomous agents that can navigate fields while performing tasks such as monitoring, spraying, and harvesting without human intervention. To execute these precise actions, mobile robots need a real-time perception system that understands their surroundings and identifies their targets in the wild. Generalizing to new crops and environmental conditions is critical for practical applications, as labeled samples are rarely available. In this paper, we investigate the problem of crop segmentation and propose a novel approach to enhance domain generalization using knowledge distillation. In the proposed framework, we transfer knowledge from an ensemble of models individually trained on source domains to a student model that can adapt to unseen target domains. To evaluate the proposed method, we present a synthetic multi-domain dataset for crop segmentation containing more than 50,000 samples, with plants of varied shapes and covering different terrain styles, weather conditions, and light scenarios. We demonstrate significant improvements in performance over state-of-the-art methods. Our approach provides a promising solution for domain generalization in crop segmentation and has the potential to enhance precision agriculture applications.
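As a rough illustration of distilling an ensemble of teachers into one student, the sketch below averages temperature-softened teacher predictions and measures the KL divergence to the student's softened prediction for a single pixel. The abstract does not specify the loss or how teacher outputs are aggregated, so the averaging rule, the temperature value, and all function names here are assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of class logits."""
    scaled = [v / temperature for v in logits]
    peak = max(scaled)
    exps = [math.exp(v - peak) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_soft_targets(teacher_logits, temperature=2.0):
    """Average the softened class probabilities of all teachers (assumed rule)."""
    probs = [softmax(logits, temperature) for logits in teacher_logits]
    n_teachers = len(probs)
    n_classes = len(probs[0])
    return [sum(p[c] for p in probs) / n_teachers for c in range(n_classes)]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher ensemble || student) for one pixel, both softened by temperature."""
    target = ensemble_soft_targets(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * math.log(t / s) for t, s in zip(target, student) if t > 0.0)

if __name__ == "__main__":
    # Two hypothetical teachers (one per source domain), two classes: crop / background.
    teachers = [[2.0, 0.0], [1.0, 0.5]]
    print(distillation_loss([1.5, 0.2], teachers))
    print(distillation_loss([0.0, 2.0], teachers))
```

In a segmentation setting this per-pixel term would be averaged over all pixels of the image and typically combined with a supervised loss where labels exist.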

Online Learning of Wheel Odometry Correction for Mobile Robots with Attention-based Neural Network

Mar 21, 2023

Abstract: Modern robotic platforms need a reliable localization system to operate daily beside humans. Simple pose estimation algorithms based on filtered wheel and inertial odometry often fail in the presence of abrupt kinematic changes and wheel slips. Moreover, despite the recent success of visual odometry, service and assistive robotic tasks often present challenging environmental conditions where vision-based solutions fail due to poor lighting or repetitive feature patterns. In this work, we propose an innovative online learning approach for wheel odometry correction, paving the way for a robust multi-source localization system. An efficient attention-based neural network architecture has been studied to combine precise performance with real-time inference. The proposed solution shows remarkable results compared to a standard neural network and filter-based odometry correction algorithms. In addition, the online learning paradigm avoids the time-consuming data collection procedure and can be adopted on a generic robotic platform on the fly.
