Aleksandar Pavlovic

Differentiable Reasoning about Knowledge Graphs with Region-based Graph Neural Networks

Jun 13, 2024

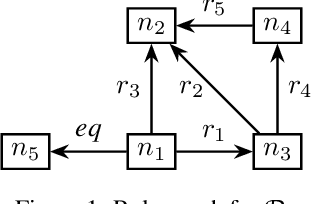

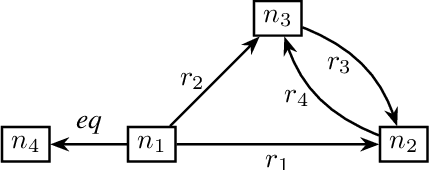

Abstract: Methods for knowledge graph (KG) completion need to capture semantic regularities and use these regularities to infer plausible knowledge that is not explicitly stated. Most embedding-based methods are opaque in the kinds of regularities they can capture, although region-based KG embedding models have emerged as a more transparent alternative. By modeling relations as geometric regions in high-dimensional vector spaces, such models can explicitly capture semantic regularities in terms of the spatial arrangement of these regions. Unfortunately, existing region-based approaches are severely limited in the kinds of rules they can capture. We argue that this limitation arises because the considered regions are defined as the Cartesian product of two-dimensional regions. As an alternative, in this paper, we propose RESHUFFLE, a simple model based on ordering constraints that can faithfully capture a much larger class of rule bases than existing approaches. Moreover, the embeddings in our framework can be learned by a monotonic Graph Neural Network (GNN), which effectively acts as a differentiable rule base. This approach has the important advantage that embeddings can be easily updated as new knowledge is added to the KG. At the same time, since the resulting representations can be used similarly to standard KG embeddings, our approach is significantly more efficient than existing approaches to differentiable reasoning.
