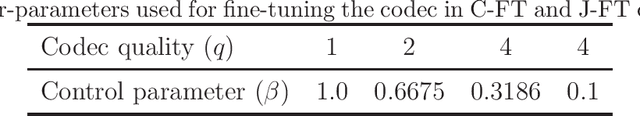

Akshay Pushparaja

End-to-end optimized image compression for multiple machine tasks

Mar 06, 2021

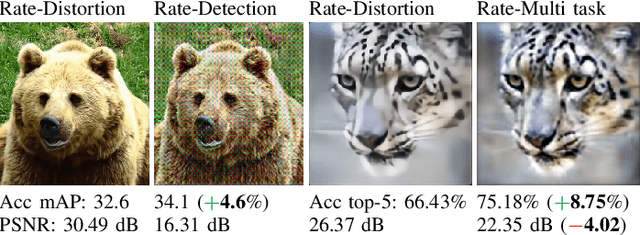

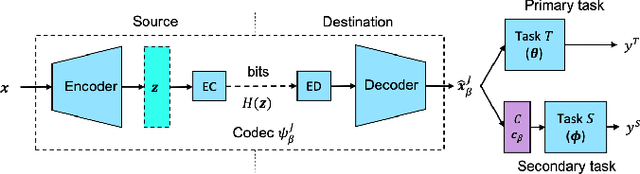

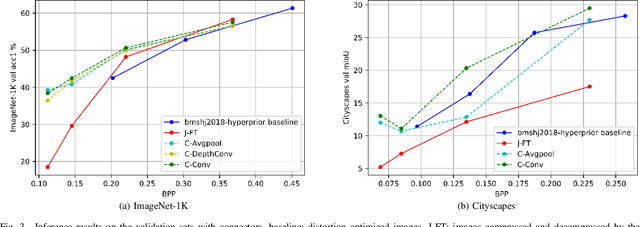

Abstract: An increasing share of captured images and videos is transmitted for storage and remote analysis by computer vision algorithms, rather than to be viewed by humans. Contrary to traditional standard codecs with engineered tools, neural network based codecs can be trained end-to-end to optimally compress images with respect to a target rate and any given differentiable performance metric. Although it is possible to train such compression tools to achieve better rate-accuracy performance for a particular computer vision task, it could be practical and relevant to re-use the compressed bit-stream for multiple machine tasks. For this purpose, we introduce 'Connectors' that are inserted between the decoder and the task algorithms to enable a direct transformation of the compressed content, which was previously optimized for a specific task, to multiple other machine tasks. We demonstrate the effectiveness of the proposed method by achieving significant rate-accuracy performance improvement for both image classification and object segmentation, using the same bit-stream, originally optimized for object detection.

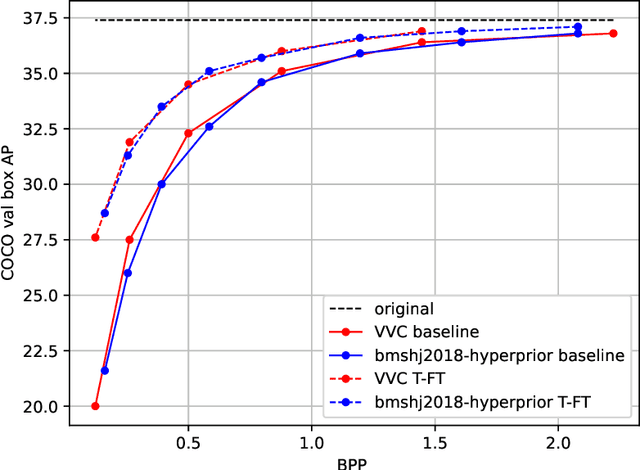

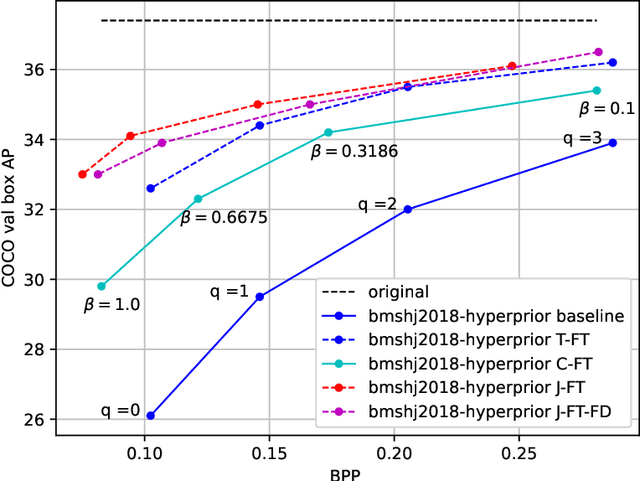

End-to-end optimized image compression for machines, a study

Nov 10, 2020

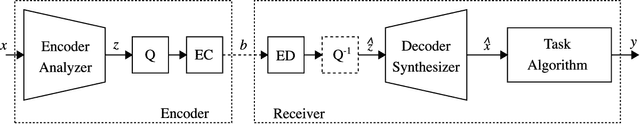

Abstract: An increasing share of image and video content is analyzed by machines rather than viewed by humans, and therefore it becomes relevant to optimize codecs for such applications where the analysis is performed remotely. Unfortunately, conventional coding tools are challenging to specialize for machine tasks as they were originally designed for human perception. However, neural network based codecs can be jointly trained end-to-end with any convolutional neural network (CNN)-based task model. In this paper, we propose to study an end-to-end framework enabling efficient image compression for remote machine task analysis, using a chain composed of a compression module and a task algorithm that can be optimized end-to-end. We show that it is possible to significantly improve the task accuracy when jointly fine-tuning the codec and the task networks, especially at low bit-rates. Depending on training or deployment constraints, selective fine-tuning can be applied only on the encoder, decoder or task network and still achieve rate-accuracy improvements over an off-the-shelf codec and task network. Our results also demonstrate the flexibility of end-to-end pipelines for practical applications.
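
As a rough illustration of what optimizing such a chain end-to-end can mean, the joint objective is often written as a rate term plus a weighted task loss. The notation below is an assumption made for illustration and is not taken from the paper: $g_a$ and $g_s$ denote the encoder and decoder with parameters $\phi$ and $\theta$, $T$ is the task network with parameters $\psi$, $Q$ is quantization, $R$ is the estimated bit-rate, $t$ is the task label, and $\lambda$ trades rate against task accuracy.

$$
\mathcal{L}(\phi, \theta, \psi) = R(\hat{y}) + \lambda \, \mathcal{L}_{\text{task}}\big(T_{\psi}(g_{s,\theta}(\hat{y})),\, t\big), \qquad \hat{y} = Q\big(g_{a,\phi}(x)\big)
$$

Under this reading, joint fine-tuning minimizes $\mathcal{L}$ over all of $\phi$, $\theta$ and $\psi$, while selective fine-tuning minimizes it over only one of them and keeps the others frozen.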

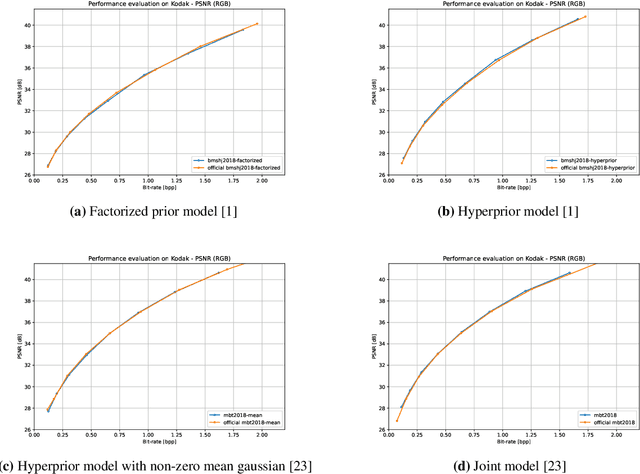

CompressAI: a PyTorch library and evaluation platform for end-to-end compression research

Nov 05, 2020

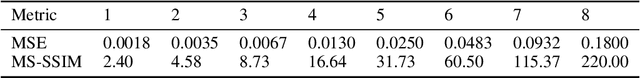

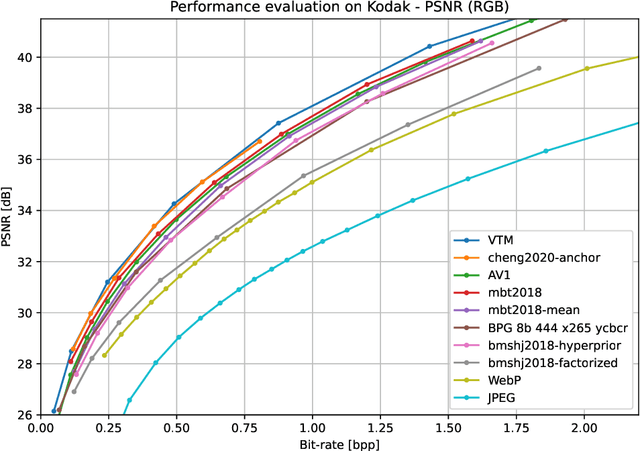

Abstract: This paper presents CompressAI, a platform that provides custom operations, layers, models and tools to research, develop and evaluate end-to-end image and video compression codecs. In particular, CompressAI includes pre-trained models and evaluation tools to compare learned methods with traditional codecs. Multiple models from the state of the art in learned end-to-end compression have thus been reimplemented in PyTorch and trained from scratch. We also report objective comparison results using PSNR and MS-SSIM metrics vs. bit-rate, using the Kodak image dataset as test set. Although this framework currently implements models for still-picture compression, it is intended to be soon extended to the video compression domain.
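
To make the "PSNR vs. bit-rate" comparison concrete, here is a minimal sketch of how one point on such a curve could be computed. It uses only the Python standard library and does not call CompressAI. The function names `psnr` and `bits_per_pixel` are hypothetical helpers introduced for illustration, and images are assumed to be flat sequences of 8-bit sample values.

```python
import math


def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    if len(reference) != len(distorted) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


def bits_per_pixel(num_bytes, width, height):
    """Bit-rate of a compressed image, normalized by its pixel count."""
    return 8.0 * num_bytes / (width * height)
```

Each codec setting then yields one (bits per pixel, PSNR) pair per test image, and averaging the pairs over the test set gives one point on the rate-distortion curve.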
