Ajesh Koyatan Chathoth

University of Pittsburgh

Contrastive Continual Learning for Model Adaptability in Internet of Things

Feb 04, 2026

Abstract:Internet of Things (IoT) deployments operate in nonstationary, dynamic environments where factors such as sensor drift, evolving user behavior, and heterogeneous user privacy requirements can affect application utility. Continual learning (CL) addresses this by adapting models over time without catastrophic forgetting. Meanwhile, contrastive learning has emerged as a powerful representation-learning paradigm that improves robustness and sample efficiency in a self-supervised manner. This paper reviews the use of contrastive continual learning (CCL) for IoT, connecting algorithmic design (replay, regularization, distillation, prompts) with IoT system realities (TinyML constraints, intermittent connectivity, privacy). We present a unifying problem formulation, derive common objectives that blend contrastive and distillation losses, propose an IoT-oriented reference architecture for on-device, edge, and cloud-based CCL, and provide guidance on evaluation protocols and metrics. Finally, we highlight open challenges unique to the IoT domain, such as spanning tabular and streaming IoT data, concept drift, federated settings, and energy-aware training.

Log Anomaly Detection with Large Language Models via Knowledge-Enriched Fusion

Dec 12, 2025

Abstract:System logs are a critical resource for monitoring and managing distributed systems, providing insights into failures and anomalous behavior. Traditional log analysis techniques, including template-based and sequence-driven approaches, often lose important semantic information or struggle with ambiguous log patterns. To address this, we present EnrichLog, a training-free, entry-based anomaly detection framework that enriches raw log entries with both corpus-specific and sample-specific knowledge. EnrichLog incorporates contextual information, including historical examples and reasoning derived from the corpus, to enable more accurate and interpretable anomaly detection. The framework leverages retrieval-augmented generation to integrate relevant contextual knowledge without requiring retraining. We evaluate EnrichLog on four large-scale system log benchmark datasets and compare it against five baseline methods. Our results show that EnrichLog consistently improves anomaly detection performance, effectively handles ambiguous log entries, and maintains efficient inference. Furthermore, incorporating both corpus- and sample-specific knowledge enhances model confidence and detection accuracy, making EnrichLog well-suited for practical deployments.

Dynamic Black-box Backdoor Attacks on IoT Sensory Data

Nov 18, 2025

Abstract:Sensor data-based recognition systems are widely used in various applications, such as gait-based authentication and human activity recognition (HAR). Modern wearable and smart devices feature various built-in Inertial Measurement Unit (IMU) sensors, and such sensor-based measurements can be fed to a machine learning-based model to train and classify human activities. While deep learning-based models have proven successful in classifying human activity and gestures, they pose various security risks. In our paper, we discuss a novel dynamic trigger-generation technique for performing black-box adversarial attacks on sensor data-based IoT systems. Our empirical analysis shows that the attack is successful on various datasets and classifier models with minimal perturbation of the input data. We also provide a detailed comparative analysis of its performance and stealthiness against various other poisoning techniques used in backdoor attacks, and we discuss some adversarial defense mechanisms and their impact on the effectiveness of our trigger-generation technique.

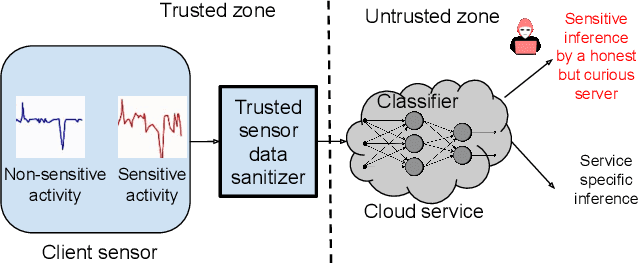

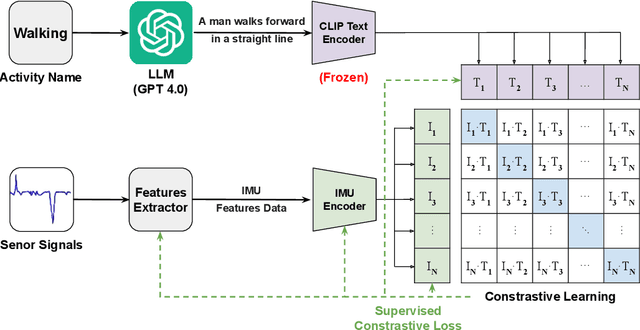

Dynamic User-controllable Privacy-preserving Few-shot Sensing Framework

Aug 06, 2025

Abstract:User-controllable privacy is important in modern sensing systems, as privacy preferences can vary significantly from person to person and may evolve over time. This is especially relevant in devices equipped with Inertial Measurement Unit (IMU) sensors, such as smartphones and wearables, which continuously collect rich time-series data that can inadvertently expose sensitive user behaviors. While prior work has proposed privacy-preserving methods for sensor data, most rely on static, predefined privacy labels or require large quantities of private training data, limiting their adaptability and user agency. In this work, we introduce PrivCLIP, a dynamic, user-controllable, few-shot privacy-preserving sensing framework. PrivCLIP allows users to specify and modify their privacy preferences by categorizing activities as sensitive (black-listed), non-sensitive (white-listed), or neutral (gray-listed). Leveraging a multimodal contrastive learning approach, PrivCLIP aligns IMU sensor data with natural language activity descriptions in a shared embedding space, enabling few-shot detection of sensitive activities. When a privacy-sensitive activity is identified, the system uses a language-guided activity sanitizer and a motion generation module (IMU-GPT) to transform the original data into a privacy-compliant version that semantically resembles a non-sensitive activity. We evaluate PrivCLIP on multiple human activity recognition datasets and demonstrate that it significantly outperforms baseline methods in terms of both privacy protection and data utility.

PCAP-Backdoor: Backdoor Poisoning Generator for Network Traffic in CPS/IoT Environments

Jan 26, 2025

Abstract:The rapid expansion of connected devices has made them prime targets for cyberattacks. To address these threats, deep learning-based, data-driven intrusion detection systems (IDS) have emerged as powerful tools for detecting and mitigating such attacks. These IDSs analyze network traffic to identify unusual patterns and anomalies that may indicate potential security breaches. However, prior research has shown that deep learning models are vulnerable to backdoor attacks, where attackers inject triggers into the model to manipulate its behavior and cause misclassifications of network traffic. In this paper, we explore the susceptibility of deep learning-based IDSs to backdoor attacks in the context of network traffic analysis. We introduce PCAP-Backdoor, a novel technique that facilitates backdoor poisoning attacks on PCAP datasets. Our experiments on real-world Cyber-Physical Systems (CPS) and Internet of Things (IoT) network traffic datasets demonstrate that attackers can effectively backdoor a model by poisoning as little as 1% or less of the entire training dataset. Moreover, we show that an attacker can introduce a trigger into benign traffic during model training yet cause the backdoored model to misclassify malicious traffic when the trigger is present. Finally, we highlight the difficulty of detecting this trigger-based backdoor, even when using existing backdoor defense techniques.

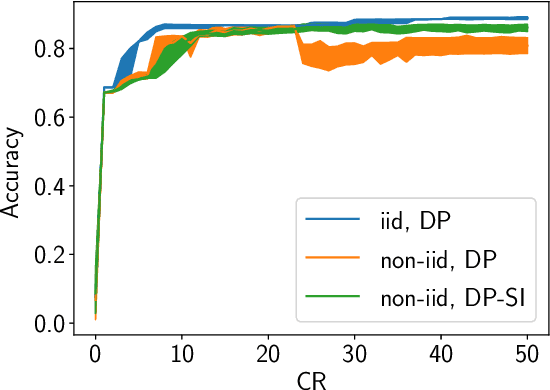

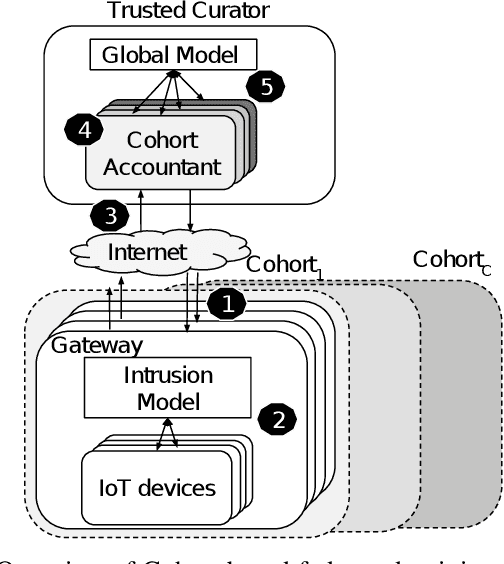

Federated Intrusion Detection for IoT with Heterogeneous Cohort Privacy

Jan 25, 2021

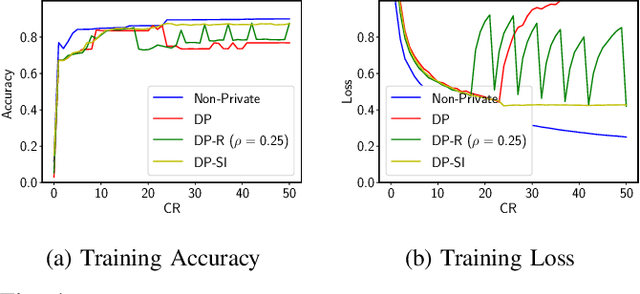

Abstract:Internet of Things (IoT) devices are becoming increasingly popular and are influencing many application domains such as healthcare and transportation. These devices are used for real-world applications such as sensor monitoring and real-time control. In this work, we look at differentially private (DP) neural network (NN) based network intrusion detection systems (NIDS) to detect intrusion attacks on networks of such IoT devices. Existing NN training solutions in this domain either ignore privacy considerations or assume that the privacy requirements are homogeneous across all users. We show that the performance of existing differentially private stochastic methods degrades for clients with non-identical data distributions when clients' privacy requirements are heterogeneous. We define a cohort-based $(\epsilon,\delta)$-DP framework that models the more practical setting of IoT device cohorts with non-identical clients and heterogeneous privacy requirements. We propose two novel continual learning-based DP training methods that are designed to improve model performance in the aforementioned setting. To the best of our knowledge, ours is the first system that employs a continual learning-based approach to handle heterogeneity in client privacy requirements. We evaluate our approach on real datasets and show that our techniques outperform the baselines. We also show that our methods are robust to hyperparameter changes. Lastly, we show that one of our proposed methods can easily adapt to post-hoc relaxations of client privacy requirements.
