Aida Ahmadzadegan

Learning to Utilize Correlated Auxiliary Classical or Quantum Noise

Jun 08, 2020

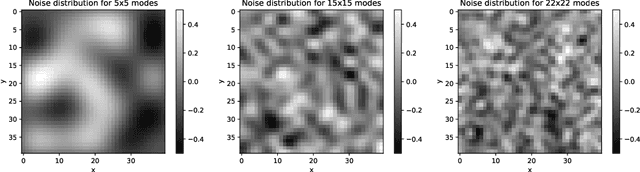

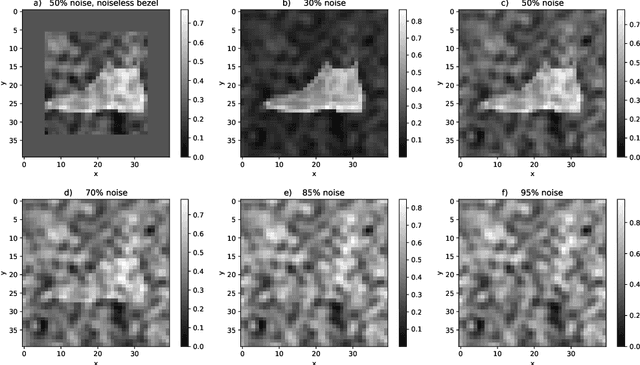

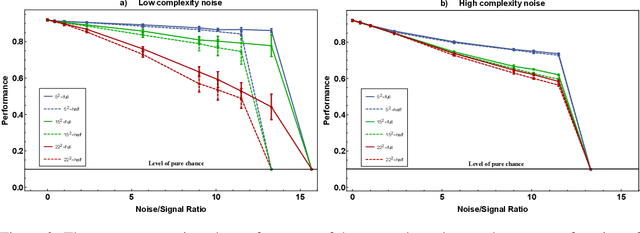

Abstract: This paper has two messages. First, we demonstrate that neural networks can learn to exploit correlations between noisy data and suitable auxiliary noise. In effect, the network learns to use the correlated auxiliary noise as an approximate key to decipher its noisy input data. Second, we show that the scaling behavior with increasing noise is such that future quantum machines should possess an advantage. As a concrete example, we reduce the image classification performance of convolutional neural networks (CNNs) by adding noise of different amounts and quality to the input images. We then demonstrate that the CNNs are able to partly recover their performance if, along with each noisy image, they are given auxiliary noise that is correlated with the image noise. We analyze how a CNN's ability to learn and utilize these noise correlations scales as the level, dimensionality, or complexity of the noise is increased. We thereby find numerical and theoretical indications that quantum machines, due to their efficiency in representing complex correlations, could possess a significant advantage over classical machines.
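To make the setup described in the abstract concrete, the following is a minimal sketch of how a noisy image and a correlated auxiliary noise sample might be generated. The abstract does not specify the noise model or how the auxiliary noise is fed to the CNN, so everything here is an assumption for illustration: Gaussian noise, a single correlation coefficient `rho`, the hypothetical helper `make_noisy_pair`, and stacking the two arrays as input channels.

```python
import numpy as np

def make_noisy_pair(image, noise_std=0.5, rho=0.9, rng=None):
    """Return (noisy_image, auxiliary_noise).

    Assumption: Gaussian noise, with the auxiliary noise constructed to have
    correlation coefficient `rho` with the noise added to the image.
    """
    rng = np.random.default_rng() if rng is None else rng
    image_noise = rng.normal(0.0, noise_std, size=image.shape)
    independent = rng.normal(0.0, noise_std, size=image.shape)
    # Mixing two independent Gaussians this way keeps the variance equal to
    # noise_std**2 and gives corr(image_noise, auxiliary) = rho.
    auxiliary = rho * image_noise + np.sqrt(1.0 - rho**2) * independent
    return image + image_noise, auxiliary

rng = np.random.default_rng(0)
image = rng.random((28, 28))  # stand-in for a grayscale input image

noisy, aux = make_noisy_pair(image, noise_std=0.5, rho=0.9, rng=rng)

# Assumed input layout: noisy image and auxiliary noise as two channels.
cnn_input = np.stack([noisy, aux], axis=0)

# Empirical correlation between the image noise and the auxiliary noise.
measured_rho = np.corrcoef((noisy - image).ravel(), aux.ravel())[0, 1]
print(cnn_input.shape, round(float(measured_rho), 2))
```

With `rho` close to 1 the auxiliary channel is nearly a copy of the image noise, so a network could in principle learn to subtract it; with `rho = 0` it carries no information about the image noise. This is the sense in which the auxiliary noise can act as an "approximate key".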
